The internal metric Google uses to determine search success is time to long click. Understanding this metric is important for search marketers in assessing changes to the search landscape and developing better optimization strategies.

Short Clicks vs Long Clicks

Back in 2009 I wrote about the difference between short clicks and long clicks. A long click occurs when a user performs a search, clicks on a result and remains on that site for a long period of time. In the optimal scenario they do not return to the search results to click on another result or reformulate their query.

A long click is a proxy for user satisfaction and success.

On the other hand, a short click occurs when a user performs a search, clicks on a result and returns to the search results quickly to click on another result or reformulate their query. Short clicks are an indication of dissatisfaction.

Google measures success by how fast a search result produces a long click.
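To make the distinction concrete, here's a minimal Python sketch of how a single click might be labeled. It assumes you know when the user clicked and when (if ever) they came back to the results. The 30-second threshold and the `Click` and `classify_click` names are hypothetical, since Google hasn't published where it draws the line.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical dwell-time threshold; Google has not published the value it uses.
LONG_CLICK_SECONDS = 30.0


@dataclass
class Click:
    url: str
    clicked_at: float                    # seconds after the search was issued
    returned_at: Optional[float] = None  # when the user came back to the results, if ever


def classify_click(click: Click, threshold: float = LONG_CLICK_SECONDS) -> str:
    """Label a click 'long' or 'short' based on how long the user stayed away."""
    if click.returned_at is None:
        # Never came back to the results: the optimal long click.
        return "long"
    dwell = click.returned_at - click.clicked_at
    return "long" if dwell >= threshold else "short"


print(classify_click(Click("https://example.com/a", clicked_at=2.0)))                   # long
print(classify_click(Click("https://example.com/b", clicked_at=2.0, returned_at=8.0)))  # short
```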

Bounce Rate vs Pogosticking

Before I continue I want to make sure we’re not conflating short clicks with bounce rate. While many bounces could be construed as short clicks, that’s not always the case. The bounce rate on Stack Overflow is probably very high. Users search for something specific, click through to a Stack Overflow result, get the answer they needed and move on with their life. This is not a bad thing. That’s actually a long click.

You can gain greater clarity on this by configuring an adjusted bounce rate or something even more advanced that takes into account the amount of time the user spent on the page. In the example above, you’d likely see that users spent a material amount of time on that one page, which would be a positive indicator.
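As a rough sketch, assuming you can measure time on the landing page (for example by firing a timed event), here's how a classic bounce rate compares with an adjusted one. The `Visit` record and the 30-second engagement cutoff are illustrative assumptions, not a Google Analytics setting.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Visit:
    pageviews: int
    seconds_on_page: float  # time on the landing page; assumes you track this somehow


def bounce_rate(visits: List[Visit]) -> float:
    """Classic bounce rate: the share of single-page visits."""
    if not visits:
        return 0.0
    return sum(v.pageviews == 1 for v in visits) / len(visits)


def adjusted_bounce_rate(visits: List[Visit], min_engaged_seconds: float = 30.0) -> float:
    """Count a visit as a bounce only if it was single-page AND short."""
    if not visits:
        return 0.0
    bounces = sum(v.pageviews == 1 and v.seconds_on_page < min_engaged_seconds for v in visits)
    return bounces / len(visits)


# Two visitors read the answer and left, one left immediately, one browsed on.
visits = [Visit(1, 120.0), Visit(1, 95.0), Visit(1, 5.0), Visit(2, 40.0)]
print(bounce_rate(visits))           # 0.75
print(adjusted_bounce_rate(visits))  # 0.25
```

The classic number treats the two readers who got their answer as failures; the adjusted number only flags the visitor who left within seconds.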

The behavior you want to avoid is pogosticking. This occurs when users click through on a result, return quickly to the search results and click on another result. This indicates, to some extent, that the user was not satisfied with the original result.

Two problems present themselves with pogosticking. The first is that it’s impossible for sites to measure this metric. That sort of sucks. We can only look at short bounces as a proxy and even then can’t be sure that the user pogosticked to another result.

The second is that some verticals will naturally produce pogosticking behavior. Health-related queries will show pogosticking behavior since users want to get multiple points of view (or opinions if you will) on that ailment or issue.

This could be overcome by measuring the normal pogosticking behavior for a vertical or query class and then determining which results produce lower and higher than normal pogosticking rates. I’m not sure Google is doing this but it’s not out of the question since they already have a robust understanding of query and vertical mapping.
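Here's one way that per-vertical baseline might work, sketched in Python. The event shape, the `pogostick_ratios` name and the example domains are hypothetical. The idea is simply to divide each result's pogosticking rate by the normal rate for its vertical, so a health result isn't penalized for behavior that's typical of health queries.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple

# One observation per result click: (vertical, result_url, pogosticked), where
# 'pogosticked' is True if the searcher came back quickly and clicked a
# different result. This event shape is a hypothetical simplification.
Event = Tuple[str, str, bool]


def pogostick_ratios(events: Iterable[Event]) -> Dict[Tuple[str, str], float]:
    """Each result's pogosticking rate divided by its vertical's baseline rate.

    Above 1.0: the result pogosticks more than is normal for its query class.
    Below 1.0: less than normal.
    """
    by_vertical: Dict[str, List[int]] = defaultdict(lambda: [0, 0])  # [pogosticks, clicks]
    by_result: Dict[Tuple[str, str], List[int]] = defaultdict(lambda: [0, 0])
    for vertical, url, pogosticked in events:
        for counts in (by_vertical[vertical], by_result[(vertical, url)]):
            counts[0] += int(pogosticked)
            counts[1] += 1

    ratios = {}
    for (vertical, url), (pogo, clicks) in by_result.items():
        v_pogo, v_clicks = by_vertical[vertical]
        baseline = v_pogo / v_clicks
        if baseline > 0:  # skip verticals where nobody pogosticks
            ratios[(vertical, url)] = (pogo / clicks) / baseline
    return ratios


events = [
    ("health", "a.example", True), ("health", "a.example", False),
    ("health", "b.example", True), ("health", "b.example", True),
    ("weather", "c.example", True), ("weather", "c.example", False),
    ("weather", "d.example", False), ("weather", "d.example", False),
]
ratios = pogostick_ratios(events)
print(ratios[("health", "a.example")], ratios[("weather", "c.example")])
```

In this toy data a.example and c.example both pogostick on half their clicks, but only c.example is worse than normal for its vertical.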

But I digress.

Speed

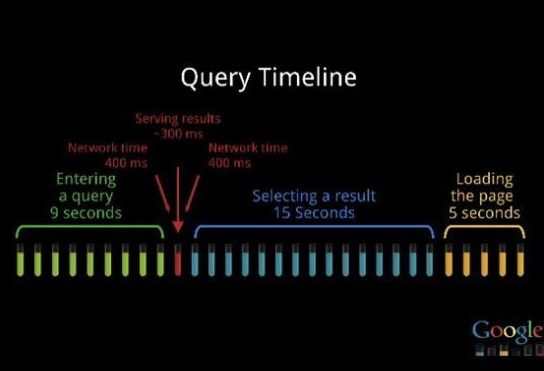

Part of the way Google works on reducing the time to long click is by improving the speed of search results and the Internet in general. Their own research showed the impact of speed on search results.

All other things being equal, more usage, as measured by number of searches, reflects more satisfied users. Our experiments demonstrate that slowing down the search results page by 100 to 400 milliseconds has a measurable impact on the number of searches per user of -0.2% to -0.6% (averaged over four or six weeks depending on the experiment). That’s 0.2% to 0.6% fewer searches for changes under half a second!

Remember that while usage was the metric used, they were trying to measure satisfaction. Making it faster to get to information made people happier and more likely to use search for future information requests. Google’s simply reducing the friction of searching.

But it’s not just how quickly Google presents results that matters. It’s how quickly Google gets someone to that long click. Search results that don’t produce long clicks are bad for business, as are those that increase the time it takes to select a result. And pogosticking blows up the query timeline as users loop back and tack on additional seconds of selection and page load time.
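A minimal sketch of measuring that timeline for one session, assuming a simplified log of queries, clicks and returns to the results page. The event format, the `time_to_long_click` name and the 30-second threshold are hypothetical. The clock starts at the first query so that pogosticking and reformulations both add to the total.

```python
from typing import List, Optional, Tuple

# Hypothetical dwell threshold; Google has not published one.
LONG_CLICK_SECONDS = 30.0


def time_to_long_click(events: List[Tuple[float, str]], session_end: float,
                       threshold: float = LONG_CLICK_SECONDS) -> Optional[float]:
    """Seconds from the first query to the click that became a long click.

    events: chronological (timestamp, kind) pairs, where kind is 'query',
    'click' or 'back' (a return to the results page).
    session_end: timestamp when observation of the session stops.
    Returns None if no click in the session qualifies as a long click.
    """
    first_query = None
    for i, (ts, kind) in enumerate(events):
        if kind == "query" and first_query is None:
            # Start the clock at the first query, so reformulations add to the total.
            first_query = ts
        elif kind == "click" and first_query is not None:
            # Dwell ends at the next return to the results, or at session_end.
            came_back = next((t for t, k in events[i + 1:] if k == "back"), session_end)
            if came_back - ts >= threshold:
                return ts - first_query
    return None


direct = [(0.0, "query"), (4.0, "click")]
pogostick = [(0.0, "query"), (4.0, "click"), (9.0, "back"), (15.0, "click")]
print(time_to_long_click(direct, session_end=200.0))     # 4.0
print(time_to_long_click(pogostick, session_end=200.0))  # 15.0
```

The single pogostick in the second session nearly quadruples the time to long click, even though the user eventually found what they needed.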

Make no mistake. Google wants to reduce every portion of this timeline they presented at Inside Search in 2011.

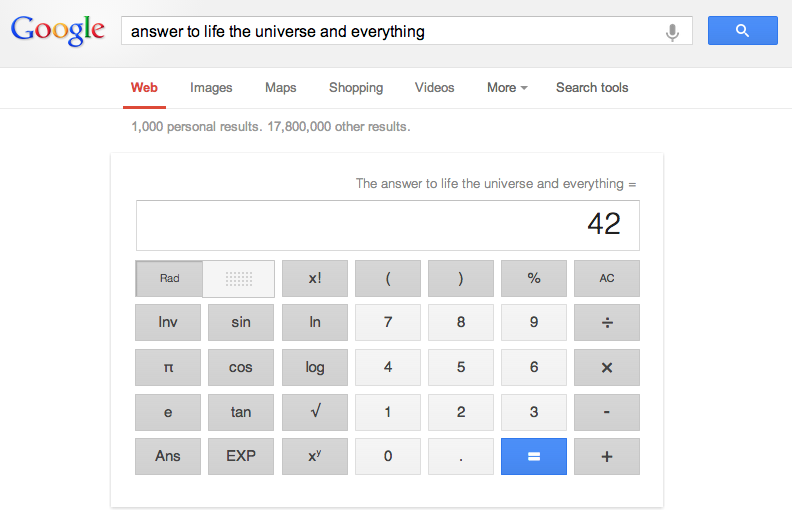

Answers

One of the ways in which we’ve seen Google reduce time to long click is through various ‘answers’ initiatives. Whether it’s a OneBox or a Knowledge Graph result, the idea is that answers can often reduce the time to long click. It’s immediate gratification and in line with Amit Singhal’s Star Trek computer ideal.

In some cases a long click is measured by the absence of both a click and a reformulated query. If I search for weather, don’t click and take no further action, that should register as a long click.
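Sketched in Python, the no-click case might look like this. The 60-second reformulation window and the `answered_on_serp` name are assumptions for illustration: a quick follow-up query is treated as a reformulation, while a much later one is treated as a new task.

```python
from typing import Optional

# Hypothetical: a follow-up query within this many seconds counts as a reformulation.
REFORMULATION_WINDOW = 60.0


def answered_on_serp(clicks: int, seconds_to_next_query: Optional[float],
                     window: float = REFORMULATION_WINDOW) -> bool:
    """True if a results page with no clicks looks like a satisfied search."""
    if clicks > 0:
        return False  # judge this search by its clicks instead
    if seconds_to_next_query is None:
        return True   # no click and no further action: the answer was on the page
    # A quick follow-up query suggests the user didn't find what they needed.
    return seconds_to_next_query > window


print(answered_on_serp(0, None))  # True  (searched for weather, saw it, left)
print(answered_on_serp(0, 8.0))   # False (reformulated almost immediately)
```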

Ads

You’ll also hear Google (and Bing) talk about the fact that ads are answers. Of course ads are what fill the coffers but they also provide another way to get people to a long click. Arguing the opposite (that ads aren’t contributing to satisfaction) is a lot like arguing that marketers and advertisers aren’t valuable.

Not only that, but Google has features in place to help ensure that good ad answers rise to the top. The auction model, quality score and keyword-level bidding all work together to produce relevant ads that lead to long clicks.

The analysis of pixel space on search results is often used to show how Google is marginalizing organic search. Yet, the other way to look at it is that advertisers are getting better at delivering results (with the help of new Google ad extensions). Isn’t it, in some ways, man versus machine? The advertiser being able to deliver a better result than the algorithm?

Without doubt, Google benefits financially from having more space dedicated to paid results, but those results must still produce long clicks for Google to optimize long-term use, which leads to long-term revenue and profits.

I would be very surprised if changes to search results (both paid and organic) weren’t measured by the impact they had in time to long click.

Hubs

All of this is interesting but what does the time to long click metric mean for SEO? More than you might suspect.

When I started in the SEO field I read everything I could get my hands on (which is not altogether different from now). At the time there was advice about becoming a hub.

There was a good deal of hand waving about the definition of a hub but the general idea was that you wanted to be at the center of a topic by providing value and resources. People would link to you and the traffic you received would often go on to the resources you provided. About.com is a good example.

Funny thing is, this isn’t some well-kept secret. Marshall Simmonds spells it out pretty clearly in this 2010 Whiteboard Friday video where he discusses bow tie theory (hubs) and link journalism. (I just watched this again while writing this and, man, this is an awesome video.)

Most people focus on the fact that hubs receive a lot of backlinks. They do because of the value they provide, which is often in the aggregation of and links to other content. In the end, the real value of hubs is that they play an important part in getting people to content and that long click.

Search is a multi-site experience.

This is what search marketers must realize. You will get credit for a long click if you’re part of the long click. If you ensure that the user doesn’t return to search results, even by sending them to another site, then you’re going to be rewarded.

Too often sites won’t link out. I regularly run into this as my clients navigate business development deals with partners. It’s frustrating. They think linking out is a sign of weakness and reduces their ability to consolidate PageRank.

While PageRank math might support not linking out, that strategy ultimately limits success.

Link Out!

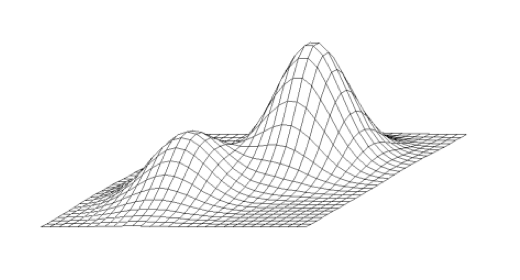

Limiting your outlinks creates a local maximum problem. You’ll optimize only up to a certain ceiling based on constrained PageRank math. Again, not a real secret. Cyrus Shepard talked about this in a 2011 Whiteboard Friday video (though I wouldn’t stress too much about the anchor text myself).

Linking out can help you break through that local maximum by delivering more long clicks. Suddenly, your page is a sort of mini-hub. People search, get to your page and then go on to other relevant information.

Google wants to include results that contribute to reducing the time to long click for that query.

I’m not advocating that you vomit up pages with a ton of links. What I’m recommending is that you link to other valuable sources of information when appropriate so that you fully satisfy that user’s query. In doing so you’ll generate more long clicks and earn more links over time, both of which can have profound and positive impact on your rankings.

Stop thinking about optimizing your page and think about optimizing the search experience instead.

I ran into someone at SMX West who inherited a vast number of low-quality sites. These sites used the old technique of being relevant enough to get someone to the page but not delivering enough value to answer their query. The desired result was a click on an ad. Simple arbitrage when you get down to it.

In a test, placing prominent links to relevant content on a subset of these pages had a material and positive impact on their rankings. It’s certainly not conclusive, but it showed the potential impact of being part of a multi-site long click search result.

As an aside, it’s not that those ad clicks were bad. Some of those probably resulted in long clicks. Just not enough of them. The majority either pogosticked to another result or wound up back at the search results after an ad click. And we already know this as search marketers by looking at the performance of search versus display campaigns.

Impact On Domain Diversity

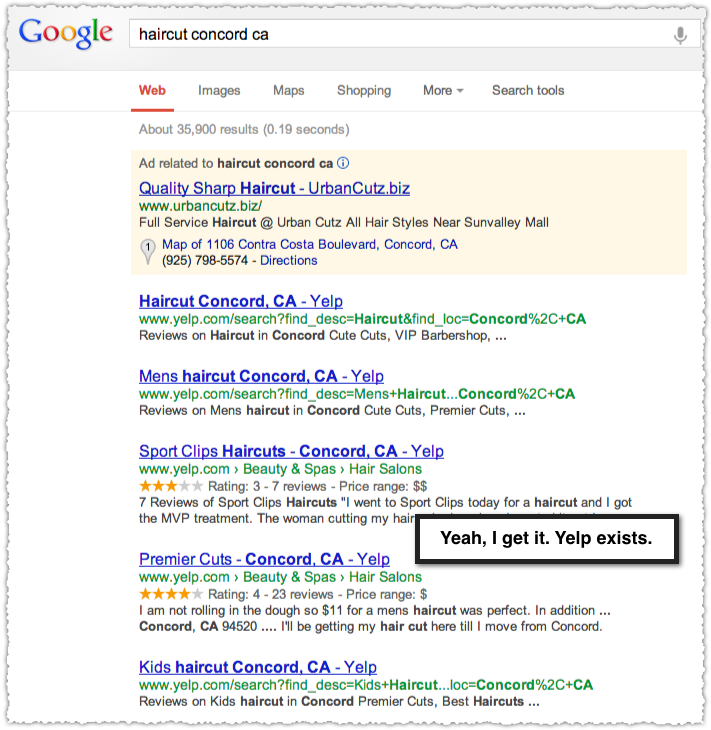

If you believe time to long click is the way in which Google is measuring search success then you start to see some of the changes in a new light. I’ve been disappointed by the lack of domain diversity on many search results.

Sadly, this type of result hasn’t been that rare within the last year. Pete Myers has been doing amazing work on this topic.

For a while I just thought this was Google being stupid. But then it dawned on me. The lack of domain diversity may be reducing the time to long click. It might actually be improving the overall satisfaction metrics Google uses to optimize search!

In some ways this makes a bit of sense, if only from a straight-up Paradox of Choice perspective. Selecting from 5 different domains rather than 10 might reduce cognitive strain. Too many choices overwhelm people, reducing both action and satisfaction. So perhaps Google’s just reflecting that in their results with both domain diversity (or lack thereof) and more instances of 7-result pages.

Downsides to Time To Long Click?

Are these long clicks truly a sign of satisfaction? The woman who had been cutting my hair for nearly 10 years retired, so I actually did need to find someone new. I hated the search result but did wind up clicking through and using Yelp to locate someone. So from Google’s perspective I was satisfied but in reality … not so much.

I wonder how long a time frame Google uses in assessing the value of long clicks. I abandoned my haircut search a number of times over the course of a month. In many of those instances I’m sure it looked like I was satisfied with the result. It looked like a long click. Yet, if you looked over a longer period of my search history it would become clear I wasn’t. I think this is a really difficult problem to solve. Is it satisfaction or abandonment?
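One hedged way to think about that longer time frame: a click only counts as satisfying if the user doesn't come back to the same task within some window. The sketch below assumes queries have already been grouped into tasks (the `task` label is hypothetical, as is the 30-day window) and uses the haircut search as toy data.

```python
from datetime import datetime, timedelta
from typing import List, Tuple

# (timestamp, task, looked_like_long_click). 'task' is a hypothetical label for
# a group of related queries, assumed to be assigned upstream.
Search = Tuple[datetime, str, bool]


def label_searches(history: List[Search], window: timedelta = timedelta(days=30)) -> List[str]:
    """Label each search 'short', 'satisfied' or 'abandoned'.

    A long click only counts as 'satisfied' if the same task doesn't come back
    within `window`; otherwise it looks more like abandonment.
    Assumes `history` is sorted by timestamp.
    """
    labels = []
    for i, (ts, task, long_click) in enumerate(history):
        if not long_click:
            labels.append("short")
            continue
        came_back = any(
            later_task == task and later_ts <= ts + window
            for later_ts, later_task, _ in history[i + 1:]
        )
        labels.append("abandoned" if came_back else "satisfied")
    return labels


history = [
    (datetime(2013, 3, 1), "haircut", True),
    (datetime(2013, 3, 10), "haircut", True),
    (datetime(2013, 3, 25), "haircut", True),
    (datetime(2013, 3, 26), "weather", True),
]
print(label_searches(history))
# ['abandoned', 'abandoned', 'satisfied', 'satisfied']
```

Each haircut search looks like a long click in isolation; only the wider window reveals that the first two were really abandonment.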

The other danger here is that Google is training people to use another service. Now, I don’t particularly like Yelp but what this result tells me is that if I wanted to find something like this again I should just skip Google and go right to Yelp instead.

The same could be said about Google reflecting our own bias toward brands. While users may respond better to brands and the time to click might be reduced, the long term implications could be that Google is training users to visit those brands directly. Why start my product search on Google when all they’re doing is giving me links to Amazon 90% of the time?

Of course, Google could argue that it will remain the hub for information requests because it continues to deliver value. (See what I did there?)

TL;DR

Google is using time to long click to measure the effectiveness of search results. Understanding this puts many search changes and initiatives into perspective and gives sites renewed reason to link out and think of search as a multi-site experience.

Comments About Time To Long Click

Derek Edmond // April 17th 2013

Fantastic perspective and argument development. I was actually having this discussion with a colleague today in proposing how we could improve (reduce) exit rates on certain page types through changes to messaging and (user) action recommendations. Good to read that others are thinking along the same lines.

Max Minzer // April 17th 2013

Whoa, AJ! I never had a clear organized idea about this, just random thoughts. Your highlight and paragraph about multi-site experience literally gave me chills of excitement when I got to it!

Would be interesting to see how Google incorporates other signals with this one when it comes to tough cases of bounce rate & long click satisfaction.

AJ Kohn // April 17th 2013

Thanks Max. I think it’s easy to get tunnel vision about your own site and forget about the broader search experience. It’s a bit presumptuous to think that your resource is the only resource, so providing access to other content is a great way to fulfill query intent and match the reality of multi-site search experience.

Aidan McCarthy // April 17th 2013

Brilliant insight into the ‘mind’ of Google search, I’ve often pondered how metrics like bounce rate might be used somehow but always saw the flaws with that too easily. Your way of looking at it makes much more sense and arms me better for those discussions on the value of linking out!

AJ Kohn // April 17th 2013

Glad to hear this was helpful Aidan. Don’t be afraid to link out, it’ll benefit you in the long-run.

David Portney // April 17th 2013

I don’t know, A.J. – I have a tremendous amount of respect for you and I also think this is just speculation.

We don’t know what Google does or does not really do when it comes to user behavior, and let’s face it, that’s too complex to over-simplify with a pogo-stick analogy.

I open many search results with Ctrl-click in new tabs and look at a lot of info. That’s just one example; how searchers interact with search results is too varied to say that if someone heads back to G or B after a search, that’s a strike against that result. Sometimes I click on a search result and the phone rings and I’m on the phone for 10 minutes. Or someone walks in with a question. Or I go to the bathroom. Just examples of the fact that how people interact with SERPs is not cut-and-dried.

Certainly SE’s realize that human behavior, in all areas including interacting with search results, is chaotic and varied. Since we don’t actually know what SE’s do or don’t do in their algo’s, I’m not sure it makes a lot of sense for highly respected search marketing experts to write about bounce and long click as if that’s a fact. I’d expect this on the Warrior Forum, but not here.

David

AJ Kohn // April 17th 2013

David,

Thanks for your comments. I obviously can’t know 100% that Google uses the time to long click metric but there’s a substantial amount of evidence I’m piecing together that is both public and private in nature.

What I can say is that pogosticking behavior is not a mystery as I discuss and link to in my pogosticking post. Yahoo filed a patent on the topic as far back as May of 2007. Google representatives will often refer to long clicks in conversation. Not only that but we received confirmation that Google tracks this type of behavior through bounce back Authorship results.

Now, I often use site: queries and would love to use regex as a way to search. But the truth is I am not even close to the norm when it comes to search engine behavior. The worst thing I could do is use myself as the target market. I even speak to that in this post where I found the haircut result to be poor. Instead, Google is looking at an enormous corpus of data where the types of experiences you bring up are swallowed up and factored into the equation.

I’m confident that Google cares (deeply) about how quickly they get people to the right answer and believe there’s ample evidence to support that. You are free to disagree but I stand firm by my analysis.

Martin Pierags // April 18th 2013

Great analysis and a light at the end of the tunnel. I think Google is indeed very clever in providing good results, but I don’t yet understand why so many results from one author are shown in SERPs. I suppose it’s going to be fixed some day for reasons of diversity in Search Experience.

AJ Kohn // April 18th 2013

Thanks Martin. The problem here is that I’m unsure whether diversity is broken from Google’s perspective.

For some time many SERPs have had multiple results from one domain. I’d chalked it up to a potential bug but it’s gone on far too long for it to be an oversight. Instead, I think the lack of diversity may be producing better results as measured by ‘time to long click’.

Whether that is good in the long term is debatable.

Andy Kinsey - Redstar // April 18th 2013

Brilliant article and insight into long click usage by Google and how this could shape the future of search. It may be worth asking the same kind of question, “what is a long click” (or its equivalent), for Google Now. Is it if you interact with a card rather than slide it away? That doesn’t work for all cards. Is it if you slide it away after a certain time? Again, not always. What about an aggregate time across card types along with interaction and time to slide away? That may just work. What do you think?

AJ Kohn // April 18th 2013

Andy,

Thanks for your thought provoking comment! I’m intensely interested in Google Now (and love it personally.) I think interaction is the best satisfaction metric Google Now has, but to your point there are some cards that don’t require interaction so the time to swipe away seems logical.

I think you’d need to establish benchmarks for the average time to swipe for each card type. You might even have to look at that based on whether it was presented with other cards, how many, in what order and so on and so forth. It’s such a personalized product though (particularly since there is active configuration to some degree) which makes it different than traditional web search.

I’ll have to give this some more thought.

Jim Rudnick // April 18th 2013

Like @Max here, I too never thought much about this – but did make an assumption that if G wanted to “track” the click times – it “could” use that metric…but then it dropped off my radar and haven’t thought about that now for at least a year….

Good on you to post this AJ as it reminds me of the lengths that G can go to, to try to validate site content….and yup, I’ll be watching for more of same too, eh!

🙂

AJ Kohn // April 18th 2013

Thanks for the comment Jim. Google is keenly interested in getting people to an answer as quickly as possible. Once you understand that mindset it changes how you look at search changes. OneBox results reduce the time to long click. Ditto with Knowledge Graph. What the SEO industry might think of as intrusive or intentionally removing organic from the mix is really just an effort to meet time to long click KPIs.

Ehren Reilly // April 18th 2013

It’s not feasible for everyone, but if you are a site where internal search is a big part of usage (e.g. UrbanSpoon, Trulia, Amazon, eBay), you could actually measure this information based on how users behave on your internal search results. Granted, the use case is different from Google search, and it’s a bit of a hassle to build, but if you have the resources it’s a really great way to ferret out your most sucky/disappointing pages, so you can improve them.

AJ Kohn // April 18th 2013

Yes Ehren! That’s a really great idea and proxy.

Nathan Safran // April 18th 2013

Great stuff AJ, really insightful. It’s got me thinking about ways I might tinker with this concept from a research perspective at Conductor. I know of course only Google has access to time to long click info, but maybe there are elements of this kind of searcher behavior that can be tested in a lab environment etc. Let me know if you think of anything 🙂

AJ Kohn // April 19th 2013

Thanks Nathan.

Michah Fisher-Kirshner and I talked a bit about this over on Google+. So one thing you could do is look at pogosticking rates on different SERPs using a lab environment. Similarly, you could include a number of random results into a SERP and see if the time to long click increased and/or the pogosticking rate increased. Or you could order the results backwards and measure the differences etc. etc.

I’d need to think through how to make sure that the methodology produced something of substance but … you could likely do something to tease out some interesting insights given enough participants and a robust lab (i.e., interactive) environment.

Mary Kay Lofurno // April 19th 2013

I liked your point about linking out and how just linking in creates a local maxima problem. I have never stopped issuing the advice of linking out as it is just part of a natural progression of business relationships if it makes sense for your company/web site/task.

I never understood why some in the community went so far the other way with linking in over the last 10 years. Thanks, Mary Kay

Doc Sheldon // April 19th 2013

AJ, you never cease to amaze! This article has SO much to offer, and helps me put into perspective some of the tie-ins I’ve been looking at surrounding AgentRank. Keep this great stuff coming!

AJ Kohn // April 19th 2013

Thank you so much Doc. That means a lot coming from you – someone who’s consistently demonstrated critical thinking.

Ralph aka fantomaster // April 21st 2013

Assuming you are right in your assessment of Google’s long clicks bias – and you certainly argue a brilliant case for it -, from a guerilla SEO perspective one logical consequence would be to generate lots of traffic that simply hits your pages via the SERPs, never returning to Google’s page again. (Yes, it could be entirely artificial, if need be, as long as it’s not blatantly traceable as such via obvious footprints etc.)

By your reasoning, this should help with good rankings (if only by fortifying them significantly, assuming you manage to generate enough critical click-through mass) or even boost them, constituting “perceived satisfaction”. Not sure this is really the case at this point in time. (Reminds one of the DirectHit search engine of yore, doesn’t it?) But it should be fairly easy to test and validate.

AJ Kohn // April 21st 2013

Ralph,

Thank you so much for the comment. I’ve talked with a few people on and off about how this type of behavior might be emulated. I think it would wind up being more difficult than it seems on the surface.

First, we don’t have a good idea of what the benchmarks are for this metric. Now, you might be able to test your way into this but if you were to push too much long click traffic to a site you might blow past the normal boundaries and land yourself in a review queue. Larger sites might be able to get away with it since the volume of total traffic would create a slow counter-weight to those efforts.

Second, and more importantly, is that Google would be looking at a query path that is longer than just one query. If I were sniffing out this type of network I’d be looking for visits where prior queries weren’t in line with the current one and where no reformulations took place. In short, if long click traffic for a cluster of terms blossomed from a set of 10,000 users who had previously never had an interest in that query cluster and performed no further reformulations afterwards … well, I’d be suspicious. Ditto if a higher percentage than normal had web history turned off.

I’m not saying it couldn’t work, but I think it would take a sophisticated and long-term commitment to creating a query log that allowed these manufactured long clicks to go undetected. That said, you’re smarter about that kind of stuff than I am.

Gregory Smith // April 21st 2013

Aj,

Thanks a lot for this post, I think I’m going to follow you in this research and do a bit of research of my own. I might even do a followup post.

Thanks a ton for the enlightenment!

michael balistreri // April 21st 2013

Using one’s own in-site search testing latency might be fun. Using time as expressed above within an overall satisfaction indicator for SERP modification is useless. There are so many factors beyond Google’s ability to even properly stage/understand this metric (one session vs multiple via cookies – user A leaving and being replaced by user B – technical), I doubt they place much emphasis on it.

AJ Kohn // April 22nd 2013

Michael,

You’re entitled to your own opinion. But Google’s ability to understand the clickstream is rather solid given the ubiquity of their cookie. After I published this I went looking for additional citations for the short and long click terminology (outside of my own personal interactions) … and found it.

That’s from a January 2013 interview with Amit Singhal.

Grant Simmons // April 21st 2013

This is great stuff.

Search engines need to have a feedback mechanism to assess relevance of search query intent to site content “Intent to Content” – the long click / short click makes logical sense, I would think that data would be augmented with social signals and site engagement signals, aligned with aggregated query and site data to measure consistent interaction rather than linear measurement.

Been writing some articles along those lines on Search Engine Watch… planning to cover the onsite experience and how that might logically send additional signals of relevance to search engines.

Duane Forrester has talked about this effect, and Yahoo has a patent filed (with thanks to Bill Slawski for some research) that offers similar language around the understanding of metrics of user engagement to somewhat quantify quality of ranked / clicked results.

http://www.seobythesea.com/2011/09/how-a-search-engine-may-measure-the-quality-of-its-search-results/

All boils down to a simple fact… Ranking isn’t a *simple* equation 🙂

Cheers

AJ Kohn // April 22nd 2013

Thanks Grant.

I think it boils down to the idea that search engines want to find ways to measure search success. Google, in particular, is concerned about reducing the time to that successful result. That’s partly due to the fact that any friction could create abandonment. So the two ideas are aligned.

Ranking isn’t a simple equation, particularly if you begin to think of search as a multi-site experience.

Ralph aka fantomaster // April 22nd 2013

AJ,

full agreement on the more complex aspects of the matter re possibly boosting rankings via artificial long click traffic. However, unless something very fundamental has changed in the ‘Plex, I’d be very surprised if they had really implemented that kind of a sophisticated bulwark of protective layers by default.

Exploring, as I’m occasionally wont to (heh), the “darker” sides of search optimization tech, it never ceases to surprise me how simplistic if not downright primitive a lot of their overall setup is – and how easy it actually is to achieve plenty of success with purportedly “long demoted”, positively ancient black hat techniques to this very day, provided you adjust them a little bit to a more contemporary scenario.

In any case, I think you’re spot on in pursuing this topic and if you should come up with any follow-up postings at some later point, I for one would definitely appreciate it!

AJ Kohn // April 22nd 2013

Ralph,

You’d certainly know more about this than I. And I think we as an industry are often more forward thinking than what is in practice at Google. For all of Google’s savvy (which is considerable), they can only apply their resources to so many issues.

So I’ll keep you updated on any other things I hear and/or tease out if you do the same based on your tests. Deal?

Ralph aka fantomaster // April 24th 2013

Sounds like a plan. 🙂

David Tucker // April 25th 2013

Despite Google’s attempts to reduce the query time, I’ve been dissatisfied with the search results I’ve been getting from this engine lately. First, I’ve noticed that the first page may contain 3 or even more results from the same domain. Sometimes it’s easier to go to the site I need right from the start, skipping the Googling phase altogether.

Mark McKnight // April 25th 2013

Thank you very much for sharing this great article. Good to read that others are thinking along the same lines. You got a brilliant insight into the ‘mind’ of Google search.

Emory Rowland // April 26th 2013

Very much enjoyed reading and learning from these ideas, AJ. The only thing that keeps nagging me is the question of why Google would invest so much confidence in a metric that could conceivably be gamed by click bots, perhaps the sort that attack AdSense sites.

Emil Petkov // April 29th 2013

Great article and great point of view 🙂 I never used bounce rate as a serious factor and you prove my thinking. Really, the time is much more of a factor.

Just one question – I suppose it’s not everyone’s case, but my experience shows me that about 80% of the short clicks are with 00:00 sec duration. So it seems the visitor leaves before taking a look. In such cases, is linking out a solution? After all, the visitor needs to see the link with relevant information in order to click on it.

Emo

AJ Kohn // April 30th 2013

Emil,

First I’d suggest implementing tracking that allowed you to measure the time spent on that page. If the real time is less than 10 seconds then you certainly have a problem. If they’re there for 45 seconds on average you might not have a problem at all. Of course, I’d still recommend ensuring that the page(s) in question answers all those ‘magic’ questions. So link to other content, other resources that would satisfy the user.

Victor Tribunsky // May 18th 2013

Hm... It is very interesting. I never thought about outbound links in such a way. I was trying to keep them to a minimum. I need to rethink. Thank you.

Jessica Bridges // June 10th 2013

Thank you for writing such a well thought-out post and making it easy to understand for SEO and non-SEO minded people alike. I’ve been trying to explain to my marketing team that we need to diversify links on our site and that it isn’t necessarily a BAD thing to link to other sites. They don’t want to do anything to direct visitors away from our site. I wasn’t familiar with Google tracking the “long-click,” but it makes so much sense. Maybe with this article I can finally convince my team that this is a good thing. I love the idea of focusing on the “search experience” and trying to fulfill that for our customers.

Also, I’m looking for a new related posts plugin for our WordPress site and wanted to suggest that we use a plugin that shows related posts from other blogs. I knew they wouldn’t go for that, but this topic gives some good reasons why linking to other sites can still be relevant to boost SEO on our site. Thanks!

Dan Rapoport // July 08th 2013

Great post and more ammunition in my conversations with clients that user experience is a huge part of SEO. I’ve literally had clients tell me to “Add [keyword x] to our site’s keywords”–to which I reply, “That’s not how it works.”

Engaging, useful content wins again.

Dustin Heap // August 06th 2013

“This is what search marketers must realize. You will get credit for a long click if you’re part of the long click. If you ensure that the user doesn’t return to search results, even by sending them to another site, then you’re going to be rewarded.”

Awesome stuff. I think this is my 3rd or 4th time reading this article. Nonetheless, fascinating stuff in regards to the theory behind why linking out isn’t a bad thing. Still not a perfect science for G but puts in the right mindset of providing value at all times to better your chances of being served up as relevant content to searchers.

Bonnie Hughes // January 18th 2014

Thanks so much for this useful information, came across this page researching SEO, I will bookmark this site and refer back, I’m sure there is tons of informative stuff to read, very helpful insights.

Dunstan Barrett // March 03rd 2014

I guess “content is king” is FINALLY becoming reality. I’m not sure “even by sending them to another site, then you’re going to be rewarded” is always necessarily true. Ultimately, if the majority of users that land on site A then move to site B, then surely site A is providing nothing that site B isn’t. Look at the extreme example of “made for AdSense” sites.

AJ Kohn // March 06th 2014

Dunstan,

Well, it can’t be a complete pass through, site A in your example has to provide some additional value. However, sometimes that value is in aggregating all of the various resources available on a topic. About.com followed this strategy and still remains a top 50 web property.
