Understanding query syntax may be the most important part of a successful search strategy. What words do people use when searching? What type of intent do those words describe? This is much more than simple keyword research.

I think about query syntax a lot. Like, a lot a lot. Some might say I’m obsessed. But it’s totally healthy. Really, it is.

Query Syntax

Syntax is defined as follows:

The study of the patterns or formation of sentences and phrases from words

So query syntax is essentially looking at the patterns of words that make up queries.

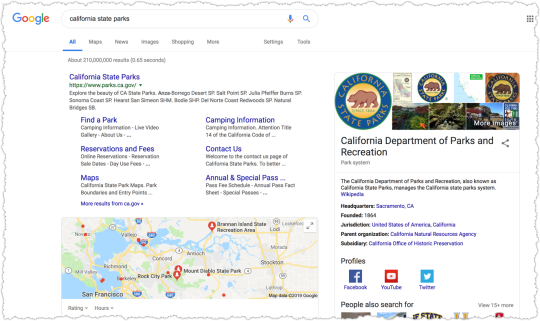

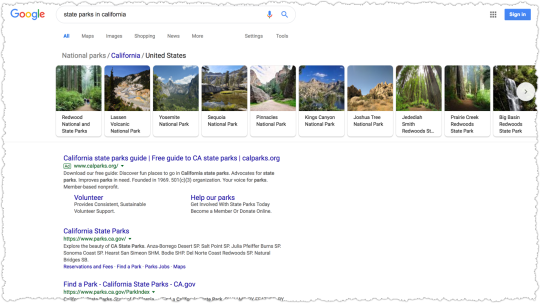

One of my favorite examples of query syntax is the difference between the queries ‘california state parks’ and ‘state parks in california’. These two queries seem relatively similar right?

But there’s a subtle difference between the two and the results Google provides for each make this crystal clear.

The result for ‘california state parks’ has fractured intent (what Google refers to as multi-intent) so Google provides informational results about that entity as well as local results.

The result for ‘state parks in california’ triggers an informational list-based result. If you think about it for a moment or two it makes sense right?

The order of those words and the use of a preposition change the intent of that query.

Query Intent

It’s our job as search marketers to determine intent based on an analysis of query syntax. The old grouping of intent as informational, navigational or transactional is still kinda sorta valid but is overly simplistic given Google’s advances in this area.

Knowing that a term is informational only gets you so far. If you miss that the content desired by that query demands a list you could be creating long-form content that won’t satisfy intent and, therefore, is unlikely to rank well.

Query syntax describes intent that drives content composition and format.

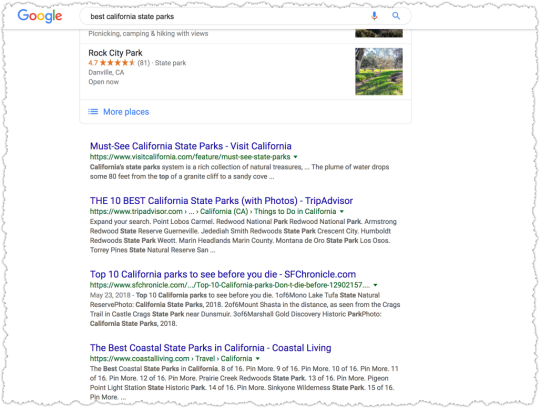

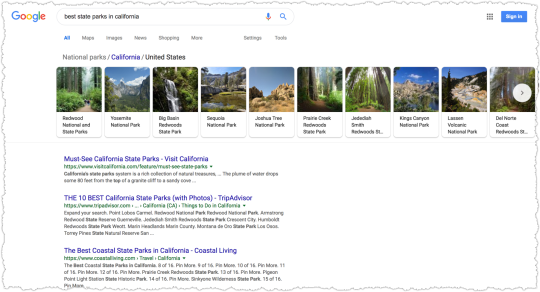

Now think about what happens if you use the modifier ‘best’ in a query. That query likely demands a list as well, and not just any list but an ordered or ranked list of results.

For kicks, why don’t we see how that changes both of the queries above?

Both queries retain a semblance of their original footprint with ‘best california state parks’ triggering a local result and ‘best state parks in california’ triggering a list carousel.

However, in both instances the main results for each are all ordered or ranked list content. So I’d say that these two terms are far more similar in intent when using the ‘best’ modifier. I find this hierarchy of intent based on words to be fascinating.

The intent models Google uses are likely more in line with classic information retrieval theory. I don’t subscribe to the exact details of the model(s) described but I think it shows how to think about intent and makes clear that intent can be nuanced and complex.

Query Classes

Understanding what queries trigger what type of content isn’t just an academic endeavor. I don’t seek to understand query syntax on a one off basis. I’m looking to understand the query syntax and intent of an entire query class.

Query classes are repeatable patterns of root terms and modifiers. In this example the query classes would be ‘[state] state parks’ and ‘state parks in [state]’. These are very small query classes since you’ll have a defined set of 50 to track.
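Here’s a minimal sketch of how a query class could be expanded into the full list of queries to track. The template names and the shortened state list are my own placeholders for illustration, not part of any particular rank tracking tool.

```python
# Hypothetical templates for the two query classes in this example.
QUERY_CLASSES = {
    "state_first": "{state} state parks",
    "state_last": "state parks in {state}",
}

# Shortened for brevity; a real list would hold all 50 states.
STATES = ["california", "utah", "oregon"]


def expand_query_class(template, values):
    """Return one query per value by filling the template's {state} slot."""
    return [template.format(state=value) for value in values]


# One list of tracked queries per query class, never mixed together.
tracked = {
    name: expand_query_class(template, STATES)
    for name, template in QUERY_CLASSES.items()
}

for name, queries in tracked.items():
    print(name, queries)
```

Keeping one list per template means each query class can be tracked and reported on separately.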

What about the ‘best’ versions? What syntax would I use and track? It’s not an easy decision. Both SERPs have infrastructure issues (Google units such as the map pack, list carousel or knowledge panel) that could depress clickthrough rate.

In this case I’d likely go with the syntax used most often by users. Even this isn’t easy to ferret out since Google’s Keyword Planner aggregates these terms while other third-party tools such as Ahrefs show a slight advantage to one over the other.

I’d go with the syntax that wins with the third-party tools but then verify using the impression and click data once launched.

Each of these query classes demands a certain type of content based on its intent. Intent may be fractured and pages that aggregate intent and satisfy both active and passive intent have a far better chance of success.

Query Indices

I wrote about query indices or rank indices back in 2013 and still rely on them heavily today. In the last couple of years many new clients have a version of these in their dashboard reports.

Unfortunately, the devil is in the details. Too often I find that folks will create an index that contains a variety of query syntax. You might find ‘utah bike trails’, ‘bike trails utah’ and ‘bike trails ut’ all in the same index. Not only that but the same variants aren’t present for each state.

There are two reasons why mixing different query syntax in this way is a bad idea. The first is that, as we’ve seen, different types of query syntax might describe different intent. Trust me, you’ll want to understand how your content is performing against each type of intent. It can be … illuminating.

The second reason is that the average rank in that index starts to lose definition if you don’t have equal coverage for each variant. If one state in the example performs well but only includes one variant while another state does poorly but has three variants then you’re not measuring true performance in that query class.
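A quick worked example shows the skew. The ranks below are made up purely for illustration: one state ranks well with a single variant in the index while another ranks poorly with three variants.

```python
from statistics import mean

# Made-up ranks, for illustration only: (state, query, rank)
mixed_index = [
    ("utah", "utah bike trails", 2),
    ("ohio", "ohio bike trails", 20),
    ("ohio", "bike trails ohio", 20),
    ("ohio", "bike trails oh", 20),
]


def is_dominant_syntax(state, query):
    """True when the query follows the '[state] bike trails' pattern."""
    return query == f"{state} bike trails"


# Average over every variant: the poorly ranked state counts three times.
mixed_average = mean(rank for _, _, rank in mixed_index)

# Average over one syntax only: each state counts exactly once.
focused_average = mean(
    rank
    for state, query, rank in mixed_index
    if is_dominant_syntax(state, query)
)

print(mixed_average)    # 15.5
print(focused_average)  # 11
```

Same two states, same performance, yet the mixed index reports an average rank of 15.5 while the focused index reports 11.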

Query indices need to be laser focused and use the dominant query syntax you’re targeting for that query class. Otherwise you’re not measuring performance correctly and could be making decisions based on bad data.

Featured Snippets

Query syntax is also crucial to securing the almighty featured snippet – that gorgeous box that sits on top of the normal ten blue links.

There has been plenty of research in this area about what words trigger what type of featured snippet content. But it goes beyond the idea that certain words trigger certain featured snippet presentations.

To secure featured snippets you’re looking to mirror the dominant query syntax that Google is seeking for that query. Make it easy for Google to elevate your content by matching that pattern exactly.

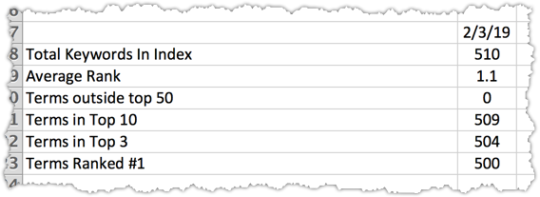

Good things happen when you do. As an example, here’s one of the rank indices I track for a client.

At present this client owns 98% of the top spots for this query class. I’d show you that they’re featured snippets but … that probably wouldn’t be a good idea since it’s a pretty competitive vertical. But the trick here was in understanding exactly what syntax Google (and users) were seeking and matching it. Word. For. Word.

The history of this particular query class is also a good example of why search marketers are so valuable. I identified this query class and then pitched the client on creating a page type to match those queries.

As a result, this query class (and the associated page type) went from contributing nothing to 25% of total search traffic to the site. Even better, it’s some of the best performing traffic from a conversion perspective.

Title Tags

The same mirroring tactic used for featured snippets is also crazy valuable when it comes to Title tags. In general, users seek out cognitive ease, which means that when they type in a query they want to see those words when they scan the results.

I can’t tell you how many times I’ve simply changed the Title tags for a page type to target the dominant query syntax and seen traffic jump as a result. The increase is generally a combination, over time, of both rank and clickthrough rate improvements.
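If you want to audit a page type at scale, a rough check might look like the sketch below. It only tests whether the query’s words appear in the Title contiguously and in the same order, which is my own simplification of “mirroring”. The example Title is hypothetical.

```python
import re


def normalize(text):
    """Lowercase the text and split it into alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())


def mirrors_query(title, query):
    """True when the query's words appear in the title contiguously and in order."""
    title_words, query_words = normalize(title), normalize(query)
    if not query_words:
        return False
    span = len(query_words)
    return any(
        title_words[i:i + span] == query_words
        for i in range(len(title_words) - span + 1)
    )


title = "Best State Parks in California | Example Site"  # hypothetical Title
print(mirrors_query(title, "best state parks in california"))  # True
print(mirrors_query(title, "best california state parks"))     # False
```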

We know that this is something that Google understands because they bold the query words in the meta description on search results. If you’re an old dog like me you also remember that they used to bold the query words in the Title as well.

Why doesn’t Google bold the Title query words anymore? It created too much click bias in search results. Think about that for a second!

What this means is that having the right words bolded in the Title created a bias too great for Google’s algorithms. It inflated the perceived relevance. I’ll take some of that thank you very much.

There’s another fun logical argument you can make as a result of this knowledge but that’s a post for a different day.

At the end of the day, the user only allocates a certain amount of attention to those search results. You win when you reduce cognitive strain and make it easier for them to zero in on your content.

Content Overlap Scores

I’ve covered how the query syntax can describe specific intent that demands a certain type of content. If you want more like that check out this super useful presentation by Stephanie Briggs.

Now, hopefully you noticed that the results for two of the queries above generated a very similar SERP.

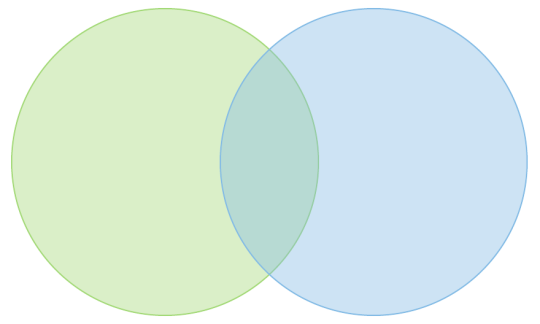

The results for ‘best california state parks’ and ‘best state parks in california’ both contain 7 of the same results. The position of those 7 shifts a bit between those queries but what we’re saying is there is a 70% overlap in content between these two results.

The amount of content overlap between two queries shows how similar they are and whether a secondary piece of content is required.
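The math is simple enough to sketch. This version assumes the score is just the share of ranking URLs the two SERPs have in common, and that you already have the top results for each query as lists of URLs. The URLs below are placeholders.

```python
def content_overlap_score(serp_a, serp_b):
    """Percentage of ranking URLs that two SERPs have in common.

    Assumes both lists are the same length (e.g. the top ten results).
    """
    if not serp_a or len(serp_a) != len(serp_b):
        raise ValueError("expected two non-empty result lists of equal length")
    shared = set(serp_a) & set(serp_b)
    return 100 * len(shared) / len(serp_a)


# Placeholder URLs: ten results for the first query.
serp_a = [f"https://example.com/page-{i}" for i in range(10)]

# Seven of the same URLs in a different order, plus three new ones.
serp_b = [serp_a[i] for i in (3, 0, 1, 2, 6, 5, 4)] + [
    f"https://example.org/other-{i}" for i in range(3)
]

print(content_overlap_score(serp_a, serp_b))  # 70.0
```

Position is ignored here on purpose. The score only asks whether the same URLs show up, not where.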

I’m sure those of you with PTPD (Post Traumatic Panda Disorder) are cringing at the idea of creating content that seems too similar. Visions of eHow’s decline parade around your head like pink elephants.

But the idea here is that the difference in syntax could be describing different intent that demands different content.

Now, I would never recommend a new piece of content with a content overlap score of 70%. That score is a non-starter. In general, any score equal to 50% or above tells me the query intent is likely too similar to support a secondary piece of content.

A score of 0% is a green light to create new content. The next task is to then determine the type of content demanded by the secondary syntax. (Hint: a lot of the time it takes the form of a question.)

A score between 10% and 40% is the grey area. I usually find that new content can be useful between 10% and 20%, though you have to be careful with queries that have fractured intent, because sometimes Google is only allocating three results for, say, informational content. If two of those three are the same then that’s actually a 67% content overlap score.

You have to be even more careful with a content overlap score between 20% and 30%. Not only are you looking at potential fractured intent but also whether the overlap is at the top or interspersed throughout the SERP. The former often points to a term that you might be able to secure by augmenting the primary piece of content. The latter may indicate a new piece of content is necessary.
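Those rules of thumb can be roughed out in code. Treat this as a sketch: the labels are mine, and lumping every score between 0% and 50% into one “grey area” bucket is an assumption since the bands above don’t cover every value. The second helper reports where the shared URLs sit so you can tell top-heavy overlap from interspersed overlap.

```python
def triage(score):
    """Map a content overlap score (0-100) to rough guidance."""
    if score >= 50:
        return "too similar: no secondary content"
    if score == 0:
        return "green light: create new content"
    # Assumption: everything in between still needs eyes on the SERP.
    return "grey area: eyeball the SERP"


def shared_positions(primary_serp, secondary_serp):
    """1-based positions in the primary SERP of URLs that also rank for the secondary query."""
    secondary = set(secondary_serp)
    return [
        position
        for position, url in enumerate(primary_serp, start=1)
        if url in secondary
    ]


# Placeholder URLs: the two SERPs share only the top two results.
primary = [f"https://example.com/{letter}" for letter in "abcdefghij"]
secondary = primary[:2] + [f"https://example.org/{n}" for n in range(8)]

positions = shared_positions(primary, secondary)
score = 100 * len(positions) / len(primary)

print(score, triage(score))  # 20.0 grey area: eyeball the SERP
print(positions)             # [1, 2]
```

Shared positions of 1 and 2 are the “overlap at the top” case, which hints at augmenting the primary piece of content rather than writing a new one.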

It would be nice to have a tool that provided content overlap scores for two terms. I wouldn’t rely on it exclusively. I still think eyeballing the SERP is valuable. But it would reduce the number of times I needed to make that human decision.

Query Evolution

When you look at and think about query syntax as much as I do you get a sense for when Google gets it wrong. That’s what happened in August of 2018 when an algorithm change shifted results in odd ways.

It felt like Google misunderstood the query syntax or, at least, didn’t understand the intent the query was describing. My guess is that neural embeddings are being used to better understand the intent behind query syntax and in this instance the new logic didn’t work.

See, Google’s trying to figure this out too. They just have a lot more horsepower to test and iterate.

The thing is, you won’t even notice these changes unless you’re watching these query classes closely. So there’s tremendous value in embracing and monitoring query syntax. You gain insight into why rank might be changing for a query class.

Changes in the rank of a query class could mean a shift in Google’s view of intent for those queries. In other words, Google’s assigning a different meaning to that query syntax and sucking in content that is relevant to this new meaning. I’ve seen this happen to a number of different query classes.

Remember this when you hear a Googler talk about an algorithm change improving relevancy.

Other times it could be that the mix of content types changes. A term may suddenly have a different mix of content types, which may mean that Google has determined that the query has a different distribution of fractured intent. Think about how Google might decide that more commerce related results should be served between Black Friday and Christmas.

Once again, it would be interesting to have a tool that alerted you to when the distribution of content types changed.
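The core of such an alert could be fairly small. The sketch below assumes you’ve already labeled each result on a SERP with a content type (the hard part, and not shown here) and compares two snapshots. The labels, the snapshots and the 0.2 threshold are all hypothetical.

```python
from collections import Counter


def type_shares(content_types):
    """Share of results per content type for one SERP snapshot."""
    if not content_types:
        raise ValueError("snapshot has no results")
    counts = Counter(content_types)
    total = len(content_types)
    return {content_type: n / total for content_type, n in counts.items()}


def distribution_shift(before, after):
    """Total variation distance between two mixes (0 = identical, 1 = no overlap)."""
    b, a = type_shares(before), type_shares(after)
    return sum(abs(b.get(t, 0) - a.get(t, 0)) for t in set(b) | set(a)) / 2


# Hypothetical snapshots of the same query, before and during the holidays.
october = ["informational"] * 7 + ["commerce"] * 3
december = ["informational"] * 4 + ["commerce"] * 6

ALERT_THRESHOLD = 0.2  # arbitrary cutoff, tune to taste

shift = distribution_shift(october, december)
if shift > ALERT_THRESHOLD:
    print(f"content type mix changed: shift of {shift:.2f}")
```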

Finally, sometimes the way users search changes over time. An easy example is the rise and slow ebb of the ‘near me’ modifier. But it can be more subtle too.

Over a number of years I saw the dominant query syntax change from ‘[something] in [city]’ to ‘[city] [something]’. This wasn’t just looking at third-party query volume data but real impression and click data from that site. So it pays to revisit assumptions about query syntax on a periodic basis.
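One way to check this against your own data is to bucket queries by syntax and total the impressions for each bucket. This is a sketch under a few assumptions: the rows are made-up (query, impressions) pairs standing in for an export of real query data, the city list is a stand-in, and the two regular expressions are a deliberately crude way to detect each pattern.

```python
import re
from collections import defaultdict

CITIES = ["seattle", "denver"]  # stand-in list of cities
city_alternatives = "|".join(re.escape(city) for city in CITIES)

# Checked in order; the first pattern that matches wins.
PATTERNS = {
    "[something] in [city]": re.compile(rf"^.+ in (?:{city_alternatives})$"),
    "[city] [something]": re.compile(rf"^(?:{city_alternatives}) .+$"),
}


def impressions_by_syntax(rows):
    """Total impressions per syntax pattern; unmatched queries are skipped."""
    totals = defaultdict(int)
    for query, impressions in rows:
        cleaned = query.lower().strip()
        for label, pattern in PATTERNS.items():
            if pattern.match(cleaned):
                totals[label] += impressions
                break
    return dict(totals)


# Made-up rows for illustration only.
rows = [
    ("plumbers in seattle", 120),
    ("seattle plumbers", 300),
    ("denver plumbers", 200),
    ("plumbers in denver", 90),
    ("plumber reviews", 50),
]

totals = impressions_by_syntax(rows)
dominant = max(totals, key=totals.get)
print(totals)
print(dominant)
```

Run the same tally on a different date range and you can see whether the dominant syntax is drifting.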

TL;DR

Query syntax is looking at the patterns of words that make up queries. Our job as search marketers is to determine intent and deliver the right content, both subject and format, based on an analysis of query syntax.

By focusing on query syntax you can uncover query classes, capture featured snippets, improve titles, find content gaps and better understand algorithm changes.

TL;DC

(This is a new section I’m trying out for the related content I’ve linked to within this post. Not every link reference will wind up here. Only the ones I believe to be most useful.)

A Language for Search and Discovery

Search Driven Content Strategy

The end. Seriously. Go back to what you were doing. Nothing more to see here. This isn’t a Marvel movie.


Comments About Query Syntax

// 15 comments so far.

Victor Pan // February 11th 2019

Best, Reviews, VS, 2019 – I too, have a healthy obsession… and the shift from Google seems to have been to add a knowledge carousel (dammit Wikipedia) for a “list” of items for some queries. Search marketers that double-down on query classes are seeing huge wins, but at the same time these are the areas Google is creating search experiences just on a particular query class/vertical (not sure what the right terminology is here, but words matter). “jobs” and “flights” come into mind. I’m scared and frightened at the same time.

I think with the whole introduction of a Topic Layer + Google Discover, query syntax is going to be even more important to get right. Syntax pretty much dictates how advanced you are in the content you’re expecting, and a lot of times what you plan to do with that information. RIP recommending writing for a Xth grade reading level for everything (that sounded like a good idea back in the day, but now it’s a bit silly especially if your target converting audience typically has a masters degree in something).

Letting my mind wander a bit…. where I worry a bit is whether we can create content systematically for a particular query class that is able to update itself and improve over time in value for users at scale. It seems like UGC often gets pulled into the equation and content governance becomes the secret sauce in doing things that scale that can adapt to the seasonal fluctuations of intent changing. An ML-based bot for approving/disapproving additions? Not sure. We’re doing things pretty manually, keeping all that data, and hoping some day an answer will come from just looking at old things change over time (something something everything could be an ML problem if you frame it right).

As always, thanks for tickling my brain AJ.

AJ Kohn // February 11th 2019

Glad to have a fellow obsessive Victor!

There are definitely areas where Google is encroaching. It just means you have to find other query classes instead or reduce your expectations for those query classes. One thing I’m seeing is far more volume on query classes with more words – four or even five sometimes. Google seems to be rewarding specificity far more lately.

Agreed on the reading level thing. There’s just … well, people don’t really read anyway so what’s the use? I’m not so keen on Google’s discover jargon. It can be useful in certain instances but is still pretty limiting. I remember seeing something a number of years back that said Google tracked hundreds of variations of intent. Sadly, I can’t find that now. It’s frustrating as hell and haunts me.

I’m not sure about the ways in which you could automate updates to content to meet changes to intent. I think we’re a ways from that given how much of this is still so human driven. But a good first step would be simply knowing when the intent changes. I don’t think we have an early warning sign for that right now.

Kane Jamison // February 11th 2019

AJ great post!

When you’re thinking about content overlap – would you say that “Ranking URLs” is sufficient comparison (eg 6 shared links between these 2 queries = 60% overlap)? Or are you thinking of literally comparing the full text content of the URLs on each SERP?

AJ Kohn // February 11th 2019

Thanks Kane. You wrote a doozy of one too! And just that the ranking URLs are the same. I have no need to compare the full text within a tool. I’d just be looking to validate whether I needed eyes on the SERP.

But I could see returning 60% overlap and then a breakdown of intent as you’ve described it in your tool.

Drew Diehl // February 12th 2019

Hey AJ,

Super interesting stuff! I primarily work in the auto industry and have been conducting research on the variance in featured snippet content that pops up for a comparison query and how it relates to the car brand.

I haven’t found anything concrete, but there are some interesting trends when it comes to the snippet content for comparison content for perceived luxury brands vs cheaper brands. Essentially, for cheaper brands the snippet is more likely to focus on price and for luxury brands the snippet likely focuses on interior features.

When considering buyer intent for both groups, this makes plenty of sense, but have you noticed any changes in snippet content based on buyer intent related to a brand? It’d be interesting to see how they would gather data (sentiment analysis?) to incorporate, but I could see this incorporated into their query intent algorithm.

Thanks!

AJ Kohn // February 13th 2019

Thanks for the interesting comment Drew.

I think in those instances it’s likely just a product of the searches being conducted. You’re probably not going to see the words cheap and maserati together that often. The snippets team looks for specific comparison words (vs or versus) and then will grab the most relevant wording around that phrase sometimes. That’s where co-occurrence of words and brands (aka entities) might come into play.

Raffa // February 15th 2019

very insightful thank you! I am also thinking…”It would be nice to have a tool that provided content overlap scores for two terms”. In fact, I may build it myself. But keep us posted if you come across such a tool!

AJ Kohn // February 17th 2019

Thanks Raffa. And whether it’s you or someone else, I just hope the content overlap tool becomes a reality soon.

Mike Perez // February 18th 2019

Great stuff as usual AJ. After reading your post, I was inspired to create a simple content overlap tool. I figured I would share it with you and your readers as well. https://1point21interactive.com/cot/

AJ Kohn // February 18th 2019

Thanks Mike. A+ for putting that together so quickly. The tool works, though I’d be keen if it worked a bit quicker.

Mike Perez // February 19th 2019

Not a problem. Yes, was definitely too slow. Made some changes. Let me know if that seems faster for you.

AJ Kohn // February 19th 2019

MUCH faster Mike. Very nice! Just referenced this with a client.

Toby Graham // March 20th 2019

AJ I don’t know how you do it but you look at very complex SEO issues and keep it entertaining.

I’ve also had insane success in repurposing content to align more to searcher intent. It’s actually blown me away how a few small tweaks to existing content can have such an impact.

Also the fractured intent terms are the real pain in the SEO’s ass these days. I get why they’re doing it. And it does actually force users to expand on the number of words they’re using – which helps everyone in the long run. But I’ve seen high volume generic terms become impossible to compete on. All because the wise one has decided the site no longer matches that intent. So long 110,000 searches a month. Been nice knowing you.

p.s. Cool tool, Mike Perez (FYI – had to click on location to get the data to show)

AJ Kohn // March 24th 2019

Toby,

Thanks for the comment and apologies for the tardy reply. First off, I really appreciate that feedback about making the complex entertaining. It’s one of the things I really strive to do so it’s great to hear that I’ve accomplished that.

And you’re right, small tweaks can have a large impact. Algorithm changes often reveal those pieces of content where you have a query/intent/content mismatch. Solve those and you can be right back in the thick of things.

As for fractured intent, I feel you there. It’s not an easy conversation to have with a client. In fact, I just had one of those two days ago. It’s often very hard for a company to let go of a root term they think they should rank for but can’t.

Adam Ellifritt // May 13th 2019

Wow, you take quite possibly the most in-depth look at SEO and make it incredibly entertaining. Thank you for putting this together. 🙂
