SEO A/B testing is limiting your search growth.

I know, that statement sounds backward and wrong. Shouldn’t A/B testing help SEO programs identify what does and doesn’t work? Shouldn’t SEO A/B testing allow sites to optimize based on statistical fact? You’d think so. But it often does the opposite.

That’s not to say that SEO A/B testing doesn’t work in some cases or can’t be used effectively. It can. But it’s rare, and in my experience SEO A/B testing is both applied and interpreted incorrectly, leading to stagnant, status quo optimization efforts.

SEO A/B Testing

The premise of SEO A/B testing is simple. Split pages into two cohorts: a control group and a test group that receives your changes. Then measure the difference in performance between them. It’s a simple champion/challenger test.
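The champion/challenger arithmetic can be sketched in a few lines. This sketch assumes you have total organic clicks for each cohort over equal-length periods before and after the change ships. It treats the control cohort's change as a stand-in for what the test cohort would have done anyway, which amounts to a simple difference-in-differences on ratios. The function name and inputs are hypothetical, and dedicated testing platforms may model the control far more carefully than this.

```python
def relative_lift(control_pre: float, control_post: float,
                  test_pre: float, test_post: float) -> float:
    """Test cohort growth relative to control cohort growth, minus 1.

    The control's growth ratio stands in for what the test cohort would
    have done without the change (a ratio-based difference-in-differences).
    """
    control_growth = control_post / control_pre
    test_growth = test_post / test_pre
    return test_growth / control_growth - 1


# Hypothetical cohort totals: control grew 10%, test grew 15.5%.
print(f"{relative_lift(10_000, 11_000, 10_000, 11_550):.1%}")  # prints 5.0%
```

Dividing by the control's growth removes sitewide movement such as seasonality, but only if both cohorts really do respond to it the same way.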

So where does it go wrong?

The Sum is Less Than The Parts

I’ve been privileged to work with some very savvy teams implementing SEO A/B testing. At first it seemed … amazing! The precision with which you could make decisions was unparalleled.

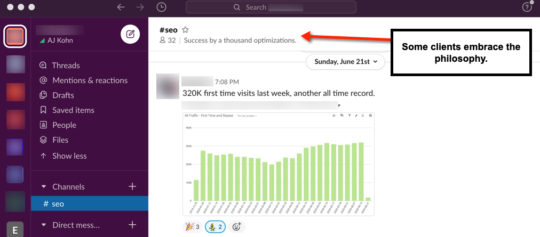

However, within a year I realized there was a very big disconnect between the SEO A/B tests and overall SEO growth. In essence, if you totaled up all of the SEO A/B testing gains that were rolled out, they added up to far more than actual SEO growth.

just sayin’ pic.twitter.com/NgLkw1SPvD

— Luke Wroblewski (@LukeW) May 15, 2018

I’m not talking about the difference between 50% growth and 30% growth. I’m talking 250% growth versus 30% growth. Obviously something was not quite right. Some clients wave off this discrepancy. Growth is growth, right?
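"Totaling up" test gains is simple arithmetic, and it shows how quickly claimed wins outrun reality. This sketch assumes each rolled-out test applied to the same set of pages and that the measured effects stack multiplicatively. The lifts and the observed growth figure below are hypothetical, not data from the post.

```python
import math


def implied_cumulative_lift(test_lifts: list[float]) -> float:
    """Compound the measured lifts of every rolled-out 'winning' test.

    Assumes each test touched the same pages and effects multiply; if the
    measured lifts were all real, sitewide growth should be at least this.
    """
    return math.prod(1 + lift for lift in test_lifts) - 1


# Hypothetical, illustrative numbers only.
rolled_out = [0.12, 0.08, 0.15, 0.06, 0.10, 0.09, 0.11, 0.07, 0.14, 0.05, 0.13, 0.08]
observed_growth = 0.30  # hypothetical actual organic growth over the same year

implied = implied_cumulative_lift(rolled_out)
print(f"implied by tests: {implied:.0%}, observed: {observed_growth:.0%}")
```

A dozen plausible-looking wins compound to a figure several times larger than 30%. A gap of that size says something about the measurements, not about the site.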

Yet, wasn’t the goal of many of these tests to measure exactly what SEO change was responsible for that growth? If that’s the case, how can we blithely dismiss the obvious fact that actual growth figures invalidate that central tenet?

Confounding Factors

So what is going on with the disconnect between SEO A/B tests and actual SEO growth? There are quite a few reasons why this might be the case.

Some are mathematical in nature, such as the winner’s curse. Some are problems with test size and structure. More often, I find that the test may not produce causative changes in the time period measured.
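The winner's curse is easy to reproduce with a small simulation. In this sketch every test has the same small true effect (a hypothetical 1%) plus Gaussian measurement noise. Only tests that look like clear winners are "shipped", and the average lift reported for those shipped tests is then compared with the truth. All parameters are assumptions chosen for illustration.

```python
import random
import statistics


def winners_curse_demo(n_tests: int = 2000, true_lift: float = 0.01,
                       noise_sd: float = 0.05, seed: int = 7) -> tuple[int, float]:
    """Simulate tests whose real effect is `true_lift`, then keep only 'winners'.

    measured lift = true lift + Gaussian noise; a test ships only if its
    measured lift exceeds 2 * noise_sd. Returns (tests shipped, mean measured
    lift of the shipped tests).
    """
    rng = random.Random(seed)
    measured = [true_lift + rng.gauss(0, noise_sd) for _ in range(n_tests)]
    shipped = [m for m in measured if m > 2 * noise_sd]
    return len(shipped), statistics.mean(shipped)


n_shipped, avg_reported = winners_curse_demo()
print(f"{n_shipped} winners shipped, average reported lift {avg_reported:.1%} vs true 1.0%")
```

Every shipped test reports a lift above 10% even though the real effect is 1%. Selecting on a noisy measurement guarantees that the winners overstate their gains.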

A/A Testing

Many sophisticated SEO A/B testing solutions come with A/A testing. That’s good! But many internal testing frameworks don’t, which can lead to errors. While there are more robust explanations, A/A testing reveals whether your control group is valid by testing the control against itself.

If there is no difference between two cohorts of your control group then the A/B test gains confidence. But if there is a large difference between the two cohorts of your control group then the A/B test loses confidence.

More directly, if you had a 5% A/B test gain but your A/A test showed a 10% difference then you have very little confidence that you were seeing anything but random test results.

In short, your control group is borked.
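The A/A idea can be approximated with the control pages' own data. The sketch below repeatedly splits the control pages at random and records how far apart two identically treated halves drift. It then checks whether an A/B lift clears that noise floor. The function names, the 95% quantile and the use of per-page click totals are assumptions for illustration. A live A/A test would compare two control cohorts over the actual test window.

```python
import random


def aa_differences(control_clicks: list[float], n_splits: int = 500,
                   seed: int = 0) -> list[float]:
    """Randomly split control pages into two halves many times and record the
    relative click difference between the halves.

    Both halves received identical treatment, so every difference is noise.
    Assumes each half has a non-zero click total.
    """
    rng = random.Random(seed)
    diffs = []
    for _ in range(n_splits):
        pages = control_clicks[:]  # copy so the caller's list is untouched
        rng.shuffle(pages)
        half = len(pages) // 2
        a, b = sum(pages[:half]), sum(pages[half:2 * half])
        diffs.append(b / a - 1)
    return diffs


def lift_beats_noise(ab_lift: float, diffs: list[float], quantile: float = 0.95) -> bool:
    """True if the A/B lift exceeds the chosen quantile of |A/A difference|."""
    spread = sorted(abs(d) for d in diffs)
    cutoff = spread[int(quantile * (len(spread) - 1))]
    return abs(ab_lift) > cutoff


# The example above: a 5% A/B gain against A/A differences of around 10%.
print(lift_beats_noise(0.05, [0.10, -0.10, 0.09, -0.08]))  # False
```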

Lots of Bork

There are a number of other ways in which your cohorts can get borked. Google refuses to pass a referrer for image search traffic. So you don’t really know if you’re getting the correct sampling in each cohort. If the test group gets 20% of traffic from image search but the control group gets 35%, then how would you interpret the results?

Some wave away this issue saying that you assume the same distribution of traffic in each cohort. I find it interesting how many slip from statistical precision to assumption so quickly.

Do you also know the percentage of pages in each cohort that are currently not indexed by Google? Maybe you’re doing that work, but I find most are not. Again, the assumption is that those metrics are the same across cohorts. If one cohort has a materially different percentage of pages out of the index, then you’re not making a fact-based decision.
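For the covariates you can measure, you can at least check them before trusting a result. This sketch applies a standard two-proportion z-test to ask whether the share of non-indexed pages differs between cohorts by more than chance would explain. It assumes you already have per-cohort counts from whatever indexation source you trust. The counts are hypothetical. Image search traffic can't be checked this way when no referrer identifies it.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_p_value(hits_a: int, n_a: int, hits_b: int, n_b: int) -> float:
    """Two-sided p-value for H0: both cohorts share the same proportion."""
    p_a, p_b = hits_a / n_a, hits_b / n_b
    pooled = (hits_a + hits_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 1.0  # both proportions are 0 or both are 1: no difference
    z = (p_a - p_b) / se
    return 2 * (1 - NormalDist().cdf(abs(z)))


# Hypothetical: 120 of 1,000 control pages vs 180 of 1,000 test pages not indexed.
p = two_proportion_p_value(120, 1000, 180, 1000)
print(f"p = {p:.4f}")
```

A tiny p-value means the cohorts weren't comparable before the change. Passing this check, however, only clears the covariates you thought to measure.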

Many of these potential errors can be reduced by increasing the sample size of the cohorts. But given those sample size requirements, very few sites can reliably run SEO A/B tests.
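The sample size problem shows up clearly in the textbook normal-approximation formula for comparing two means, expressed here as pages per cohort. The coefficient of variation of clicks per page (`cv`) and the minimum lift you want to detect are assumptions you would estimate from your own data. Real SEO split tests usually model time series rather than simple means, so treat this as a rough guide.

```python
from math import ceil
from statistics import NormalDist


def pages_per_cohort(min_lift: float, cv: float,
                     alpha: float = 0.05, power: float = 0.8) -> int:
    """Approximate pages needed per cohort to detect a relative lift in mean
    clicks per page.

    n = 2 * (z_{1 - alpha/2} + z_{power})^2 * (cv / min_lift)^2
    where cv = standard deviation / mean of clicks per page.
    """
    z = NormalDist().inv_cdf
    return ceil(2 * (z(1 - alpha / 2) + z(power)) ** 2 * (cv / min_lift) ** 2)


# Hypothetical: detect a 5% lift when clicks per page have cv = 2.
print(pages_per_cohort(min_lift=0.05, cv=2.0))
```

With those assumed inputs the answer is about 25,000 pages in each cohort. Halving the detectable lift quadruples the requirement, which is why small lifts are so hard to confirm.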

But Wait …

Maybe you’re starting to think about the other differences in each cohort. How many in each cohort have a featured snippet? What happens if the featured snippets change during the test? Do they change because of the test or are they a confounding factor?

Is the configuration of SERP features in each cohort the same? We know how radically different the click yield can be based on what features are present on a SERP. So how many Knowledge Panels are in each? How many have People Also Ask? How many have image carousels? Or video carousels? Or local packs?

Again, you have to hope that these are materially the same across each cohort and that they remain stable across those cohorts for the time the test is being run. I dunno, how many fingers and toes can you cross at one time?
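Balance at the start of a test can be engineered rather than hoped for by stratifying the assignment. This sketch groups pages by their combination of SERP-feature flags and splits each group in half, so both cohorts begin with nearly the same mix. The flag names and the page-record shape are hypothetical.

```python
import random
from collections import defaultdict


def stratified_split(pages: list[dict], strata_keys: tuple[str, ...],
                     seed: int = 42) -> tuple[list[str], list[str]]:
    """Split pages into control and test cohorts within strata.

    Pages are grouped by their values for `strata_keys` (e.g. SERP-feature
    flags); each group is shuffled and divided in half, so both cohorts start
    with nearly the same feature mix.
    """
    rng = random.Random(seed)
    strata: dict[tuple, list[str]] = defaultdict(list)
    for page in pages:
        strata[tuple(page[k] for k in strata_keys)].append(page["url"])

    control: list[str] = []
    test: list[str] = []
    for urls in strata.values():
        rng.shuffle(urls)
        # Randomly choose which cohort gets the extra page in odd-sized
        # strata, so neither cohort is systematically larger.
        first, second = (control, test) if rng.random() < 0.5 else (test, control)
        first.extend(urls[0::2])
        second.extend(urls[1::2])
    return control, test
```

This only balances the snapshot taken at assignment time. If featured snippets or local packs shift during the test, the cohorts can drift apart again. The same approach can stratify by traffic tier, which keeps a handful of outsized pages from landing in the same cohort.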

Exposure

Sometimes you begin an SEO A/B test and you start seeing a difference on day one. Does that make sense?

It really shouldn’t, because an SEO A/B test should only begin once you know that a material share of pages in both the test and control groups has been crawled.

Google can’t have reacted to something that it hasn’t even “seen” yet. So more sophisticated SEO A/B frameworks will include a true start date by measuring when a material number of pages in the test have been crawled.
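A "true start date" can be derived from your own crawl logs. This sketch assumes you have already parsed verified Googlebot hits per URL from server logs. It finds the date by which a threshold share of a cohort's pages had been recrawled after the change shipped. The 80% threshold stands in for "material" and is an assumption. The function name is hypothetical.

```python
import math
from datetime import date, datetime
from typing import Optional


def true_start_date(change_shipped: datetime,
                    crawl_times: dict[str, list[datetime]],
                    threshold: float = 0.8) -> Optional[date]:
    """Date by which `threshold` of a cohort's pages were recrawled after the change.

    crawl_times maps each URL in the cohort to its Googlebot hit timestamps.
    Pages with no crawl since the change still count in the denominator.
    Returns None if the threshold hasn't been reached yet.
    """
    first_recrawls = sorted(
        min(t for t in times if t >= change_shipped)
        for times in crawl_times.values()
        if any(t >= change_shipped for t in times)
    )
    needed = math.ceil(threshold * len(crawl_times))
    if needed == 0 or len(first_recrawls) < needed:
        return None
    return first_recrawls[needed - 1].date()
```

Run it for both the test and control cohorts and start measuring from the later of the two dates. A measured difference before that point is most likely not caused by the change.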

Digestion

What can’t be known is when Google actually “digests” these changes. Sure, they might crawl it, but when is Google actually taking that version of the crawl and updating that document as a result? If it identifies a change, do you know how long it takes for them to, say, reprocess the language vectors for that document?

That’s all a fancy way of saying that we have no real idea of how long it takes for Google to react to document-level changes. Mind you, we have a much better idea when it comes to Title tags. We can see them change. And we can often see that when they change they do produce different rankings.

I don’t mind SEO A/B tests when it comes to Title tags. But it becomes harder to be sure when it comes to content changes and a fool’s errand when it comes to links.

The Ultimate SEO A/B Test

In many ways, true A/B SEO tests are core algorithm updates. I know it’s not a perfect analogy because it’s a pre versus post analysis. But I think it helps many clients to understand that SEO is not about any one thing but a combination of things.

More to the point, if you lose or win during a core algorithm update how do you match that up with your SEO A/B tests? If you lose 30% of your traffic during an update how do you interpret the SEO A/B “wins” you rolled out in the months prior to that update?

What we measure in SEO A/B tests may not be fully baked. We may be seeing half of the signals being processed or Google promoting the page to gather data before making a decision.

I get that the latter might be controversial. But it becomes hard to ignore when you repeatedly see changes produce ranking gains only to erode over the course of a few weeks or months.

Mindset Matters

The core problem with SEO A/B testing is actually not, despite all of the above, in the configuration of the tests. It’s in how we use the SEO A/B testing results.

Too often I find that sites slavishly follow the SEO A/B testing result. If the test produced a -1% decline in traffic that change never sees the light of day. If the result was neutral or even slightly positive it might not even be launched because it “wasn’t impactful”.

They see each test as being independent from all other potential changes and rely solely on the SEO A/B test measurement to validate success or failure.

When I run into this mindset I either fire that client or try to change the culture. The first thing I do is send them this piece on Hacker Noon about the difference between being data informed and data driven.

Because it is exhausting trying to convince people that the SEO A/B test that saw a 1% gain is worth pushing out to the rest of the site. And it’s nearly impossible in some environments to convince people that a -4% result should also go live.

In my experience, SEO A/B test results between -10% and +10% generally wind up being neutral. So if you have an experienced team optimizing a site, you’re really using A/B testing as a way to identify big winners and big losers.
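That rule of thumb can be written down as a triage step, so a neutral result stops being a veto. This is a minimal sketch, not a statistical procedure. The 10% band mirrors the experience-based figure in the paragraph above, and the labels are hypothetical.

```python
def triage(measured_lift: float, neutral_band: float = 0.10) -> str:
    """Classify a test result using a +/-10% 'probably neutral' band.

    The band is an experience-based rule of thumb, not a significance
    threshold: only big swings are treated as informative.
    """
    if measured_lift >= neutral_band:
        return "big winner: ship"
    if measured_lift <= -neutral_band:
        return "big loser: hold and investigate"
    return "neutral: ship if backed by a sound hypothesis"


print(triage(-0.04))  # neutral: ship if backed by a sound hypothesis
```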

Don’t substitute SEO A/B testing results for SEO experience and expertise.

I get it. It’s often hard to gain the trust of clients or stakeholders when it comes to SEO. But SEO A/B testing shouldn’t be relied upon to convince people that your expert recommendations are valid.

The Sum is Greater Than The Parts

Because the secret of SEO is the opposite of death by a thousand cuts. I’m willing to tell you this secret because you made it down this far. Congrats!

Clients often want to force rank SEO recommendations. How much lift will better alt text on images drive? I don’t know. Do I know it’ll help? Sure do! I can certainly tell you which recommendations I’d implement first. But in the end you need to implement all of them.

By obsessively measuring each individual SEO change and requiring it to obtain a material lift you miss out on greater SEO gains through the combination of efforts.

In a follow-up post I’ll explore different ways to measure SEO health and progress.

TL;DR

SEO A/B tests provide a comforting mirage of success. But issues with how SEO A/B tests are structured, what they truly measure and the mindset they usually create limit search growth.


Comments About SEO A/B Testing


Amanda // February 03rd 2021

Hear, hear. A lot of marketing and dev teams aren’t mature enough, or don’t have the right statistical analysis in place, for conversion rate optimisation, let alone the more “bleeding edge” SEO A/B testing.

It’s a bit like design and UX in some ways: just because we can’t absolutely quantify for you the effect of this one change doesn’t mean it isn’t a good change that will improve the website.

AJ Kohn // February 03rd 2021

Thank you for your comment Amanda. It’s so important to think about whether this is a good change for the user.

Sometimes tests aren’t even measuring whether it improved conversion, much less weekly or monthly active users or something complex like an increase in multi-touch attribution using a 90-day window.

I still love to measure but I see better results from those who ship and measure more things rather than fight over the validity of a 1% lift via an A/B SEO test.

Amanda King // February 03rd 2021

Oh goodness, yes. And the whole rigmarole of //it got a -1% result so nope// that you mentioned. Even (or perhaps especially) statistics has a margin of error… I find that the less mature a company is in testing, the more they stick to the absolute numbers, without any room for colour or context.

Keen to hear your thoughts on measurement, too. I feel like you and Simo would have a fun time over a beverage or two figuring out how best to automate reporting on a delta of multi-touch attribution….

And then there are the folx who roll out CRO experiments without acknowledging that the lift they see could be negated by lower visibility in SERPs….sigh. Nightmares.

AJ Kohn // February 04th 2021

Completely agree that the less mature a company is in testing the more they stick to the absolute numbers. It’s honestly the impetus for this post. It crushes the growth opportunity at those companies.

On measurement, I might be able to converse and keep up but Simo would run circles around me.

Louis Gray // February 03rd 2021

Bork bork bork.

Still enjoying your posts, AJ!

AJ Kohn // February 04th 2021

Thank you Louis! It’s great to have you check in.

Richard Baxter // February 04th 2021

I worry that SEO A/B testing is sold on the principle that “the average SEO is at best guessing” when in fact, experience wins. And I also think the wisdom of the crowd applies to the industry best practice we’ve established. A/B testing tends to promote an idea that at-scale SEO work is the only kind of SEO work, when in fact I find myself working through lots of tiny optimisations (like CTR by improving meta descriptions to better match the likely intent of a query, for example). This process of improvement isn’t something you could adequately emulate with A/B testing. Buyer beware – test things, sure – but implement and re-test with common sense applied.

AJ Kohn // February 04th 2021

Well said Richard.

SEO is … opaque and as such it can be hard for decision makers to believe that SEOs know what they’re talking about, particularly when compared to our buttoned-up PPC siblings. To be clear, a lot of SEOs don’t know what they’re talking about. But many of us do because of our experience.

Too often SEO A/B testing is employed because they think it’s all a crapshoot and a guessing game. Expertise is meaningful and should not be dismissed as simple ‘gut feeling’. SEO A/B testing can be effective but only if SEO expertise is riding shotgun on what is being tested and interpreting what the results truly mean.

Nick Swan // February 04th 2021

I feel like I’ve got to reply to this as I have a tool called SEOTesting.com!

While the tool does have the ability to collect data from 2 groups of pages as an SEO split test, 99% of the tests people run using SEOTesting are time based tests. They understand they could be impacted by seasonality and algo updates, but they find them useful for two things:

1. Bearing seasonality and algo updates in mind, whether those changes had a positive effect

2. Simply as a marker, so in 6 months’ time they can remember what work was done on a page, and have the ability to look back at a before and after with regard to data.

It’s been really interesting to do a bit of analysis on time based tests that have completed. The results come out as being a 50% chance of an increase in clicks, and a 50% chance of a decrease in clicks! And this is based on the fact that my tool is aimed at people who know what they are doing when it comes to SEO!

SEO testing (time based or split testing) isn’t a silver bullet – but I think it’s something that is interesting to run and helps you learn to dig into the data behind changes that have been made on a site.

AJ Kohn // February 04th 2021

I’m glad you did reply. Similarly, I’ve had a great dialog with Will Critchlow on Twitter.

I actually like point two quite a bit. I find many don’t document changes very well so being able to annotate changes against a timeline is quite useful.

Now, the reliance on Google Search Console data does scare me since it is incomplete and frequently incorrect. Though if measured consistently it should still provide some insight.

Ramesh Singh // February 05th 2021

Well, kudos to AJ for doing this article. I can relate to many of the instances you mentioned in this article.

I personally do A/A testing to understand whether the change I’m testing makes any difference to my clicks, CTR or rankings within a specified time, usually 2-4 weeks for an individual page. I’m using Nick’s SEOtesting.com and am quite happy with the tool. Like he mentioned, you are able to understand which changes bring better visibility and keep an annotation of what was changed.

Rick Bucich // February 04th 2021

“The opposite of death by a thousand cuts” bam!

Oh boy, does this post touch about a thousand nerves. “Success” may not even have any measurable impact traffic-wise.

And don’t get me started on UX or conversion A/B tests that alter the production page to the point where it can impact SEO – and decisions are made on those results independently of SEO/traffic impact.

Lastly, very difficult to run a clean test if the data is dirty and the company won’t clean the data without running tests first. This is just poor leadership.

AJ Kohn // February 04th 2021

Agreed Rick. There are some SEO changes for which I doubt there will be a short-term bump in traffic. But I believe it will improve conversion or engagement or satisfaction, which ultimately will – over time – lead to higher rankings and traffic.

Unfortunately, in some instances internal stakeholders just want a number to point to so they can ‘prove’ their worth. It’s an easier story to tell.

Will Critchlow // February 05th 2021

I put some of my thoughts up on twitter (you kindly referred to them above, AJ) but I thought it was worth including here as well – written from the perspective of being a founder of an SEO A/B testing software platform!

So. It won’t surprise you to know I disagree with the first line (“SEO A/B testing is limiting your search growth”). But I agree with some crucial points:

1. you do need to work hard to avoid confounding factors and validate your work.

2. you do need to work hard to avoid “borking” your tests

3. most importantly you do need the right mindset – we talk a lot about “doing business not science”. It’s about doing better than you could without testing.

Now. Some things I disagree with:

1. Should you worry about something happening in the control and not in the variant? Not unduly IMO. This is a risk with any controlled test, and is exactly why you analyse the output statistically rather than eyeballing it.

(sidenote: maybe this is more about the “borking” point but we actually do put a lot of effort into detecting if a test has too small a sample size, has pages behaving differently to their groups, etc and into making statistically-similar buckets)

2. Is it a problem that we don’t know exactly when Google has “digested” a change? Again, not particularly – the statistics detect an uplift when and if it appears.

3. Should you avoid testing because a core update might change the landscape? Not in my opinion – you might as well argue that you shouldn’t change anything or do any SEO because the world might change.

Ultimately, I agree that SEO success is a result of many small improvements, but my takeaway from this is that it’s more important to test, not less:

https://www.searchpilot.com/resources/blog/marginal-losses-the-hidden-reason-your-seo-performance-is-lagging/

We have a lot of internal guidance that I need to write up for public consumption that covers the kind of thing you talk about in terms of figuring out what to do with inconclusive tests etc – our professional services team (one of our hopefully-not-too-secret-weapons: https://searchpilot.com/features/professional-services/ ) spends a lot of time telling customers similar things.

Short version: roll out inconclusive tests if they are backed by a good hypothesis, don’t throw away negative tests – consider modifying / learning from them. In fact we should “default to deploy” and seek to avoid the worst drops. Remember we’re doing business, not science.

More here: https://twitter.com/willcritchlow/status/1357367171949752321

AJ Kohn // February 06th 2021

As you say Will, I think we agree on a lot of points. I won’t go further here in this response since you’ve linked to the Twitter threads. (If you’re reading this, go on over and follow the replies.)

I think I have greater reservations about accuracy given the number of factors in play. However, I realize that in some ways we agree that the goal is to do more and not less. We may quibble over whether that ‘more’ should always be A/B tested, but I think we’re largely on the same page in terms of through-put and shipping being vital to success.

“Doing business, not science” is a great tagline.

Frank Sandtmann // February 05th 2021

Great post again, AJ. SEO has no silver bullet, but rather operates on the principle of death by a thousand cuts. I really like that analogy.

Working mostly with B2B customers, I am experiencing another problem with A/B testing. Due to the relatively low number of visits, for most tests it would take ages to reach statistical significance. Thus, experience and expertise might be even more valuable in niche markets.

AJ Kohn // February 06th 2021

Thanks Frank. And yes, to really get the most out of A/B testing you need a fairly large amount of traffic.

In those instances, I often see sites wait a long time for each test to gain significance. But that means they often only do a handful of tests! That, to me, is the real danger. You have to take more swings to get more hits.

aaron // February 08th 2021

The other big aspect here, beyond changes like seasonality, is that competitors are changing and user perceptions are constantly changing. Plus, some traffic is traffic you do not actually want. One of my early clients had a list of keywords by search volume, and some of those had modifiers like “cheap” or “discount” in them. They were very easy to rank for, but then the chargebacks came, and customer service time and cost increased as customers wanted freebies or wanted to change the terms of the deal after purchase. The wrong customer demographics flooded into the site and polluted the average order value, profit margin, refund costs, etc.

AJ Kohn // February 08th 2021

Thanks for the comment Aaron. You bring up two great points. First is that many will run that A/B test, get the result and then not revisit it … ever. It’s like the test closes the book on the issue. But as you say, user behavior changes over time. More than we often realize.

Second is the idea that all traffic is good traffic. I often say we could get a lot of traffic if we put ‘Free Beer’ in the Title tags but it wouldn’t actually help your business.

Dan // February 17th 2021

Few points:

1) In addition to A/A testing, I think it is important to keep a long-term holdout group that is never included in either test or control segments and is infrequently updated. That way you get a baseline for the cumulative effect of all SEO tests.

2) The compounding issues that AJ discusses, such as Google changes during a test, fall into a more general category of bias that randomized testing doesn’t resolve: interactions with external factors. For example, one site had just two products out of thousands that drove a big part of Holiday revenue. Both happened to make it into the same cohort for a test that started the last week in November. While that was a dramatic confounding factor, any test that goes on more than a few weeks will likely get hit by something.

3) I see SEO testing designed to get quick “wins.” I know of a team that did a load of title tag testing and evaluated click rates off of the SERP. They made determinations and updates in just a few weeks and never looked at changes in ranking down-the-road.
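A long-term holdout, as described in the first point of the comment above, can be kept stable in a few lines. This is one possible sketch, assuming assignment happens at the URL level. Each URL is hashed with a fixed salt, and the lowest buckets are reserved so those pages are never eligible for either cohort. The salt, the 5% size and the function names are hypothetical. Changing the salt is how you would "infrequently update" the holdout.

```python
import hashlib


def bucket(url: str, salt: str, buckets: int = 100) -> int:
    """Deterministically map a URL to a bucket in 0..buckets-1 via a salted hash."""
    digest = hashlib.sha256(f"{salt}:{url}".encode("utf-8")).hexdigest()
    return int(digest, 16) % buckets


def in_long_term_holdout(url: str, holdout_pct: int = 5,
                         salt: str = "seo-holdout-2021") -> bool:
    """True if the URL belongs to the stable holdout that no SEO test may touch."""
    return bucket(url, salt) < holdout_pct


# Only pages outside the holdout are eligible for test or control cohorts.
urls = [f"https://example.com/page/{i}" for i in range(1000)]
eligible = [u for u in urls if not in_long_term_holdout(u)]
```

Because the hash is deterministic, the same pages stay held out across every test. Comparing them with the rest of the site over months gives a baseline for the cumulative effect of everything you shipped.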

AJ Kohn // February 18th 2021

Thanks for the comment Dan.

Yes, if you’ve got enough traffic a long-term holdout can be quite useful. And you’re right that the longer a test runs, the better chance of confounding factors. Oddly, it may also help reduce a few of those as well. Analysis then becomes increasingly important.

Your last point is crucial. Title tag tests are great but often revert to the mean. And if you’re only looking at one metric it might not be great for long term success. I could put Free Shipping in the Title and juice CTR but if I don’t offer Free Shipping both conversion and engagement could suffer and ultimately reduce ranking.
