Google recently launched a new feature that allows users to personalize their search results by blocking certain domains. What impact will this have and what does it mean for the future of search?

Artificial Intelligence

A recent New York Post article by Peter Norvig discussed advances in artificial intelligence. Instead of creating HAL, the current philosophy is to allow both human and computer to concentrate on what they do best.

A good example is the web search engine, which uses A.I. (and other technology) to sort through billions of web pages to give you the most relevant pages for your query. It does this far better and faster than any human could manage. But the search engine still relies on the human to make the final judgment: which link to click on, and how to interpret the resulting page.

The partnership between human and machine is stronger than either one alone. As Werner von Braun said when he was asked what sort of computer should be put onboard in future space missions, “Man is the best computer we can put aboard a spacecraft, and the only one that can be mass produced with unskilled labor.” There is no need to replace humans; rather, we should think of what tools will make them more productive.

I like where this might be leading and absolutely love the idea of personalized results. Let me shape my own search results!

Human Computer Information Retrieval

I’ve been reading a lot about HCIR lately. It’s a fascinating area of research that could truly change how we search. Implemented the right way, search would become very personal and very powerful.

The challenge seems to be creating effective human-computer refinement interfaces. Or, more specifically, interfaces that produce active refinement, not passive refinement.

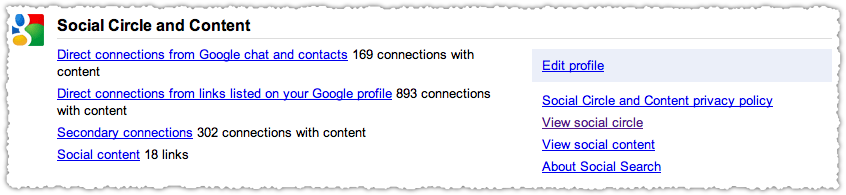

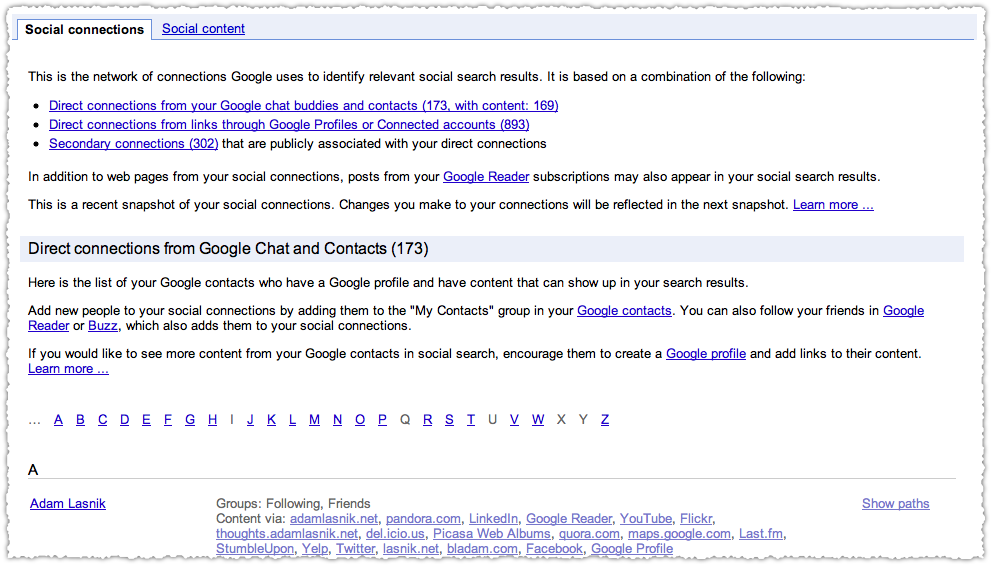

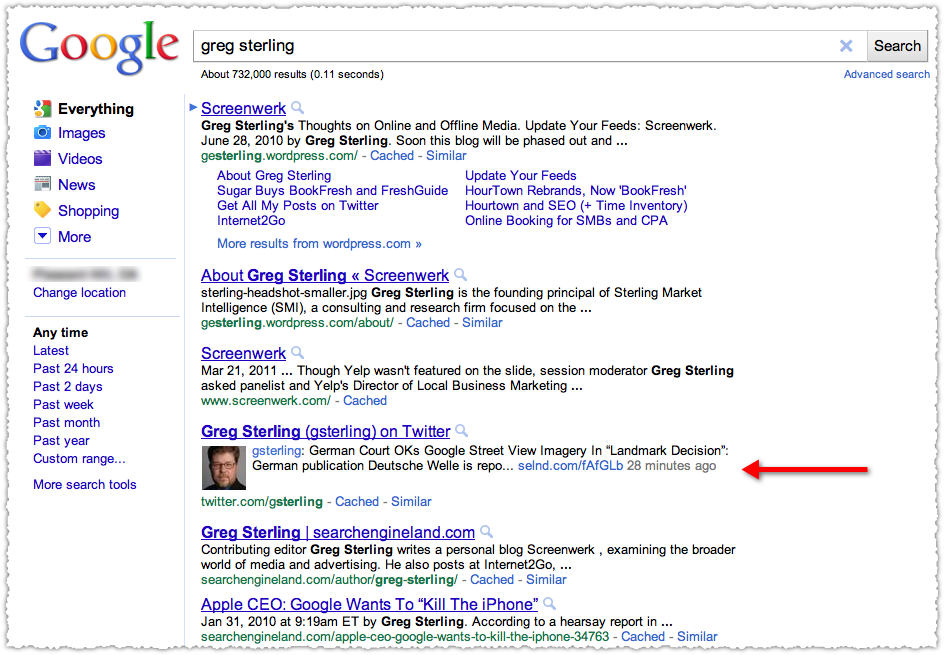

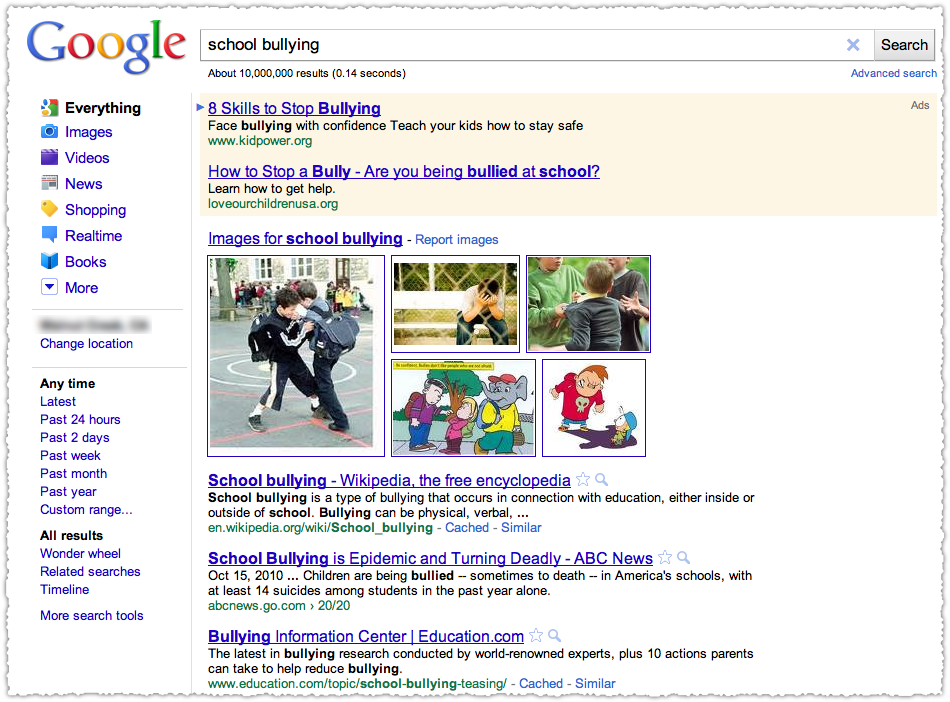

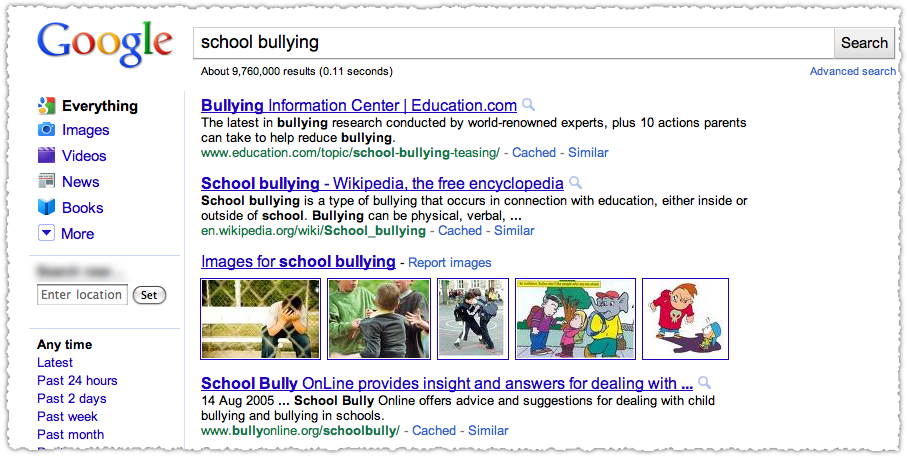

At present, Google uses a lot of passive refinement to personalize results. They look at an individual’s search and web history, track click-through rate and pogosticking on SERPs and add a layer of geolocation.
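To make one of those passive signals concrete, here is a minimal sketch of how pogosticking could be measured from a click log. It is an illustration only, not a description of how Google does it: the `Click` record, the `pogostick_rate` function and the 10-second `max_dwell` threshold are all assumptions made for this example, and the log is assumed to cover a single user session.

```python
from dataclasses import dataclass


@dataclass
class Click:
    """One click on a search result (hypothetical log record)."""
    query: str
    url: str
    timestamp: float  # seconds since the start of the session


def pogostick_rate(clicks, max_dwell=10.0):
    """Share of clicks that were followed, within max_dwell seconds,
    by a click on a different result for the same query.

    max_dwell is an arbitrary threshold chosen for illustration.
    """
    if not clicks:
        return 0.0
    ordered = sorted(clicks, key=lambda c: c.timestamp)
    bounces = 0
    for current, nxt in zip(ordered, ordered[1:]):
        same_query = current.query == nxt.query
        different_result = current.url != nxt.url
        quick_return = (nxt.timestamp - current.timestamp) <= max_dwell
        if same_query and different_result and quick_return:
            bounces += 1
    return bounces / len(ordered)
```

The point is that none of this requires the user to do anything deliberate. The signal is inferred from behavior, which is what makes it passive.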

Getting users to actively participate has been a problem for Google.

A Brief History of Google Personalization

Google launched personalized search in June of 2005 and expanded their efforts in February of 2007. But the first major foray into soliciting active refinement was in November of 2008 with the launch of SearchWiki.

This new feature is an example of how search is becoming increasingly dynamic, giving people tools that make search even more useful to them in their daily lives.

The problem was that no one really used SearchWiki. In the end it was simply too complicated and couldn’t compete with other elements on the page, including the rising prominence of universal search results and additional Onebox presentations.

In December of 2009 Google expanded the reach of personalized search.

What we’re doing today is expanding Personalized Search so that we can provide it to signed-out users as well. This addition enables us to customize search results for you based upon 180 days of search activity linked to an anonymous cookie in your browser.

This didn’t go down so well with a number of privacy folks. However, I believe it showed that Google felt personalized search did benefit users. They also probably wanted to expand their data set.

In March of 2010 SearchWiki was retired with the launch of Stars.

With stars, we’ve created a lightweight and flexible way for people to mark and rediscover web content.

Stars wasn’t really about personalizing results. It presented relevant bookmarks at the top of your search results. Google clearly learned that the interaction design for SearchWiki wasn’t working. The Stars interaction design was far easier, but the feature benefits weren’t compelling enough.

A year later, Stars has been replaced with blocked sites.

We’re adding this feature because we believe giving you control over the results you find will provide an even more personalized and enjoyable experience on Google.

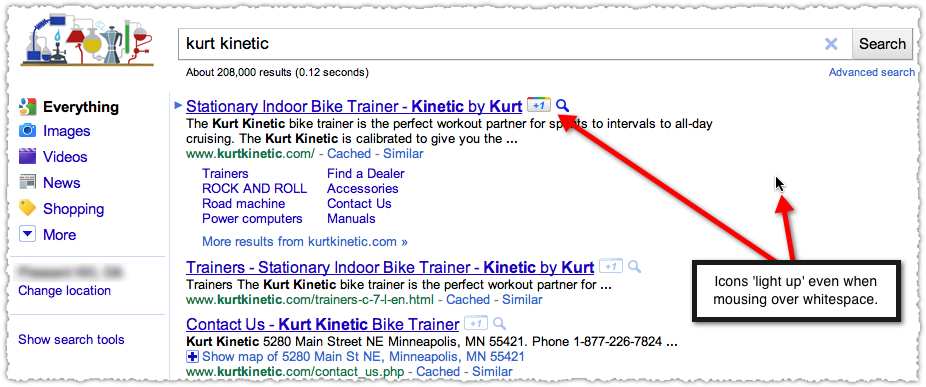

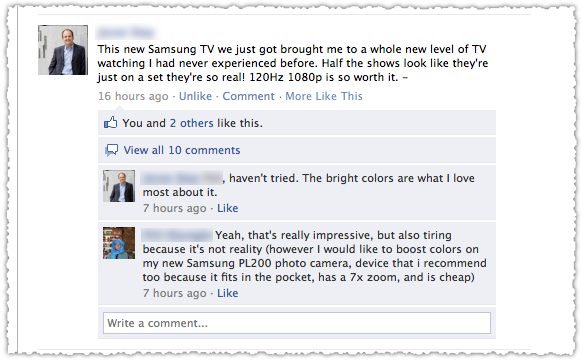

Actually, I’m not sure what this feature is called. Are we blocking sites or hiding sites? The lack of product marketing surrounding this feature makes me think it was rushed into production.

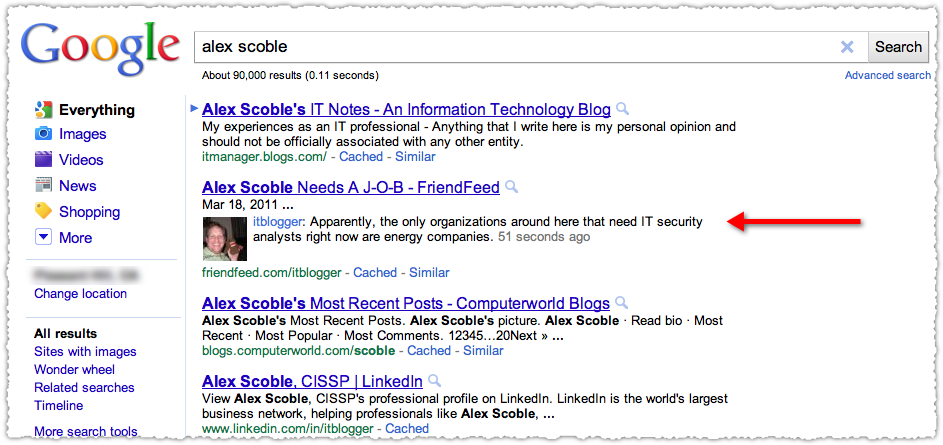

In addition, the interaction design of the feature is essentially the same as FriendFeed’s hide functionality. Perhaps that’s why the messaging is so confused.

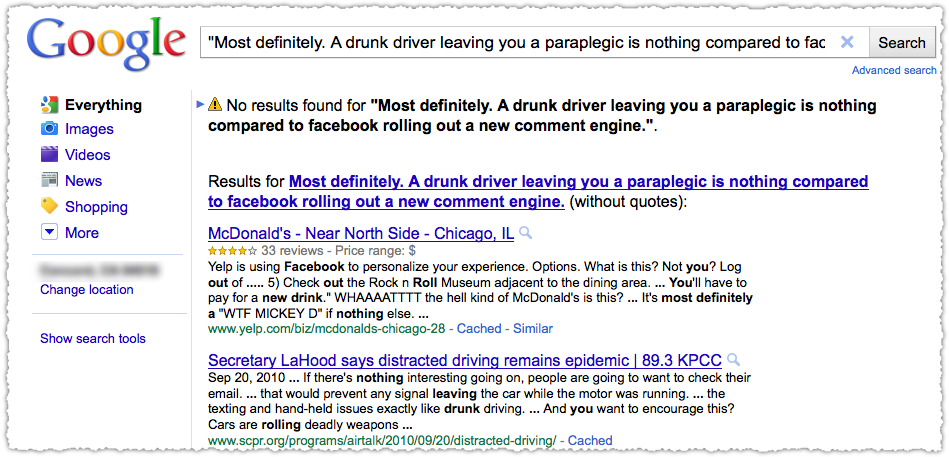

Cribbing the FriendFeed hide feature isn’t a bad thing – it’s simple, elegant and powerful. In fact, I hope Google adopts the extended feature set and allows results from a blocked site to be surfaced if it is recommended by someone in my social graph.
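The extended behavior I’m hoping for is simple enough to sketch. This is a minimal illustration under stated assumptions, not how Google or FriendFeed implements anything: the `Result` type, its `recommended_by` field, and the `domain_of` and `personalize` functions are hypothetical names invented for this example.

```python
from dataclasses import dataclass, field
from urllib.parse import urlparse


@dataclass
class Result:
    """One search result (hypothetical structure)."""
    url: str
    # Hypothetical: IDs of users who have recommended this URL.
    recommended_by: set = field(default_factory=set)


def domain_of(url):
    """Return the lowercase host of a URL, without a leading 'www.'."""
    host = (urlparse(url).hostname or "").lower()
    return host[4:] if host.startswith("www.") else host


def personalize(results, blocked_domains, friends):
    """Hide results from blocked domains unless a friend recommended them.

    The relative order of the remaining results is preserved, and only
    this user's view of the results changes.
    """
    kept = []
    for result in results:
        blocked = domain_of(result.url) in blocked_domains
        vouched_for = bool(result.recommended_by & friends)
        if blocked and not vouched_for:
            continue
        kept.append(result)
    return kept
```

For example, with `spam.example` blocked, a `spam.example` page recommended by a friend would still appear, while every other page from that domain would stay hidden.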

Can Google Engage Users?

I wish Google had launched the block feature more aggressively and before any large-scale algorithmic changes. The staging of these developments points to a lack of confidence in engaging users to refine search results.

Google hasn’t solved the active engagement problem. Other Google products that rely on active engagement have also failed to dazzle, including Google Wave and Google Buzz.

I worry that this shortcoming may cause Google to focus on leveraging engagement rather than working on ways to increase the breadth and depth of engagement.

In addition, while we’re not currently using the domains people block as a signal in ranking, we’ll look at the data and see whether it would be useful as we continue to evaluate and improve our search results in the future.

This may simply be a way to reserve the right to use the data in the future. And, in general, I don’t have a problem with using the data as long as it’s used in moderation.

Curated data can help augment the algorithm. Yet, it is a slippery slope. The influence of others shouldn’t have a dramatic effect on my search results and certainly should not lead to sites being removed from results altogether.

That’s not personalization, that’s censorship.

SERPs are not Snowflakes

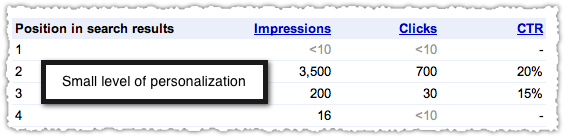

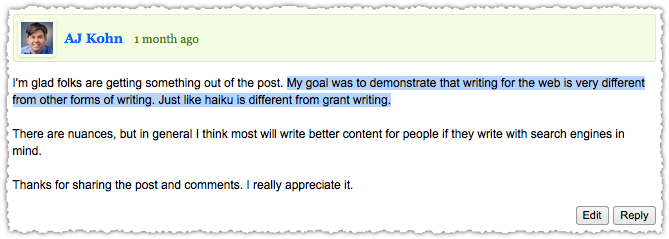

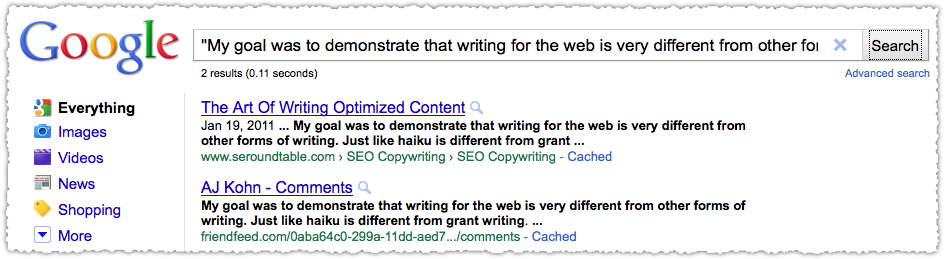

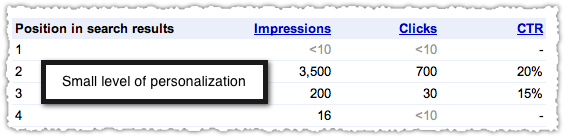

All of Google’s search personalization has been relatively subtle and innocuous. Rank is still meaningful despite claims by Chicken Little SEOs. I’m not sure what reports they’re looking at, but the variation in rank on terms due to personalization is still low.

Even when personalization is applied, it is rarely a game changer. You’ll see small movement within the rankings, but not wild changes. I can still track and trend average rank, even with personalization becoming more commonplace. Given the amount of bucket testing Google is doing I can’t even say that the observed differences can be attributed solely to personalization.

I don’t use rankings as a way to steer my SEO efforts, but to think rank is no longer useful as a measurement device is wrong. Yet, personalization still has the potential to be disruptive.

The Future of Search Personalization

Google needs to increase the level of active human interaction with search results. They need our help to take search to the next level. Yet, most of what I hear lately is about Google trying to predict search behavior. Have they given up on us? I hope not.

Gary Marchionini, a leader in the HCIR field, puts forth a number of goals for HCIR systems. Among them are a few that I think bear repeating.

Systems should increase user responsibility as well as control; that is, information systems require human intellectual effort, and good effort is rewarded.

Systems should be engaging and fun to use.

The idea that the process should be engaging and fun to use, and that good effort is rewarded, sounds a lot like game mechanics. Imagine if Google could get people to engage with search results on the same level as they engage with World of Warcraft!

Might a percentage complete device, popularized by LinkedIn, increase engagement? Maybe, like Stack Overflow, certain search features are only available (or unlocked) once a user has invested time and effort? Game mechanics not only increase engagement but also help introduce, educate and train users on that product or system.

Gamification of search is just one way you could try to tackle the active engagement problem. There are plenty of other avenues available.

Personalization and SEO

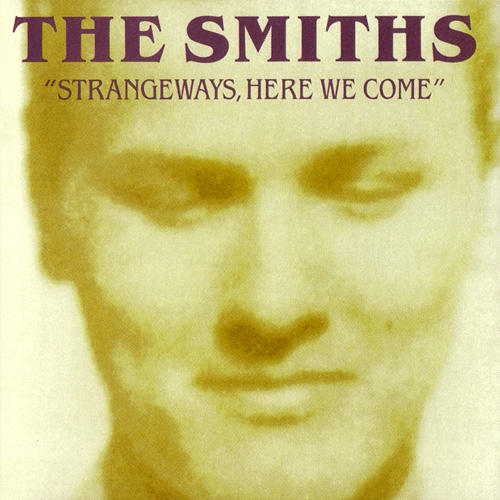

I used the cover artwork from the Smiths’ last studio album at the beginning of this post. I thought ‘Strangeways, Here We Come’ was an apt description for the potential future of personalized search. However, a popular track from this album may be more meaningful.

Stop me if you think you’ve heard this one before.

SEO is not dead, nor will it die as a result of personalization. The industry will continue to evolve and grow. Personalization will only hasten the integration of numerous other related fields (UX and CRO among others) into SEO.

The block site feature is a step in the right direction because it allows control and refinement of the search experience transparently without impacting others. It could be the start of a revolution in search. Yet … I have heard this one before.

Let’s hope Google has another album left in them.