There are plenty of SEO metrics staring you right in the face, as the folks at SEOmoz recently pointed out.

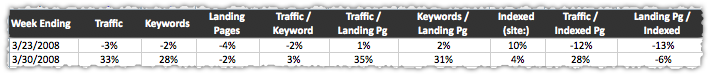

SEO Metrics Dashboard

I’ll quickly review the SEO metrics I’ve tracked and used for years. Combined, they make a decent SEO metrics dashboard.

SEO Visits

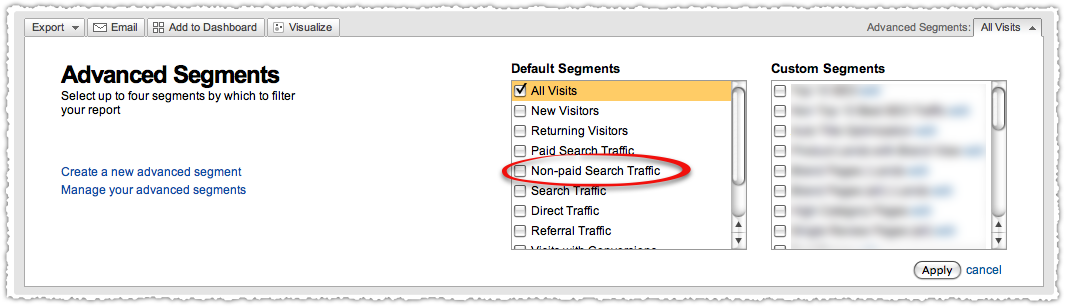

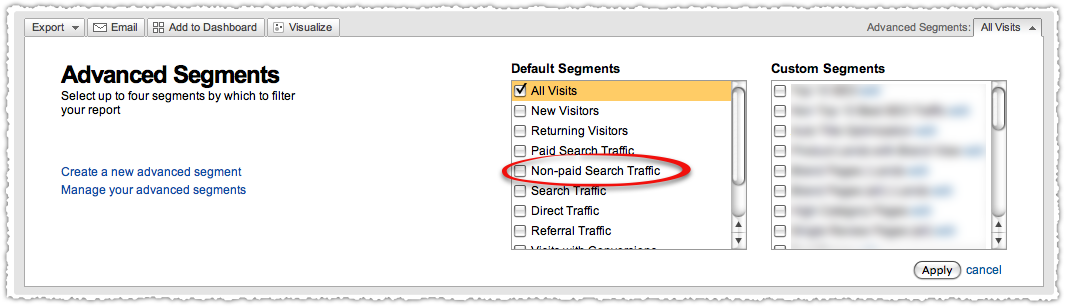

Okay, turn in your contractor SEO credentials if you’re not tracking this. Google Analytics makes it easy with its built-in Non-paid Search Traffic default advanced segment.

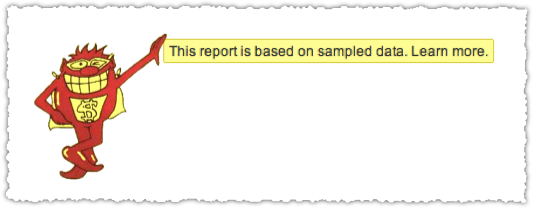

However, be careful to measure by the week when using this advanced segment. A longer time frame can often trigger data sampling. You do not want to see this. It’s the Google Analytics version of the Whammy.

Alternatively, you can skip the default advanced segment and instead navigate to All Traffic -> Search Engines (Non-Paid), or drill down under All Traffic Sources to Medium -> Organic. Beware: you might still run into the sampling whammy if you’re looking at longer time frames.
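If you pull numbers week by week to stay clear of sampling, it helps to generate the date ranges up front. Below is a minimal Python sketch that splits a longer period into Sunday-to-Saturday weeks. The Sunday start is an assumption (chosen because the weeks analyzed later, 3/23/08 and 3/30/08, both begin on a Sunday), and `weekly_ranges` is a hypothetical helper, not part of Google Analytics.

```python
from datetime import date, timedelta


def weekly_ranges(start: date, end: date) -> list[tuple[date, date]]:
    """Split the period [start, end] into Sunday-to-Saturday chunks.

    Assumption: reporting weeks start on Sunday. The first and last chunks
    are partial weeks if start/end don't fall on a week boundary.
    """
    ranges = []
    current = start
    while current <= end:
        # date.weekday() is Monday=0 ... Sunday=6, so Saturday is 5.
        days_to_saturday = (5 - current.weekday()) % 7
        week_end = min(current + timedelta(days=days_to_saturday), end)
        ranges.append((current, week_end))
        current = week_end + timedelta(days=1)
    return ranges


for first_day, last_day in weekly_ranges(date(2008, 3, 23), date(2008, 4, 5)):
    print(first_day, "to", last_day)
```

Each range can then be entered as the report's date range one at a time, keeping every individual query to a single week.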

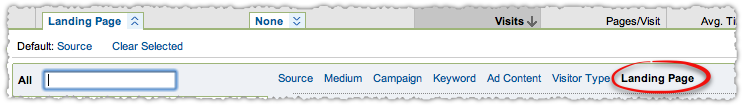

SEO Landing Pages

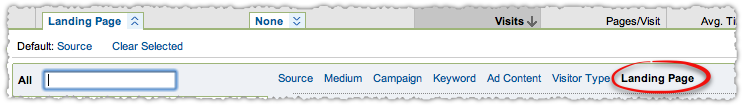

In Google Analytics, use the drop-down menu to determine how many landing pages drove SEO traffic each week.

I’m less concerned with the actual pages than with simply knowing the raw number of pages that brought SEO traffic to the site in a given week.

SEO Keywords

Similarly, using the Google Analytics drop-down menu, you can determine how many keywords drove SEO traffic each week.

Again, the actual keywords are less important to me (at this point) than the weekly volume.
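If you export organic traffic rather than reading the counts off the drop-down, the weekly visits, landing page count and keyword count are easy to compute. This sketch assumes a hypothetical export where each row carries the week, landing page, keyword and visits; the `Row` layout and the `weekly_counts` name are illustrative, not a Google Analytics format.

```python
from collections import defaultdict
from typing import NamedTuple


class Row(NamedTuple):
    """One row of a hypothetical organic-search export."""
    week: str          # label for the week, e.g. "2008-03-23"
    landing_page: str
    keyword: str
    visits: int


def weekly_counts(rows):
    """Return {week: {"visits": n, "landing_pages": n, "keywords": n}}."""
    visits = defaultdict(int)
    pages = defaultdict(set)
    keywords = defaultdict(set)
    for row in rows:
        if row.visits <= 0:
            continue  # only count pages/keywords that actually drove visits
        visits[row.week] += row.visits
        pages[row.week].add(row.landing_page)
        keywords[row.week].add(row.keyword)
    return {
        week: {
            "visits": visits[week],
            "landing_pages": len(pages[week]),  # distinct pages, not rows
            "keywords": len(keywords[week]),    # distinct keywords, not rows
        }
        for week in sorted(visits)
    }
```

Using sets matters here: the same page or keyword appears on many rows, but it should only count once per week.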

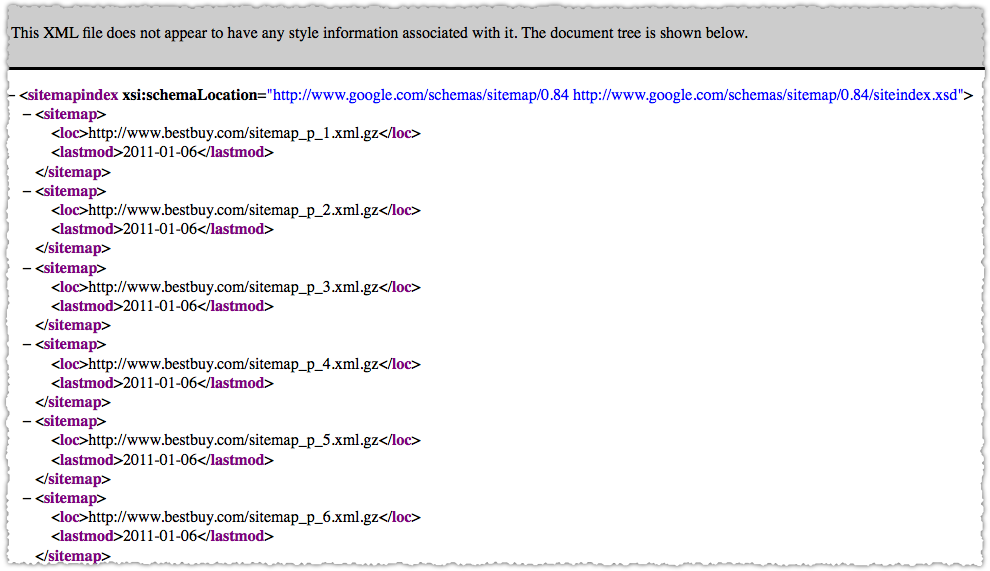

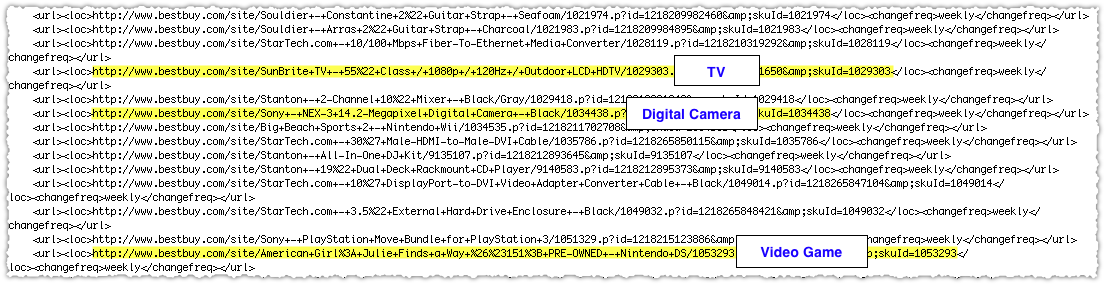

Indexed Pages

Each week I also capture the number of indexed pages. I used to do this using the site: operator but have been using Google Webmaster Tools for quite a while since it seems more accurate and stable.

If you go the Webmaster Tools route, make certain that you have your sitemap(s) submitted correctly since duplicate sitemaps can often lead to inflated indexation numbers.
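One way to check for the kind of overlap that can inflate indexation numbers is to list the URLs in each submitted sitemap and flag any that appear more than once. A minimal sketch using Python's standard-library `xml.etree.ElementTree`, assuming you have the raw XML of each `<urlset>` sitemap as a string (sitemap index files are not expanded here); `sitemap_urls` and `duplicate_urls` are hypothetical helpers.

```python
import xml.etree.ElementTree as ET
from collections import Counter

# Namespace used by <urlset> sitemaps (sitemaps.org protocol 0.9).
SITEMAP_NS = "{http://www.sitemaps.org/schemas/sitemap/0.9}"


def sitemap_urls(xml_text: str) -> list[str]:
    """Return every <loc> URL in one <urlset> sitemap."""
    root = ET.fromstring(xml_text)
    return [loc.text.strip() for loc in root.iter(SITEMAP_NS + "loc") if loc.text]


def duplicate_urls(*sitemaps: str) -> dict[str, int]:
    """Map each URL listed more than once (across all sitemaps) to its count."""
    counts = Counter(url for xml_text in sitemaps for url in sitemap_urls(xml_text))
    return {url: n for url, n in counts.items() if n > 1}
```

An empty result suggests the sitemaps don't overlap; a long list is a reason to look harder at how the sitemaps were submitted.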

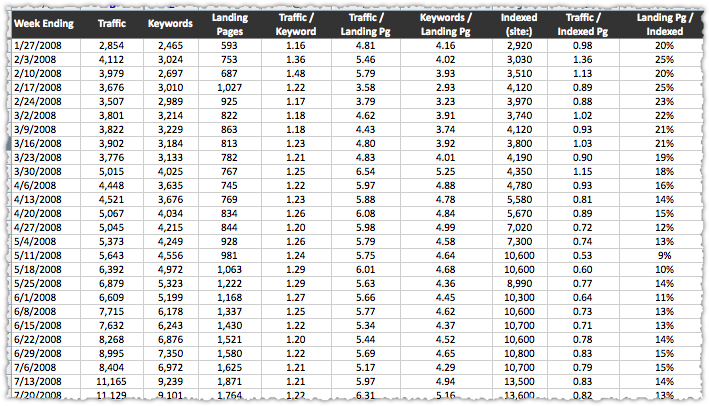

Calculated Fields

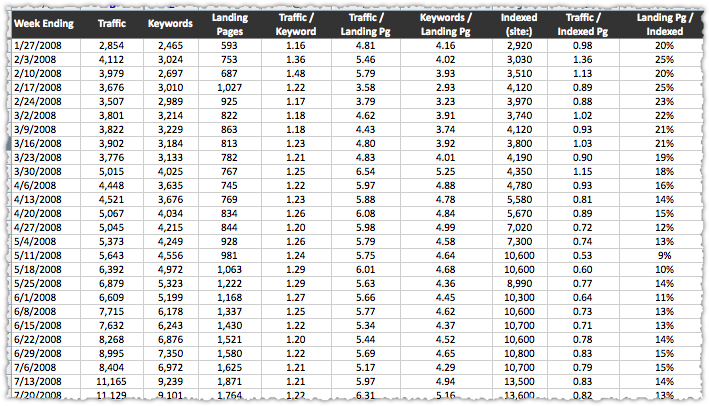

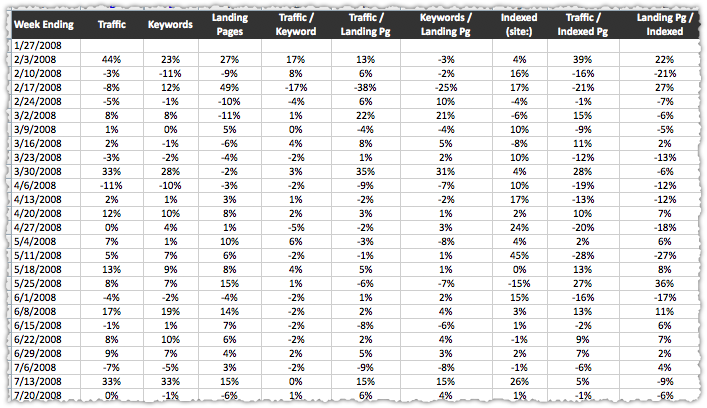

With those four pieces of data, I create five calculated metrics.

- Visits/Keywords

- Visits/Landing Pages

- Keywords/Landing Pages

- Visits/Indexed Pages

- Landing Pages/Indexed Pages
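The five calculated metrics above are simple ratios of the four weekly inputs. A minimal sketch with hypothetical names (`WeeklyInputs`, `calculated_metrics`); returning NaN when a denominator is zero is an assumption made here, so that a week with missing data stands out instead of breaking the calculation.

```python
from typing import NamedTuple


class WeeklyInputs(NamedTuple):
    """The four numbers captured each week."""
    visits: int
    keywords: int
    landing_pages: int
    indexed_pages: int


def calculated_metrics(w: WeeklyInputs) -> dict[str, float]:
    """Compute the five calculated metrics for one week."""
    def ratio(numerator, denominator):
        # NaN (not an exception) when the denominator is zero.
        return numerator / denominator if denominator else float("nan")

    return {
        "Visits/Keywords": ratio(w.visits, w.keywords),
        "Visits/Landing Pages": ratio(w.visits, w.landing_pages),
        "Keywords/Landing Pages": ratio(w.keywords, w.landing_pages),
        "Visits/Indexed Pages": ratio(w.visits, w.indexed_pages),
        "Landing Pages/Indexed Pages": ratio(w.landing_pages, w.indexed_pages),
    }
```

Run it once per week and the output becomes one row of the raw number tab.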

These calculated metrics are where I find the most benefit. While I do track them separately, real analysis comes only from looking at how these metrics interact with each other. Let me say it again: do not look at these metrics in isolation.

Inevitably, I get asked whether such-and-such is a good Visits/Landing Pages number. The thing is, there are no good or bad numbers (within reason). The idea is to measure (and improve) the performance of these metrics over time and to use them to diagnose changes in SEO traffic.

Visits/Keywords

This metric can often provide insight into how well you’re ranking. When it goes up, your overall rank may be rising. However, it could also be influenced by seasonal search volume. For example, if you were analyzing a site that provided tax advice, I’d guess that the Visits/Keywords metric would go up during April due to the increased volume for tax terms.

Remember, these metrics are high-level indicators. They’re a warning system. When one of the indicators changes, investigate to determine why it changed. Did you get more visits, or did you receive the same traffic from fewer keywords? Find out and then act accordingly.

Visits/Landing Pages

The Visits/Landing Pages metric usually tells me how effective an average page is at attracting SEO traffic. Again, look under the covers before you make any hasty decisions. An increase in this metric could be the product of fewer landing pages. That could be a bad sign, not a good one.

In particular, look at how Visits/Keywords and Visits/Landing Pages interact.

Keywords/Landing Pages

I use this metric to track keyword clustering. This is particularly nice if you’re launching a new set of content. Once the new pages are published and indexed, you often see the Keywords/Landing Pages metric go down. New pages may not attract a lot of traffic immediately, and the ones that do often bring in traffic from only a single keyword.

However, as these pages mature they begin to bring in more traffic: first from just a select group of keywords and then (if things are going well) from a larger group of keywords. That is keyword clustering, and it’s one of the ways I forecast SEO traffic.
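To watch keyword clustering on a batch of new pages, one option is to count how many distinct keywords send visits to each page every week; a rising count is the broadening described above. This sketch assumes a hypothetical export of `(week, landing_page, keyword, visits)` tuples and a set of page paths you want to follow; `keywords_per_page_by_week` is an illustrative name.

```python
from collections import defaultdict


def keywords_per_page_by_week(rows, pages):
    """Count distinct keywords driving visits to each tracked page, per week.

    rows: iterable of (week, landing_page, keyword, visits) tuples
    (a hypothetical export layout). pages: set of pages to follow.
    Returns {page: {week: distinct_keyword_count}}, weeks sorted by label.
    """
    seen = defaultdict(lambda: defaultdict(set))
    for week, page, keyword, visits in rows:
        if page in pages and visits > 0:
            seen[page][week].add(keyword)
    return {
        page: {week: len(kws) for week, kws in sorted(weeks.items())}
        for page, weeks in seen.items()
    }
```

Sorting by week label assumes labels that sort chronologically, such as ISO dates.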

Visits/Indexed Pages

I like to track this metric as a general SEO health metric. It tells me about SEO efficiency. Again, there is no real right or wrong number here. A site with fewer pages, but ranking well for a high volume term may have a very high Visits/Indexed Pages metric. A site with a lot of pages (which is where I do most of my work) may be working the long-tail and will have a lower Visits/Indexed Pages number.

The idea is to track and monitor the metric over time. If you’re launching a whole new category for an eCommerce site, those pages may get indexed quickly but not generate the requisite visits right off the bat. Whether the Visits/Indexed Pages metric bounces back as those new pages mature is what I focus on.

Landing Pages/Indexed Pages

This metric gives you an idea of what percentage of your indexed pages are driving traffic each week. This is another efficiency metric. Sometimes this leads me to investigate which pages are working and which aren’t. Is there a crawl issue? Is there an architecture issue? It can often lead to larger discussions about what a site is focused on and where it should dedicate resources.

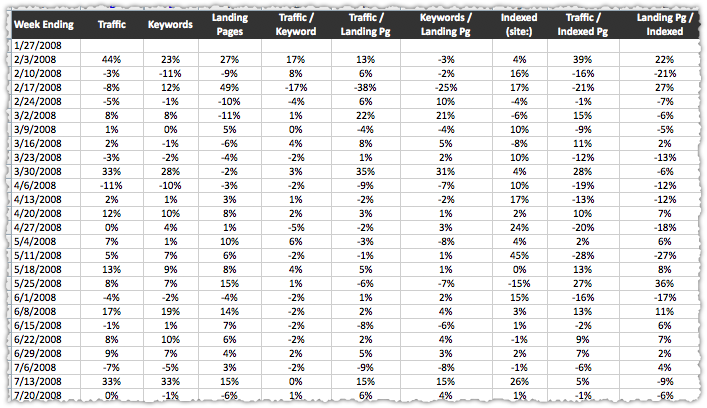

Measure Percentage Change

Once you plug in all of these numbers and generate the calculated metrics, you might look at the numbers and think they’re not moving much. Indeed, from a raw number perspective they sometimes don’t move that much. That’s why you must look at them by percentage change.

For instance, for a large site, moving the Visits/Keywords metric from 3.2 to 3.9 may not look like a lot. But it’s actually a 22% increase! And when your SEO traffic changes, you can immediately look at the percentage change numbers to see which metric moved the most.
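Worked out with the usual percentage-change formula, the Visits/Keywords example comes to:

```latex
\text{percentage change}
  = \frac{\text{new} - \text{old}}{\text{old}} \times 100
  = \frac{3.9 - 3.2}{3.2} \times 100
  = \frac{0.7}{3.2} \times 100
  \approx 21.9\%
```

which rounds to the 22% quoted.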

To easily measure the percentage change, I recommend creating another tab in your spreadsheet and making that your percentage change view. That way you have a raw number tab and a percentage change tab.
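If you would rather script the percentage change tab than build it with spreadsheet formulas, it is a week-over-week comparison of every column. A sketch, assuming the raw number tab is represented as a chronological list of `(week_label, {metric: value})` pairs; the layout and the `percent_change_view` name are hypothetical.

```python
def percent_change_view(raw_weeks):
    """Build the percentage change 'tab' from the raw number 'tab'.

    raw_weeks: chronological list of (week_label, {metric: value}) pairs
    (a hypothetical layout). Returns one row per week after the first;
    a value is None when the prior week's number was zero or missing.
    """
    view = []
    # Pair each week with the week before it.
    for (_, prev), (week, curr) in zip(raw_weeks, raw_weeks[1:]):
        row = {}
        for metric, value in curr.items():
            old = prev.get(metric)
            row[metric] = (value - old) / old * 100 if old else None
        view.append((week, row))
    return view
```

A `None` marks a metric whose previous value was zero or missing, where a percentage change is undefined.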

SEO Metrics Analysis

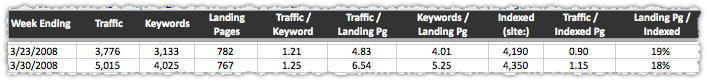

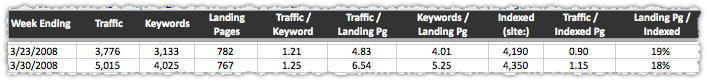

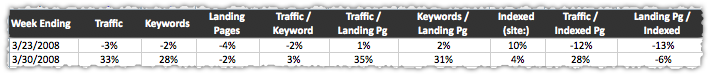

I’m going to do a quick analysis looking back at some of this historical data. In particular I’m going to look at the SEO traffic increase between 3/23/08 and 3/30/08.

That’s a healthy jump in SEO traffic. Let there be much rejoicing! To quickly find out what exactly drove that increase I’ll switch to the percentage change view of these metrics.

In this view you can quickly see that the 33% increase in SEO traffic was driven almost exclusively by a 28% increase in Keywords. This was an instance where keyword clustering took effect and pages began receiving traffic for more (related) query terms. Look closely and you’ll notice that this increase occurred despite a 2% decrease in the number of Landing Pages.
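The first step of an analysis like this one, finding which metric moved the most between two weeks, can be sketched as follows. The dictionaries, metric names and numbers are made up for illustration, and `biggest_movers` is a hypothetical helper. Sorting by absolute change puts large drops and large gains side by side.

```python
def biggest_movers(prev_week, this_week, top=3):
    """Rank metrics by the size of their week-over-week percentage change.

    prev_week/this_week: {metric_name: value} dicts (hypothetical layout).
    Metrics with a zero or missing prior value are skipped.
    """
    changes = []
    for metric, new in this_week.items():
        old = prev_week.get(metric)
        if old:  # skip None and 0 to avoid division by zero
            changes.append((metric, (new - old) / old * 100))
    # Largest absolute move first, whether it went up or down.
    changes.sort(key=lambda item: abs(item[1]), reverse=True)
    return changes[:top]


# Made-up numbers, not the data from the analysis above.
previous = {"Keywords": 400, "Landing Pages": 250, "Visits/Keywords": 2.5}
current = {"Keywords": 500, "Landing Pages": 245, "Visits/Keywords": 2.6}
for metric, pct in biggest_movers(previous, current):
    print(f"{metric}: {pct:+.1f}%")
```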

Of course the next step would be to determine if certain pages or keyword modifiers were most responsible for this increase. Find the pattern and you have a shot at repeating it.

Graph Your SEO Metrics

If you’re more visual in nature, create a third tab and generate a graph for each metric. Put them all on the same page so you can see them together. This comprehensive trend view can often bring issues to the surface quickly. Plus … it just looks cool.

Add a Filter

If you’re feeling up to it you can create the same dashboard based on a filter. The most common filter would be conversion. To do so you build an Advanced Segment in Google Analytics that looks for any SEO traffic with a conversion. Apply that segment, repeat the Visits, Landing Pages and Keywords numbers and then generate new calculated metrics.

At that point you’re looking at these metrics through a performance filter.

The End is the Beginning

This SEO metrics dashboard is just the tip of the iceberg. You will still need detailed crawl and traffic reports. But if you start with the metrics outlined above, they should lead you to the right reports, because the questions they raise can only be answered by doing more due diligence.

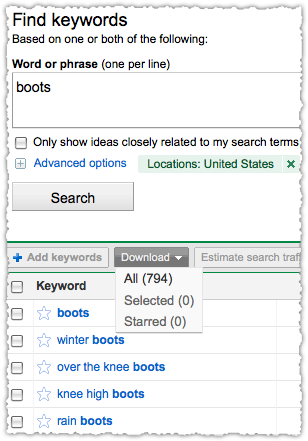

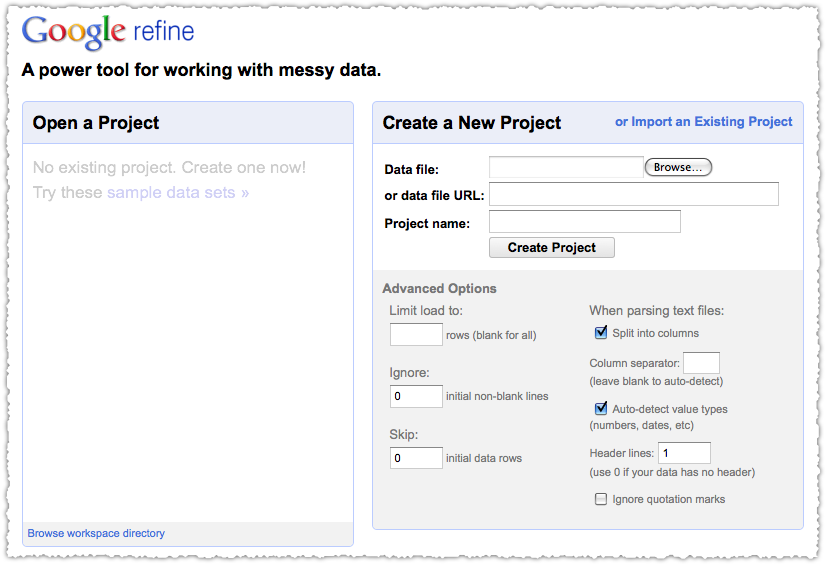

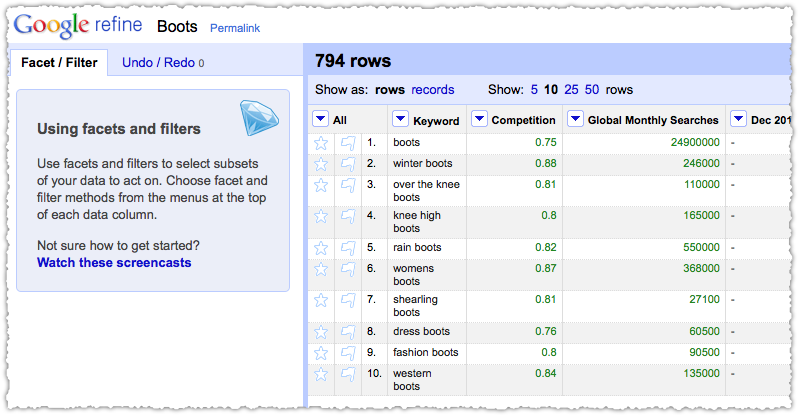

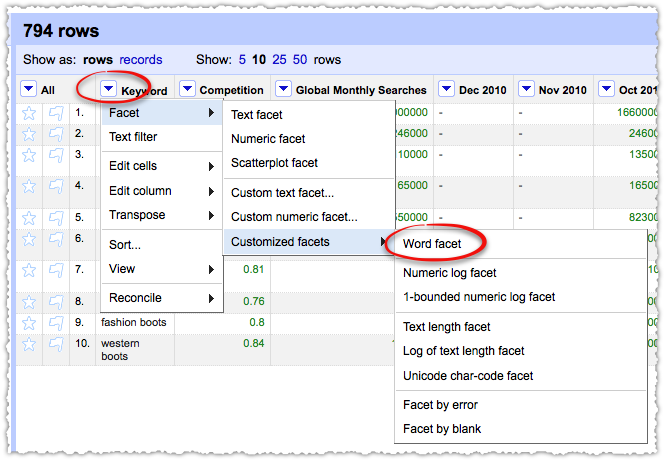

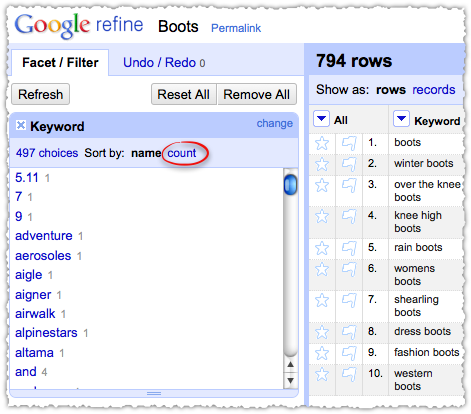

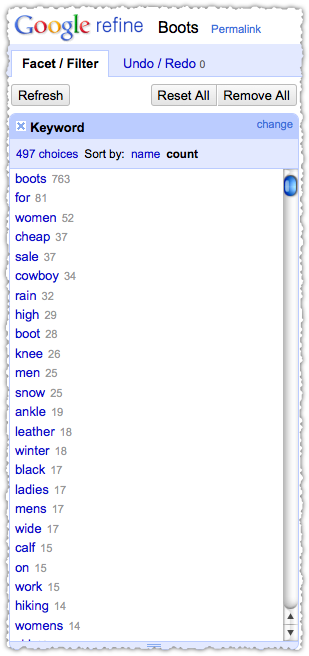

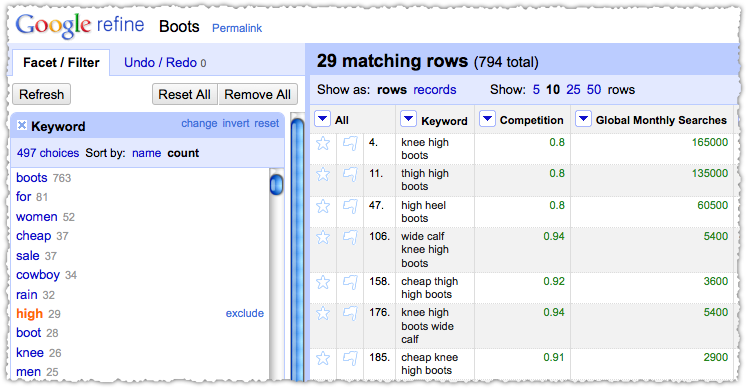

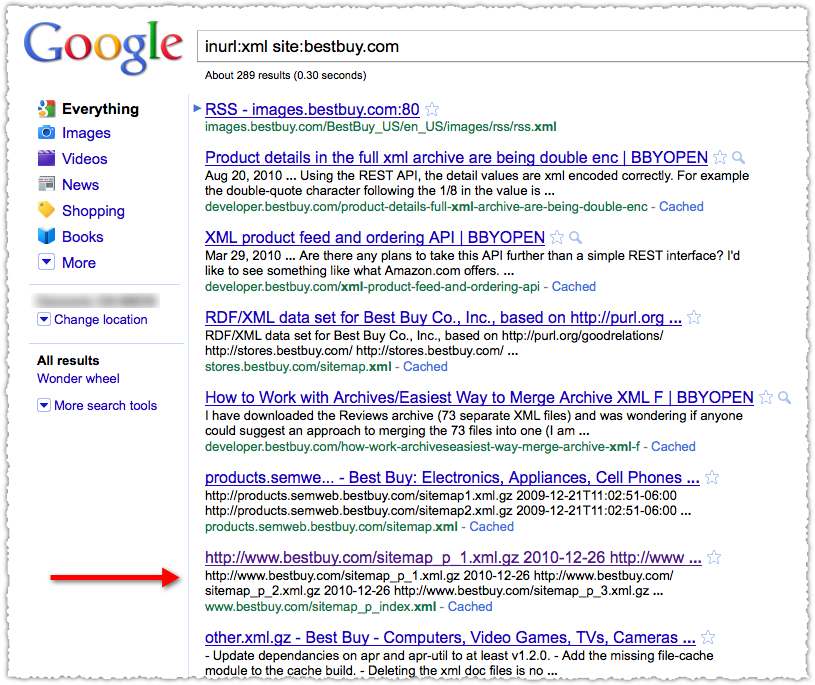

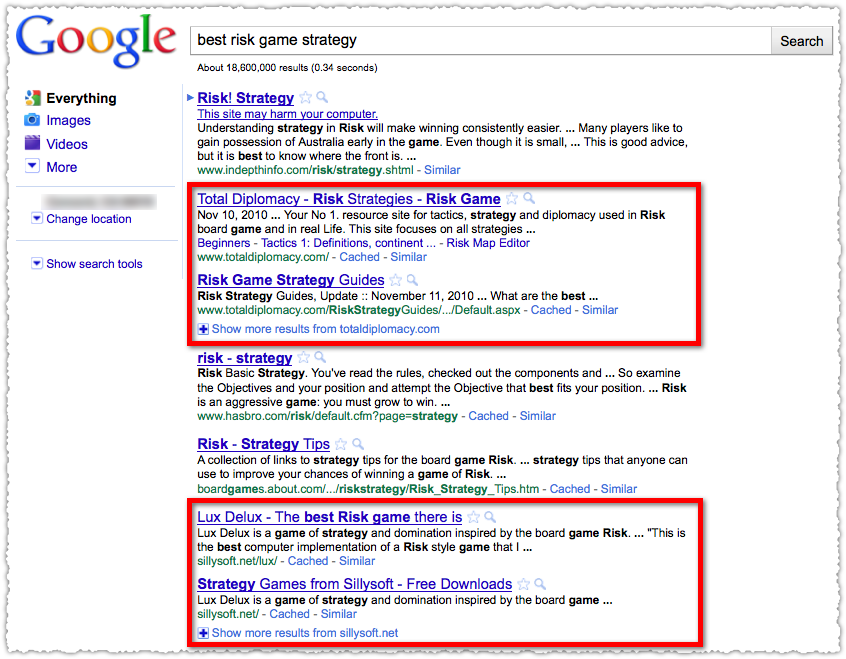

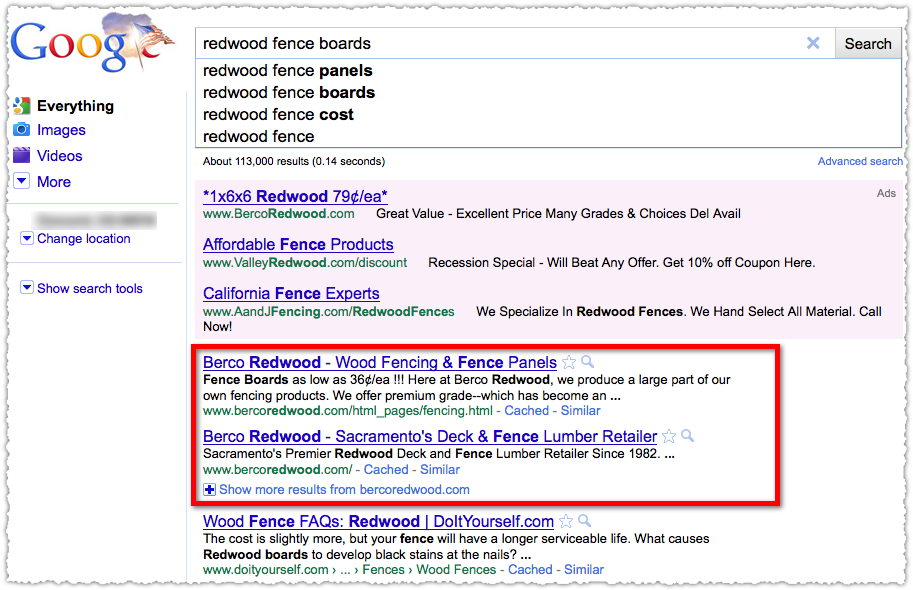

Keyword research is a vital component of SEO. Part of that research usually entails finding the most frequent modifiers for a keyword. There are plenty of ways to do this but here’s a new way to do so using Google Refine.
