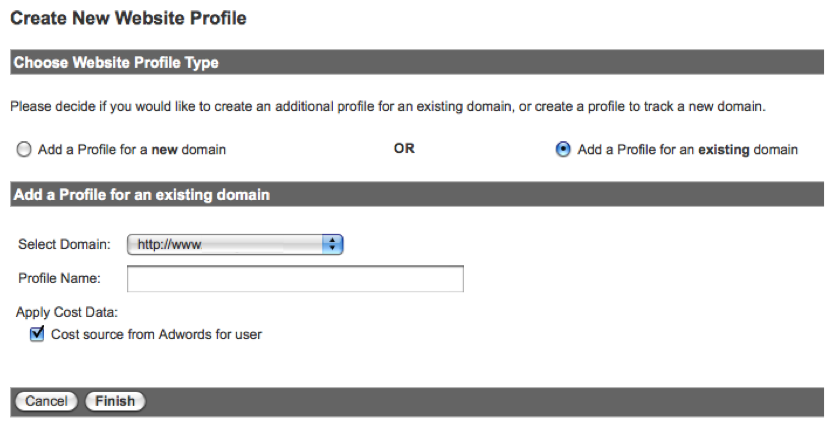

A few months ago I wrote about how you could track keyword rank in Google Analytics. I said I’d blog about how to create ranking reports based on the new data, but as I did due diligence on those reports, I found something much more interesting.

AJAX Search Results Performance

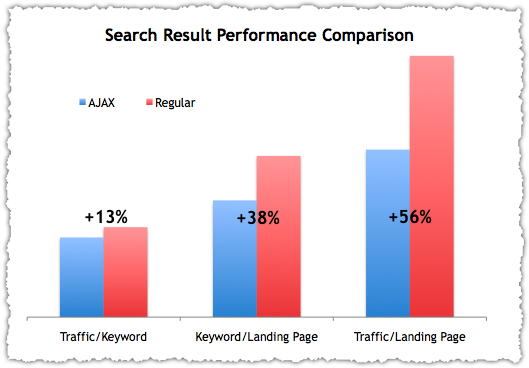

There is a profound difference in the performance of Google AJAX search results versus normal search results.

Standard search results drive 13% more traffic per keyword, 38% more keywords per landing page and 56% more traffic per landing page than their AJAX counterparts.

Creating The Search Result Comparison

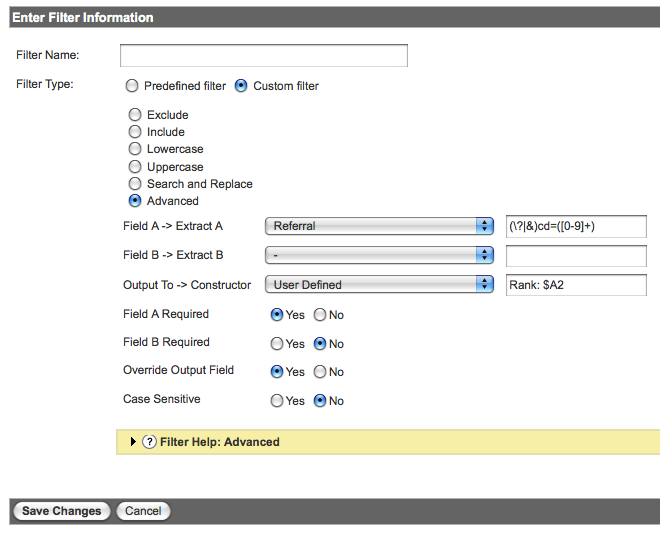

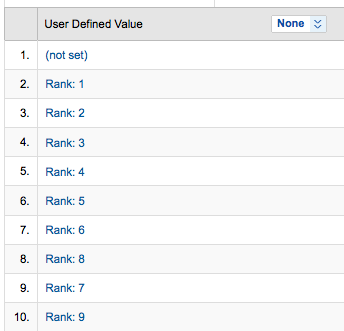

The Google Analytics rank hack captures a specific rank parameter present on AJAX search results. If that parameter doesn’t exist, no rank is reported for that keyword. After implementing the GA rank hack, you find that Google is serving AJAX results approximately 20% to 25% of the time. That estimate comes from comparing the traffic reported with a rank to the total traffic reported in the profile.
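As a rough sketch of that calculation, the AJAX share is just ranked visits divided by total visits for the same period. The function name and the numbers here are made up for illustration, and the sketch assumes both counts come from the same Google organic traffic in the profile.

```python
def ajax_share(ranked_visits: int, total_visits: int) -> float:
    """Fraction of visits that arrived with a rank value attached."""
    if total_visits <= 0:
        raise ValueError("total_visits must be positive")
    return ranked_visits / total_visits


# Hypothetical weekly numbers, for illustration only.
share = ajax_share(ranked_visits=2300, total_visits=10000)
print(f"{share:.1%}")  # prints 23.0%
```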

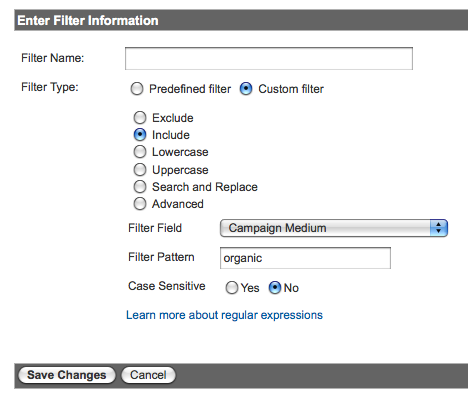

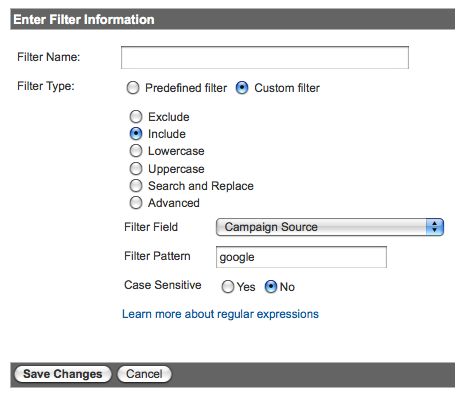

You can also create advanced segments that include or exclude the AJAX search results by using the user defined value (Rank:) implemented in the GA rank hack. This gives you the ability to easily compare the performance of AJAX versus normal search results.

Each week I capture the amount of traffic as well as the number of keywords and landing pages that drove that traffic. I then create calculated metrics for traffic per keyword, keywords per landing page and traffic per landing page. There’s no real right or wrong number for these metrics, but they can be quite useful in trend analysis and, in this instance, for comparison analysis.
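Here is a minimal sketch of those three calculated metrics and of the comparison between segments. The `WeeklySnapshot` class, the `pct_more` helper and every number in it are hypothetical; they only show the arithmetic, not the data from either site.

```python
from dataclasses import dataclass


@dataclass
class WeeklySnapshot:
    """One week of data for a single segment (hypothetical shape)."""
    visits: int
    keywords: int
    landing_pages: int

    @property
    def traffic_per_keyword(self) -> float:
        return self.visits / self.keywords

    @property
    def keywords_per_landing_page(self) -> float:
        return self.keywords / self.landing_pages

    @property
    def traffic_per_landing_page(self) -> float:
        return self.visits / self.landing_pages


def pct_more(a: float, b: float) -> float:
    """How much larger a is than b, as a percentage of b."""
    return (a - b) / b * 100


# Illustrative numbers only.
standard = WeeklySnapshot(visits=9000, keywords=3000, landing_pages=1000)
ajax = WeeklySnapshot(visits=2400, keywords=1000, landing_pages=500)

for metric in ("traffic_per_keyword",
               "keywords_per_landing_page",
               "traffic_per_landing_page"):
    diff = pct_more(getattr(standard, metric), getattr(ajax, metric))
    print(f"{metric}: standard is {diff:.1f}% higher")
```

Because traffic per landing page is the product of the other two metrics, the relative differences compound. With 13% more traffic per keyword and 38% more keywords per landing page, 1.13 × 1.38 ≈ 1.56, which is consistent with the 56% figure reported above.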

I’ve aggregated results from two large sites that come from very different verticals. Both showed similar results. I recognize that two is a small sample size so I encourage others to perform the same analysis to increase the confidence level of these results.

What’s Going On With AJAX Results?

It’s difficult to say with certainty why AJAX search results would perform so differently from normal search results.

I first compared the Browser and OS profile for each to see if there was a distribution discrepancy. Sure enough, there is. Normal results are presented with far greater frequency (almost to exclusion) for the following:

- Safari/Macintosh

- Chrome/Windows

- Firefox/Macintosh

- Safari/iPhone

- Safari/Android

- Safari/iPad

- Safari/iPod

- Chrome/Macintosh

Yet, when I isolate Internet Explorer/Windows (sadly, the dominant combination) I see the same pattern for each result type. So while the difference in Browser and OS is interesting, it doesn’t seem to be the reason for the performance differential between AJAX and normal search results.

Could it be algorithmic? It seems far-fetched to think Google would implement a different algorithm for AJAX search results. Nevertheless, let’s put it down using the ‘no idea is a bad idea’ brainstorming methodology.

Perhaps it’s related to the presentation of search results. This makes a bit of sense since they are being rendered differently. But most people can’t tell the difference between the two, so I don’t think the way in which they are rendered would result in different user behavior.

But what about the intersection between algorithm and presentation? What if the implementation of Onebox, personalization or AdWords varied by type of search result? Could Google be using AJAX search results for testing purposes?

Further analysis leads me to believe this might be true.

The June Gloom Update

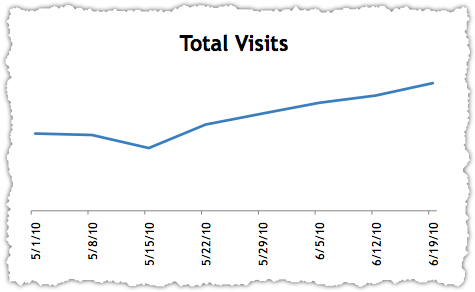

The thread of this analytic sweater began when I noted that total traffic from Google was going up but ranked traffic was going down.

The opposing trend lines don’t make sense. This strange trend began around June 1st which is why I call it June Gloom.

Of course, a simple explanation could be that Google was showing fewer AJAX results. So I dug further.

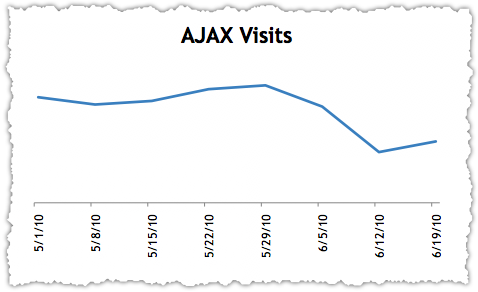

Ranked Traffic on Google AJAX Results

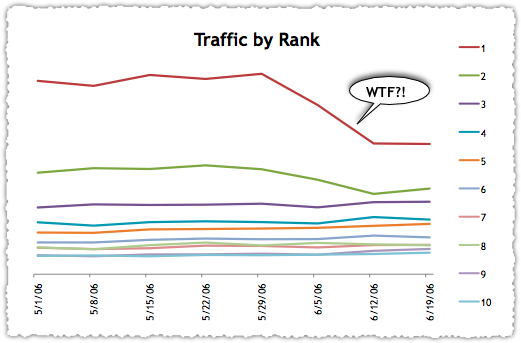

The great thing about the Google Analytics rank hack is that you can track the trend of each position.

The trend for keyword rank 1 and 2 dropped dramatically (~40% and ~25% respectively) while all other ranks were flat or improved during the same period. The drop in traffic from rank 1 and rank 2 far surpassed the increases in traffic from all other ranks combined. Hence, the drop in overall AJAX results traffic.
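To make that arithmetic concrete, here is a small sketch that computes the change in traffic for each rank and the net change across all ranks. The visit counts are invented so that ranks 1 and 2 fall by 40% and 25%; they are not the actual figures from either site.

```python
def rank_changes(pre: dict, post: dict) -> dict:
    """Return {rank: (absolute change, percent change)} between two periods."""
    changes = {}
    for rank, before in pre.items():
        after = post.get(rank, 0)
        delta = after - before
        # Percent change is undefined when the starting value is zero.
        pct = delta / before * 100 if before else None
        changes[rank] = (delta, pct)
    return changes


# Hypothetical visits by rank, before and after the drop.
pre = {1: 1000, 2: 400, 3: 200, 4: 100}
post = {1: 600, 2: 300, 3: 220, 4: 110}

changes = rank_changes(pre, post)
net = sum(delta for delta, _ in changes.values())

for rank, (delta, pct) in sorted(changes.items()):
    print(f"rank {rank}: {delta:+d} visits ({pct:+.0f}%)")
print(f"net change: {net:+d} visits")
```

In this made-up example the gains at ranks 3 and 4 add up to 30 visits, far short of the 500 lost at ranks 1 and 2, so total ranked traffic still falls.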

Clearly, this same pattern is not happening with normal search results. If it were, the total traffic from Google would be going down. Instead it’s going up. This also indicates that the drop is not about how often AJAX search results are presented.

What Caused June Gloom?

Maybe the tracking parameter is broken. It could be, but that would mean that it was broken on just keywords ranked first and second, and that it was only broken on those ranks some of the time. This isn’t impossible, but it certainly seems improbable. Inspection of AJAX search results didn’t turn up any missing rank parameters.

I decided to check a handful of high volume keywords which had a number 1 ranking. An advanced segment for rank 1 can quickly identify these keywords. On both sites, the top performing keywords did not show any impact from June Gloom.

I double checked this by using the new Top Queries report in Google Webmaster Tools. Downloading and comparing the weekly data, I rounded the position number and then created a pivot table to look at impressions and clicks for keywords in the first position. There was virtually no difference in impressions, clicks or click rate pre and post June Gloom.
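The rounding and pivot step can be sketched without a spreadsheet. The row layout (`avg_position`, `impressions`, `clicks`) is an assumed shape for the downloaded data, not the actual export format, and the values are invented. Python’s built-in `round` rounds halves to the nearest even number, so the sketch uses an explicit half-up helper, which for positive positions matches a spreadsheet’s ROUND.

```python
import math
from collections import defaultdict


def round_half_up(value: float) -> int:
    """Round a positive number to the nearest integer, with .5 going up."""
    return math.floor(value + 0.5)


def totals_by_position(rows: list) -> dict:
    """Sum impressions and clicks for each rounded position."""
    totals = defaultdict(lambda: {"impressions": 0, "clicks": 0})
    for row in rows:
        position = round_half_up(row["avg_position"])
        totals[position]["impressions"] += row["impressions"]
        totals[position]["clicks"] += row["clicks"]
    return dict(totals)


def click_rate(total: dict) -> float:
    """Clicks divided by impressions, or 0.0 when there are no impressions."""
    if not total["impressions"]:
        return 0.0
    return total["clicks"] / total["impressions"]


# Hypothetical rows for one week.
week = [
    {"avg_position": 1.2, "impressions": 1000, "clicks": 300},
    {"avg_position": 1.4, "impressions": 500, "clicks": 100},
    {"avg_position": 2.5, "impressions": 400, "clicks": 40},
]

first = totals_by_position(week)[1]
print(first, f"{click_rate(first):.1%}")
```

Running the same function on a week before and a week after the change gives two sets of position 1 totals to compare.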

This doesn’t mean it’s not happening; it only means that it’s not happening on the top queries. Remember, the Top Queries report is not all-inclusive and it probably draws from all results – both AJAX and standard.

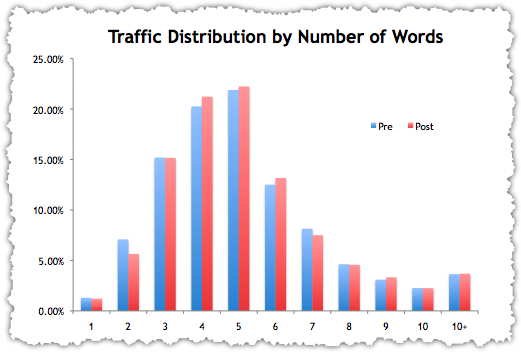

If top queries were unaffected, it followed that long tail keywords were being impacted by June Gloom. This could be based on keyword volume, keyword type or number of words in the query. The latter was the easiest to test.

By using the Google Analytics API we grabbed keywords that had delivered traffic at rank 1. We used an Excel function to count the number of words and then created a pivot table showing the sum of clicks by word count. We then compared the distribution of traffic by word count in a pre and post June Gloom week. The result? The distribution was virtually the same.
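For anyone who wants to repeat the word count test outside Excel, here is a minimal sketch. It assumes you already have a mapping of keyword to visits for rank 1 traffic in each week; the function names and the sample keywords are hypothetical, and the API call that would fetch the data is left out.

```python
from collections import Counter


def share_by_word_count(keyword_visits: dict) -> dict:
    """Return the share of visits contributed by each query word count."""
    totals = Counter()
    for keyword, visits in keyword_visits.items():
        totals[len(keyword.split())] += visits
    grand_total = sum(totals.values())
    if grand_total == 0:
        return {}
    return {words: visits / grand_total for words, visits in sorted(totals.items())}


def largest_shift(pre: dict, post: dict) -> float:
    """Largest absolute difference in share across all word counts."""
    word_counts = set(pre) | set(post)
    return max((abs(pre.get(n, 0.0) - post.get(n, 0.0)) for n in word_counts),
               default=0.0)


# Invented rank 1 keywords and visits for two weeks.
pre_week = {"widgets": 30, "blue widgets": 50, "cheap blue widgets": 20}
post_week = {"widgets": 28, "blue widgets": 52, "cheap blue widgets": 20}

pre_share = share_by_word_count(pre_week)
post_share = share_by_word_count(post_week)
print(pre_share)
print(post_share)
print(f"largest shift: {largest_shift(pre_share, post_share):.1%}")
```

A small largest shift means the mix of short and long queries barely moved between the two weeks, which is the outcome described above.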

So we can only theorize that long tail keywords based on volume or type are being impacted by June Gloom. What would do that? Greater AdWords reach? More personalization? A higher rotation of Onebox and/or 7-pack listings? Or maybe it is algorithmic. Unfortunately, we have been unable to find the smoking gun – that query or queries that present differently on each type of search result.

Or perhaps we’ve botched it – made a gross assumption or overlooked the obvious. Once again, peer review is encouraged.

Who’s this we? This is a good time to mention that my colleague Jeremy Post provided invaluable assistance in data gathering and in talking through the analysis.

June Gloom and Caffeine

These days no change in traffic can escape the Caffeine question. Strangely, there is some evidence of Caffeine in the data.

The number of landing pages driving total traffic has increased. Landing pages are up nearly 20% for standard search results and down only 3% for AJAX search results. In fact, one of the two sites saw a small increase in landing pages from AJAX results. Within AJAX results, the number of landing pages was the metric least affected, compared with the changes seen in traffic and keywords.

This leads me to believe that the improvements in crawl and indexation brought on by Caffeine may be adding more landing pages. However, these new landing pages haven’t yet acquired a cluster of keywords. This drives down the keywords per landing page metric. Essentially, the rate at which keywords are being added is lower than the rate at which landing pages are being added.

The difference on AJAX search results is that there were substantial keyword losses even as new landing pages were being added. June Gloom may actually have been tempered by the impact of Caffeine.

AJAX Results and June Gloom

The evidence suggests there is a difference between AJAX and normal search results. And June Gloom only serves to reinforce this theory.

SEO is supposed to be a zero sum game. One site gains traffic at the expense of another. Are other sites benefiting from AJAX results? What sites are now getting more traffic from those top two positions?

Of course, AJAX results and June Gloom could be the vehicle Google is using to test Onebox, 7-pack, personalization and universal search options. SEO is still a zero sum game … but the game board is expanding.

Are you ready?

Your marketing plan should be like a Swiss Army Knife with SEO being just one of the tools. Not only that, but you need to use that tool the right way. Getting a whole bunch of traffic that doesn’t convert isn’t going to help your business. Don’t neglect the other tools at your disposal. In fact, some of those other tools might actually help your SEO efforts in the long run.
