The real estate bubble popped. Will the link bubble be next?

The real estate bubble was the product of greed, low interest rates, loose lending policies and derivatives. Nearly anyone could get a house, and people bought into the idea that real estate would always be a good investment. The result of this irrational exuberance? Homes were valued far higher than they were worth.

The Link Bubble

Are links that different from real estate?

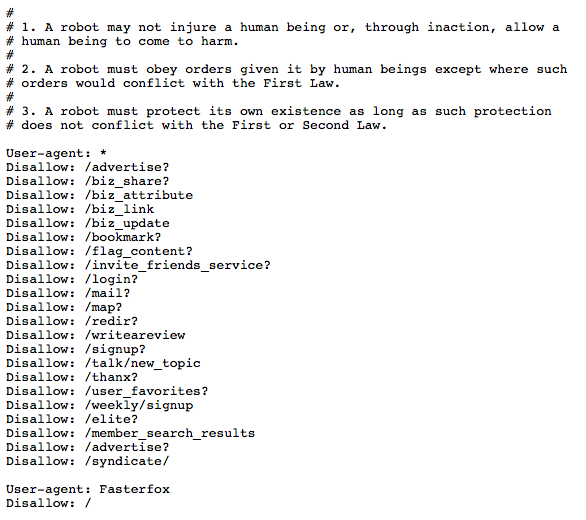

Links have traditionally been a reliable sign of trust and authority because they were given out judiciously, a lot like mortgages. For a long time link policies were tight. You needed references and documentation before you earned that link.

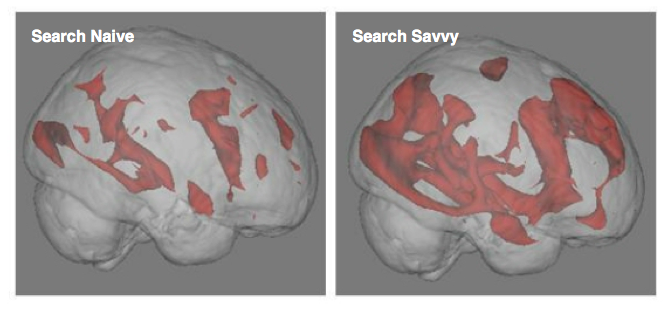

In addition, links weren’t looked upon as an investment tool. The concept that links influenced SEO hadn’t taken hold. The motivation behind links was relatively pure and that meant Google and others could rely on them as an accurate signal of quality.

Links or Content?

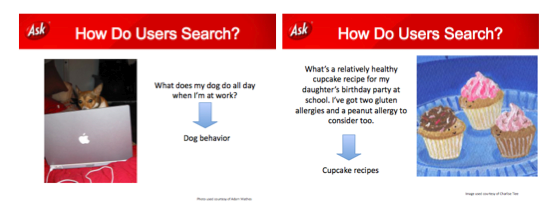

Many have recently bemoaned the death of handcrafted content and the rise of content farms as a threat to search quality. But is content really the problem?

Content has little innate value from a search perspective. Yes, search engines glean a page's topic from its text. It's like knowing the street address of a house. You know where it is and, probably, a bit about the neighborhood. But it doesn't tell you about the size, style or quality of the home.

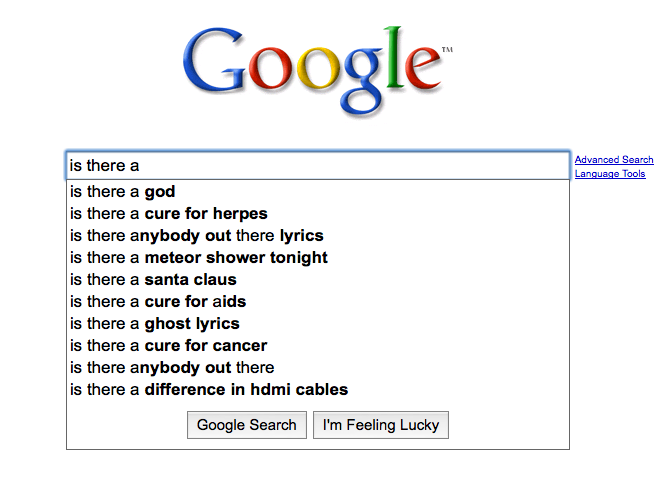

Long tail searches are akin to searching for a house by street address. So, content without links may sometimes produce results. But the vast majority of searches will require more information. That’s where links come in.

McDonald’s Content

Let's switch analogies for a moment. Some have called Demand Media the McDonald's of content. There's a bit of brilliance in that comparison, but not in the way most think.

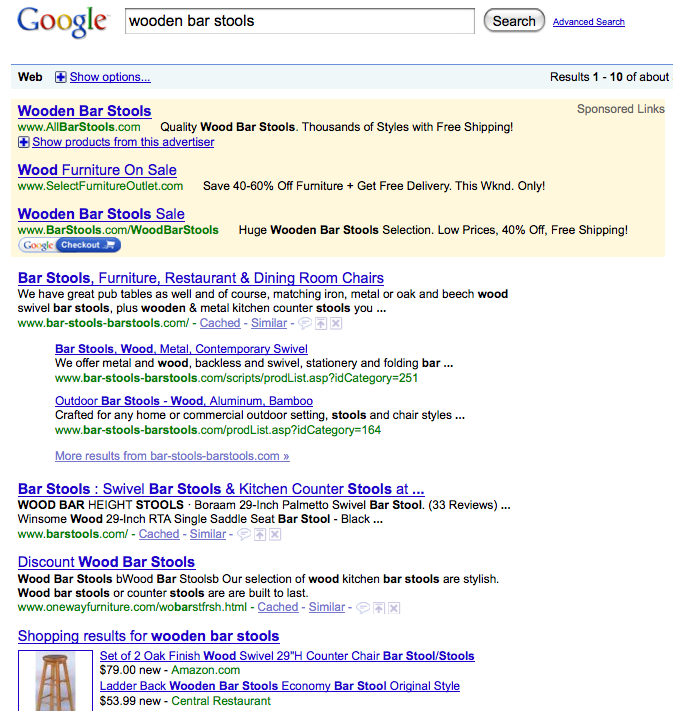

Both McDonald’s and Demand Media crank out product that many would argue is mediocre. Offline, McDonald’s buys the best real estate and uses low prices, brand equity and marketing to ensure diners select them over competitors.

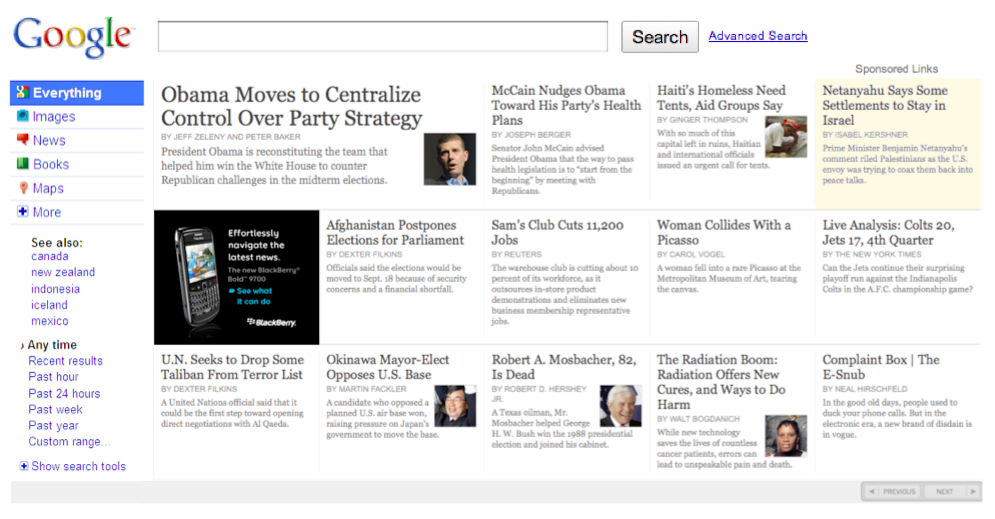

Online, Google holds the prime real estate. But that real estate can't be purchased outright. And in the absence of price, we're left with brand equity and marketing. Online, brand equity translates into trust and authority. And links are the marketing that helps build and maintain that brand equity.

Demand Media has brands (their words) that give it automatic trust and authority. Publish something on eHow and it automatically inherits the domain’s trust and authority, built on over 11 million backlinks.

Writers for Demand Media are offered revenue-share opportunities on their articles. Here's one of the tips they give writers to boost traffic to their articles.

2) Link to your article from other websites.

Link from your own website or blog, from a message board or forum, from your social networking profile on MySpace or Facebook and more. The more high quality links to your article there are on the web, the more highly a search engine will rank it.

Demand Media combines the installed brand equity of multiple sites (which happen to be cross-linked) with an incentive to contributors to generate additional links. The content doesn’t have to be great when links secure premium online real estate.

There might be something better down the road, but McDonald’s is always right there at the corner.

Link Inflation

The last few years have produced major changes surrounding links. Linkbuilding is now a common term and strategy. A number of notable SEO firms tout links as the way to achieve success.

Linkbuilding firms sprang up. Linkbuilding software in various shades of gray was launched. Paid links of various flavors flourished. Social bookmarking and networking accelerated link inflation. And new business models like Demand Media emerged to take advantage of the link economy, creating a collection of sites and implementing incentives that produce something resembling derivatives.

Link policies went from tight to loose and people got greedy. Anyone can get links these days. So what’s the natural result of this link activity?

Link Recession

The value of links is inflated, and at some point the system will correct. The algorithm will change to address the abuse of links. Unlike the Federal Reserve, Google probably isn't looking for a soft landing, nor is it going to extend a bailout.

Some links will continue to matter. Links that are in the right neighborhood. The ones with tree-lined streets, good schools and low crime. But will links from cookie-cutter planned communities still be valuable? Strong links will mean more because they'll hold their value, while many more links will be neutralized.

I’m no Nouriel Roubini, but I do believe that a major link correction is coming in 2010. Google must address the link bubble to make search results better.