Is Google’s Vince Change SEO Affirmative Action?

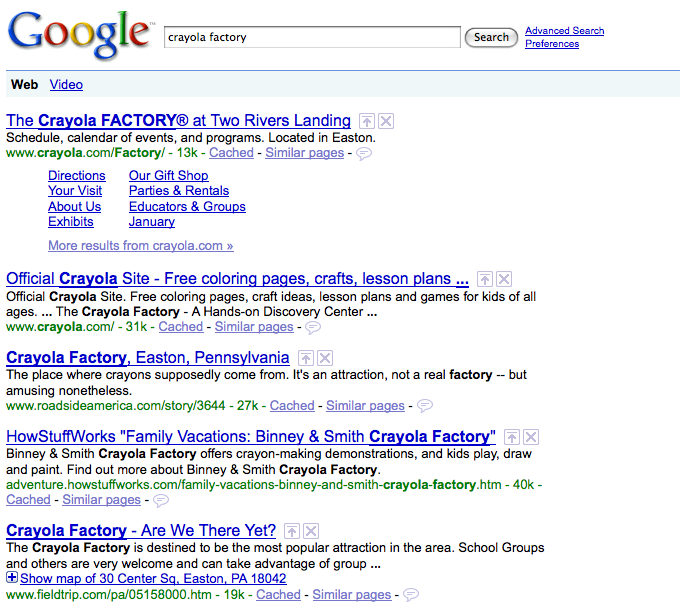

The recent ‘Vince Change’ by Google has been discussed, debated and well documented by Aaron Wall. In essence, the Vince Change gave brands more trust for short queries.

At first, the Vince Change didn’t bother me too much. Change is part of the business (it keeps many of us in business) and it seems a waste to get worked up over such things. But the more I thought about it, the more it bugged me.

Google Taxonomic Search

Late last year I theorized that Google might be applying taxonomic limits to certain search results. I wrote about the topic in response to some reports of unusual SERP behavior for product searches.

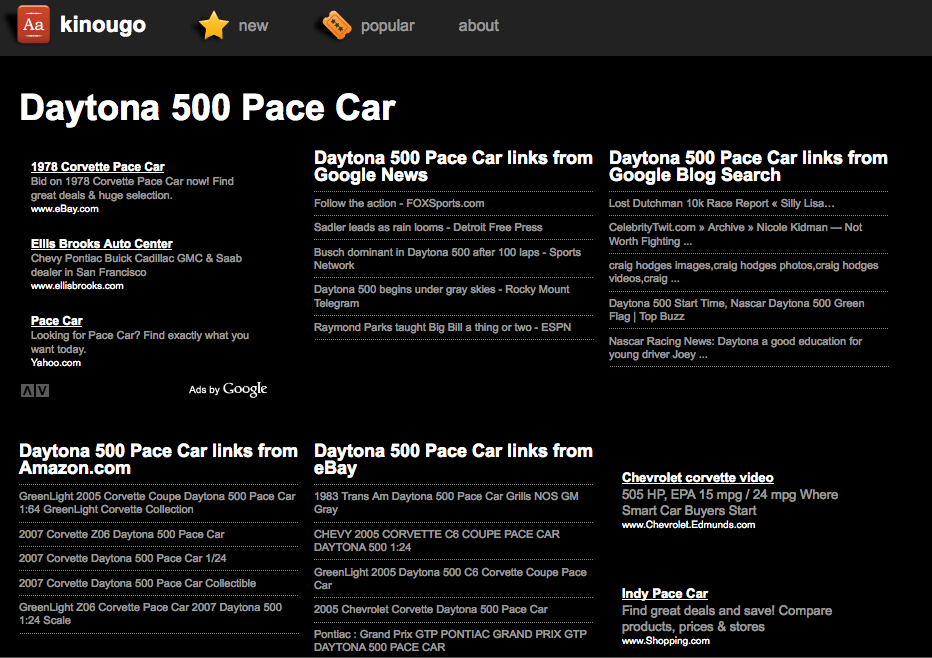

The general idea surrounding taxonomic search is that Google wants an even distribution of site types on certain searches. For products you’d likely want the manufacturer as well as a few retailers, a few review sites, a few shopping comparison sites and a few discussion forums. Having the results dominated by any one type (regardless of SEO strength) would be a suboptimal user experience.
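To make the idea concrete, here is a minimal sketch of what a taxonomic limit could look like: walk the results in relevance order and skip any site whose type has already hit its cap. This is purely illustrative and says nothing about how Google actually works. The function name `diversify`, the site-type labels, the cap values and the example domains are all assumptions of mine.

```python
from collections import Counter

def diversify(ranked_results, caps, limit=10):
    """Build a results page from (url, site_type) pairs sorted by relevance.

    caps maps a site type to the maximum number of slots it may occupy.
    Types without an entry in caps are only bounded by the page limit.
    All names and values here are hypothetical.
    """
    counts = Counter()
    page = []
    for url, site_type in ranked_results:
        if len(page) == limit:
            break
        if counts[site_type] >= caps.get(site_type, limit):
            continue  # this type is already at its cap, so skip the result
        counts[site_type] += 1
        page.append((url, site_type))
    return page

# Hypothetical product search where retailers dominate on raw SEO strength.
ranked = [
    ("retailer-a.example", "retailer"),
    ("retailer-b.example", "retailer"),
    ("retailer-c.example", "retailer"),
    ("maker.example", "manufacturer"),
    ("reviews.example", "review"),
    ("retailer-d.example", "retailer"),
    ("forum.example", "forum"),
]
caps = {"retailer": 2, "manufacturer": 1, "review": 2, "forum": 2}

page = diversify(ranked, caps, limit=5)
for url, site_type in page:
    print(site_type, url)
```

With these made-up caps, the third and fourth retailers are passed over even though they outrank the forum on relevance, which is exactly the kind of SERP behavior that would look odd from a pure SEO perspective.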

I’m a bit ambivalent about the idea of taxonomic search. It probably would provide a better user experience and it simply raises the bar for good SEO. I’m still not sure Google is doing this, but it’s more intriguing to think about in light of Google’s Vince Change.

Commercial Results

I kept mulling over the Vince Change, both in what it was and how it might have been implemented. Matt Cutts, whom I like a lot, provided an answer that discussed the former but not the latter.

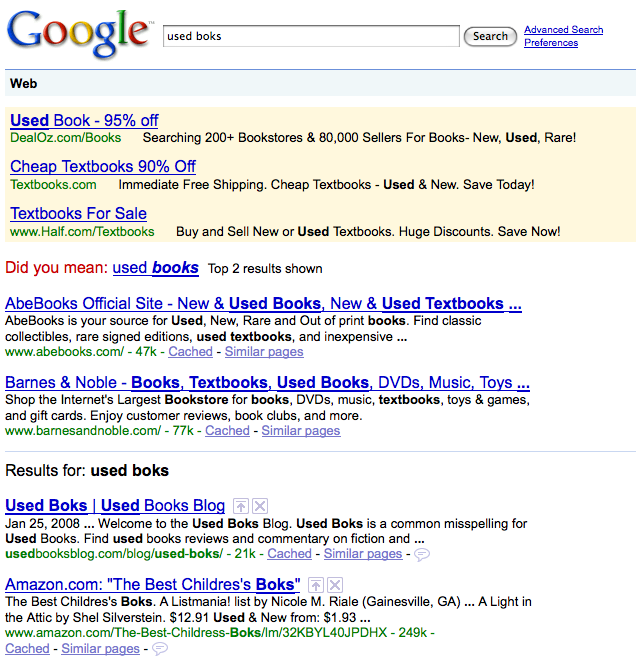

The part that caught my attention was when Matt discussed the SERP for ‘eclipse’ and specifically his mention of “commercial results”. So is it not brand but commercial results that were impacted by the Vince Change? Is there a simple taxonomy of commercial versus non-commercial sites?

But the RankPulse results don’t really bear that out. There were plenty of other commercial sites there previously; they just didn’t have the same name recognition. I couldn’t shrug off the Vince Change.

Is trust assigned or earned?

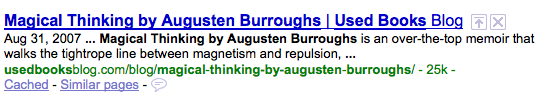

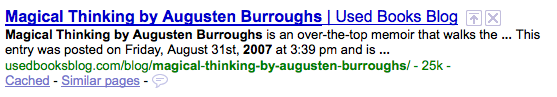

If the Vince Change was about trust and authority, how did these brand sites receive trust and authority? Was it assigned or earned? If it was earned, how exactly was it earned? If it had been earned through SEO (backlinks, on-page optimization, etc.), shouldn’t they have already been highly ranked?

I found it difficult to determine how you’d ‘turn the knob’ of trust and authority and only have it impact certain types of sites. “Certain types of sites” would lead naturally to a taxonomic structure of some sort. But that would be a transparent proxy for brand, and I want to believe Matt when he says they didn’t target brands.

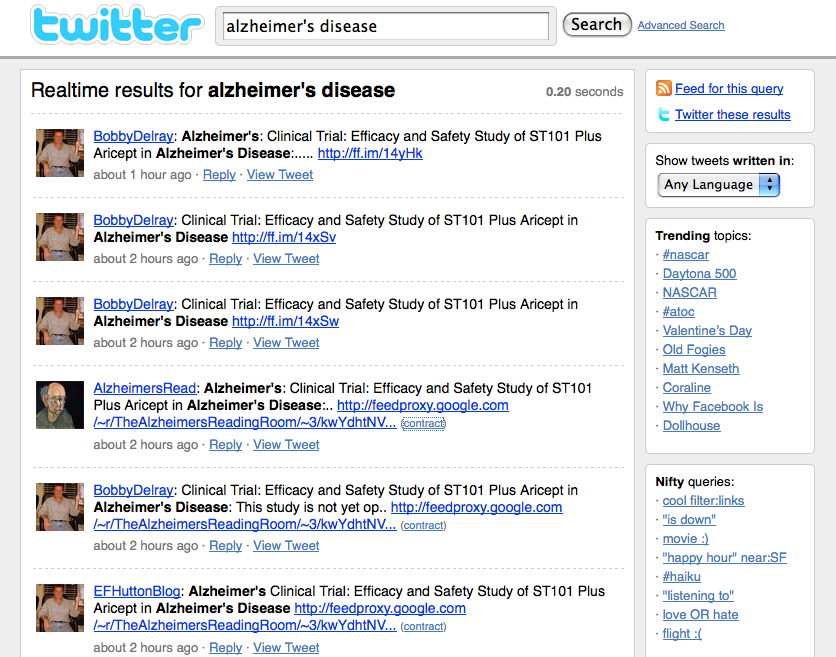

Instead, did Google simply assign this trust and authority? A type of SEO affirmative action. If so, based on what criteria? If it’s not ‘brand’, what is it? It doesn’t seem like traffic, nor does it seem to have roots in traditional SEO. As of SMX West it wasn’t SearchWiki data, but maybe that’s changed since. Google does move fast.

Or maybe it’s another piece of data? Advertiser saturation or CTR via DoubleClick? Could it be about money?

Why the Vince Change matters

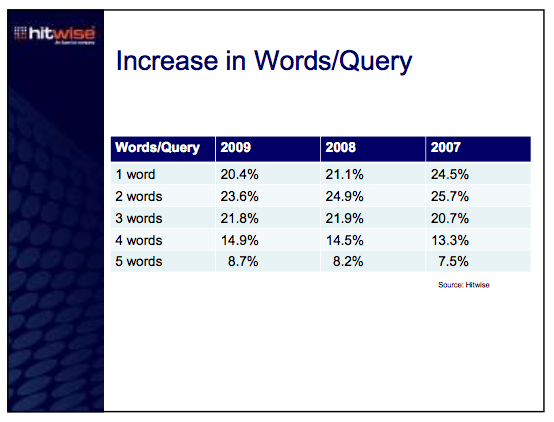

The Vince Change seems to target very broad queries. While short queries are still a big and important part of SEO, the trend is toward longer search queries. Yet the inability to determine how trust and authority pooled around these brands is unsettling. What prevents the same type of change from being implemented on long-tail queries?

Brands should be required to earn their trust on a level playing field.

Are we … being hustled?