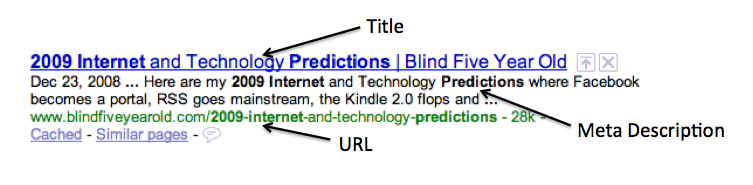

The SEO Holy Trinity. In the name of the Title, and of the URL, and of the Meta Description.

If you’re just getting started with search engine optimization (SEO), you must get these three things right. Here’s the Blind Five Year Old explanation of why these three elements are critical to your SEO success.

Title

A search engine walks into a library. No, it’s not the set-up line to a joke. It’s an analogy for how a search engine will look for content. Everyone likes new content, even search engines, so it’ll walk straight over to the new releases section.

Now, remember, a search engine is like a five-year-old. So when it wanders over to the new releases, you need to make sure it can understand what your page is about. The title is the best way to communicate what your post or page is about.

A five-year-old probably won’t care who wrote the book, so don’t put your name, company or blog name at the beginning of the title. If you include it at all, put it last.

A five-year-old isn’t going to get your clever double entendre or understand irony. Keep that stuff in the body of your content and write your title as you would for a children’s book. For example, what do you think Frog and Toad Are Friends is about? Yeah, probably a frog and a toad who are friends. Bottom line: keep it simple.

Keep it brief. Search engines, like most five-year-olds, have a short attention span. It’s not that they can’t read all the words, but Google will display only about 65 characters of your title to people looking for your content, so keep it under that limit. If your brand is at the end of the title, you can run just past the 65-character limit and sacrifice the brand name to the HTML ether.
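If you want to check titles in bulk, a small script can apply the rule above. This is a minimal sketch, assuming the 65-character figure from this post (Google’s actual cutoff may differ) and a hypothetical " | " separator between the title and a trailing brand name; the function name `check_title` is illustrative, not part of any tool.

```python
# Hypothetical helper: checks a title against the ~65-character display limit
# described in this post. The limit and the " | " brand separator are assumptions.
DISPLAY_LIMIT = 65


def check_title(title: str, brand: str = "", separator: str = " | ") -> str:
    """Return 'fits', 'brand truncated' or 'too long' for a page title."""
    full = f"{title}{separator}{brand}" if brand else title
    if len(full) <= DISPLAY_LIMIT:
        return "fits"
    if len(title) <= DISPLAY_LIMIT:
        # Only the trailing brand falls past the limit, which the post treats as acceptable.
        return "brand truncated"
    return "too long"


print(check_title("Frog and Toad Are Friends", brand="Blind Five Year Old"))
```

Putting the brand last is what makes the "brand truncated" case harmless: the words that describe the page stay visible.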

URL

Let’s extend the library analogy above. You can think of the URL as the spine of the book. A search engine has less chance of understanding what that book (your page or post) is about if you don’t have a descriptive URL.

Imagine if all a search engine saw on the spine of your page was a Dewey Decimal classification sticker. Here are some examples of what that might look like on your site:

http://www.domain.com/p=124

http://www.domain.com/viewDocument.aspx?d=903

Now, if you were a high-functioning adult, you might be able to interpret that, or you might find your way to the card catalog to research what it meant.

But search engines are like five-year-olds!

They’re not going to do that. They aren’t going to understand it, and they’re not going to take the time to investigate or research. The search engine will simply move on to something that looks more interesting and is easier to understand.

Instead, use your title (minus your brand name, if you’re using one) as your URL. Simply put hyphens between the words and you’ve created a URL that a five-year-old will understand.

If you can, don’t bury the page in lots of directories. Make your URL as flat as possible while retaining the context. For instance, which of the two URLs below is easier to understand at a glance?

http://www.domain.com/toys/non-electronic/animals/stuffed/bears/brown/paddington.htm

http://www.domain.com/toys/paddington-bear-stuffed-animal.htm

In a pinch, you can remove words that don’t add meaning. For example, you could drop ‘the’ or ‘and’ in many cases to make the URL even more concise.
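Turning a title into a hyphenated URL can be automated. The sketch below is one way to do it, assuming a hypothetical stop-word list (the post mentions ‘the’ and ‘and’; ‘a’ and ‘of’ are added as an assumption) and a single context directory, as in the Paddington example. The names `slugify` and `build_url` are illustrative; many CMS platforms generate slugs on their own.

```python
import re

# Assumed stop words: 'the' and 'and' come from the post, 'a' and 'of' are extras.
STOP_WORDS = {"the", "and", "a", "of"}


def slugify(title: str, drop_stop_words: bool = False) -> str:
    """Turn a page title into a lowercase, hyphen-separated URL slug."""
    # Drop straight and curly apostrophes so "Let's" becomes "lets", not "let-s".
    text = title.lower().replace("'", "").replace("\u2019", "")
    # Treat any other run of non-alphanumeric characters as a word break.
    words = re.sub(r"[^a-z0-9]+", " ", text).split()
    if drop_stop_words:
        words = [w for w in words if w not in STOP_WORDS]
    return "-".join(words)


def build_url(domain: str, section: str, title: str) -> str:
    """Build a flat URL: one context directory followed by the slug."""
    return f"http://{domain}/{section}/{slugify(title, drop_stop_words=True)}.htm"


print(build_url("www.domain.com", "toys", "Paddington Bear Stuffed Animal"))
```

Keeping one directory (`toys`) preserves context while avoiding the deep `non-electronic/animals/stuffed/...` nesting shown above.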

Meta Description

You might have read elsewhere that the meta description doesn’t matter. Don’t believe it for a second! The meta description is important because it’s your chance to market your content.

Let’s use the library analogy again. Readers might scan the book’s title, which tells them a little about the book’s content. Okay, it’s about a toad and a frog who are friends but … what do they do? The meta description is the blurb on the back of the book that tells you more.

The meta description is the place to entice people to click on the search result. You might rank well for a term, but the next step is getting people to click. Sure, a higher rank gives you a much better chance, but the text you use in the meta description does matter.

Try to get your keyword phrase in there, twice if at all possible. Why? Because Google bolds the search terms in your result. The bold styling reassures searchers that they’ve found the right result and draws the eye to that listing.
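A quick way to check whether a draft meta description includes the keyword phrase twice is to count whole-phrase matches. This is a minimal sketch; the function name and the sample description are hypothetical, and it only counts matches, it does not predict how Google will bold the result.

```python
import re


def keyword_count(description: str, phrase: str) -> int:
    """Count case-insensitive, whole-phrase occurrences of a keyword phrase."""
    # re.escape keeps punctuation in the phrase from being read as regex syntax;
    # \b stops the phrase from matching inside a longer word.
    pattern = r"\b" + re.escape(phrase) + r"\b"
    return len(re.findall(pattern, description, flags=re.IGNORECASE))


draft = "My 2009 internet predictions: ten 2009 Internet Predictions you can bet on."
print(keyword_count(draft, "2009 Internet Predictions"))
```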

So write something that tells searchers why they want to come to your page. Make it exciting or interesting. Tell them what they’ll gain from visiting your page. Sell your content.

Putting It All Together

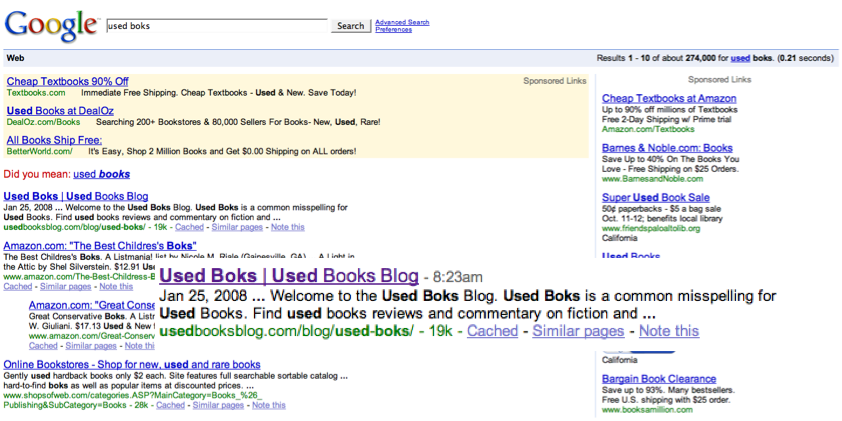

So here’s what the SEO Holy Trinity winds up looking like in Google.

This page or post was optimized for the phrases ‘2009 Internet Predictions’ and ‘2009 Technology Predictions’. (I could have done a post for each one to be even more focused but … time is not infinite!) Do the searches for yourself and see how I rank.

Now, this isn’t the end of SEO. You’ll need to do more to achieve high rankings and traffic. But the SEO Holy Trinity is the foundation you must have to ensure success.

In the name of the Title, and of the URL, and of the Meta Description.

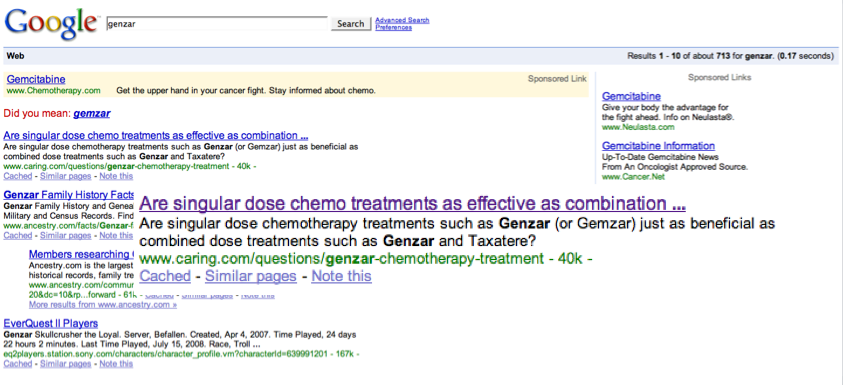

Not everyone is the world’s best speller, and even those who are sometimes hit the wrong key and wind up searching for a misspelled keyword. It’s human nature, and there’s no reason why you shouldn’t optimize for the most common misspellings. And there’s an easy way to target these misspellings without making yourself look like a fool.