Do I think Google’s policy around encrypting searches (except for paid clicks) for logged-in users is fair? No.

But whining about it seems unproductive, particularly since the impact of (not provided) isn’t catastrophic. That’s right, the sky is not falling. Here’s why.

(Not Provided) Keyword

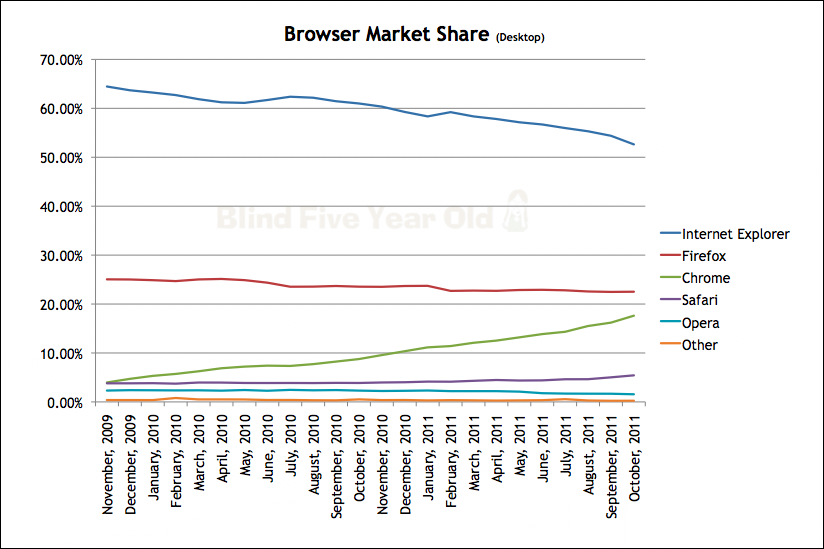

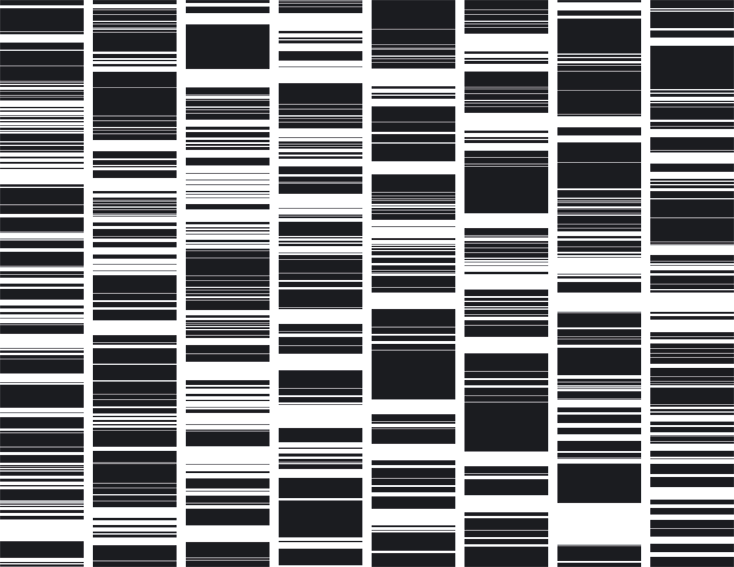

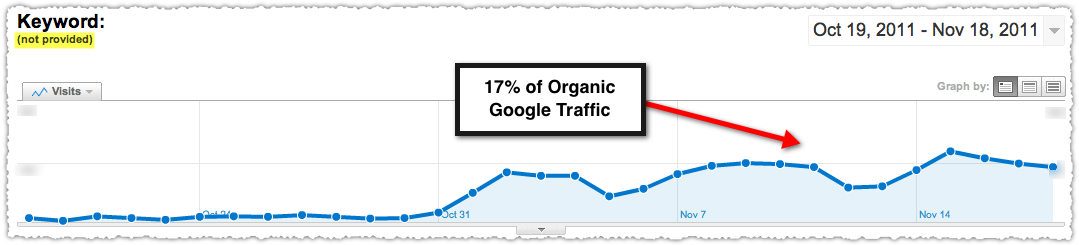

By now I’m sure you’ve seen the Google Analytics line graph that shows the rise of (not provided) traffic.

Sure enough, 17% of all organic Google traffic on this blog is now (not provided). That’s high in comparison to what I see among my client base but makes sense given the audience of this blog.

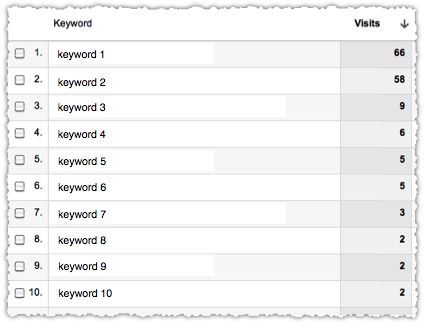

As it is for many others, (not provided) is also my top keyword by a wide margin. I think seeing this scares people, but it makes perfect sense. What other keyword is going to show up under every URL?

Instead of staring at that big aggregate number, you have to look at the impact (not provided) is having on a URL-by-URL basis.

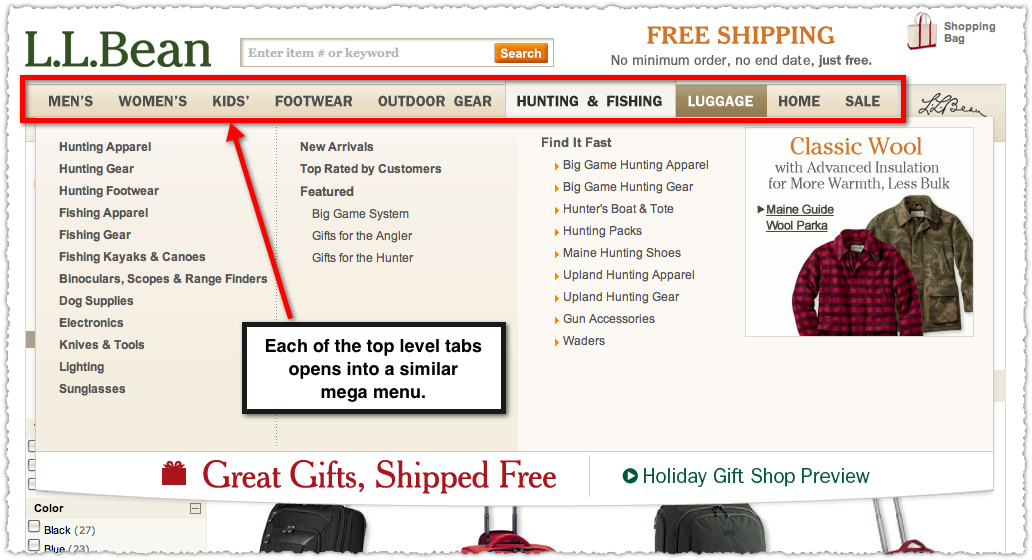

Landing Page by Keywords

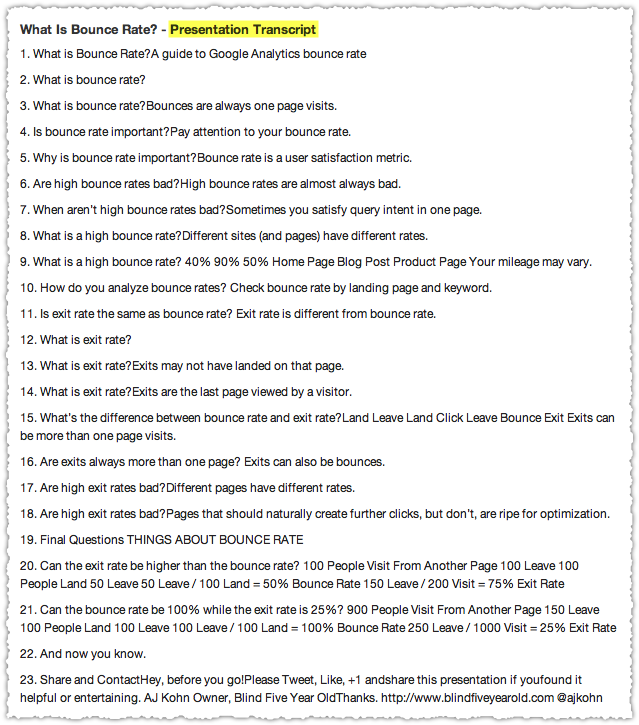

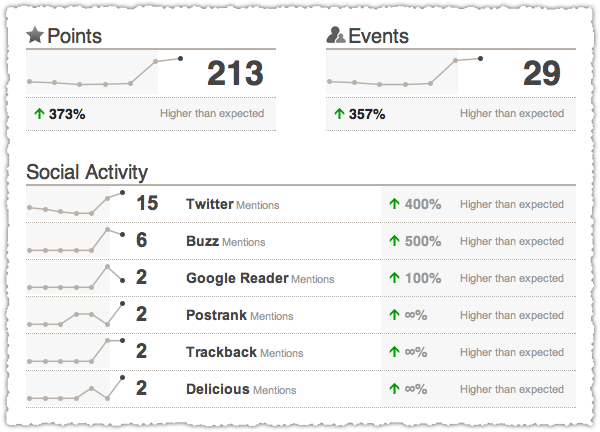

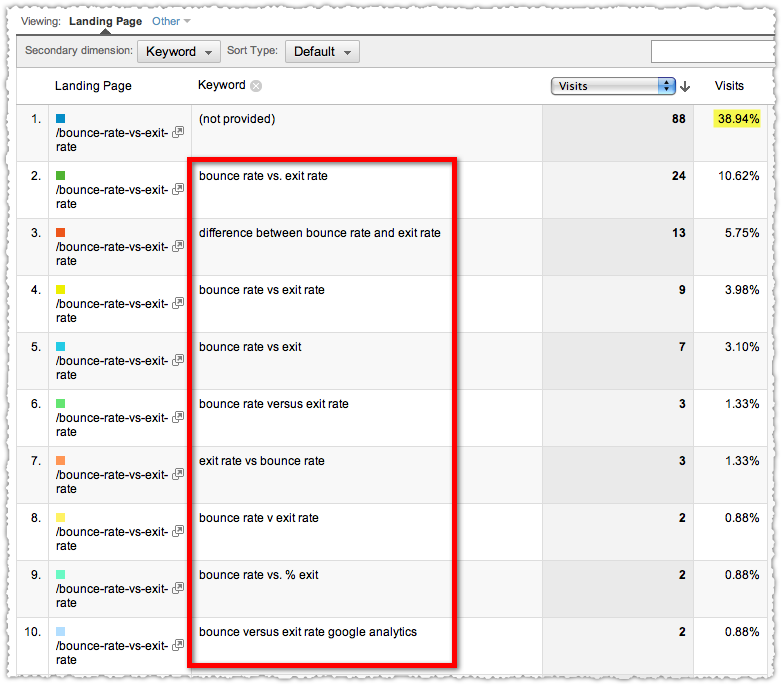

To look at the impact of (not provided) for a specific URL you need to view your Google organic traffic by Landing Page. Then drill down on a specific URL and use Keyword as your secondary dimension. Here’s a sample landing page by keywords report for my bounce rate vs exit rate post.

In this example, a full 39% of the traffic is (not provided). But a look at the remaining 61% makes it pretty clear what keywords bring traffic to this page. In fact, there are 68 total keywords in this time frame.

Clustering these long-tail keywords can provide you with the added insight necessary to be confident in your optimization strategy.
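Here’s a minimal sketch of one way to cluster, assuming you’ve exported the keywords and visit counts for a landing page. It groups queries that use the same meaningful words regardless of order or punctuation. The `STOP_WORDS` list, the function names and every visit count except the 24 for the head term are made up for illustration; real clustering usually needs more hand-tuning than this.

```python
import re
from collections import defaultdict

# Hypothetical filler words to ignore when comparing queries.
STOP_WORDS = {"vs", "versus", "and", "the", "a", "an", "of", "in",
              "what", "is", "between", "difference"}


def cluster_key(keyword):
    """Reduce a query to its sorted set of meaningful words."""
    tokens = re.findall(r"[a-z0-9]+", keyword.lower())
    return " ".join(sorted(set(tokens) - STOP_WORDS))


def cluster_keywords(visits_by_keyword):
    """Sum visits for all queries that share the same cluster key."""
    clusters = defaultdict(int)
    for keyword, visits in visits_by_keyword.items():
        clusters[cluster_key(keyword)] += visits
    return dict(clusters)


# Illustrative data: only the first count (24) comes from the report above.
sample = {
    "bounce rate vs. exit rate": 24,
    "exit rate vs bounce rate": 5,
    "difference between bounce rate and exit rate": 3,
    "moonraker steel teeth": 1,
}

clusters = cluster_keywords(sample)
for key, visits in sorted(clusters.items(), key=lambda item: -item[1]):
    print(f"{key}: {visits}")
```

The three bounce rate and exit rate variations collapse into a single cluster, while the oddball query stays on its own.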

(Not Provided) Keyword Distribution

The distribution of keywords outside of (not provided) gives us insight into the keyword composition of (not provided). In other words, the keywords we do see tell us about the keywords we don’t.

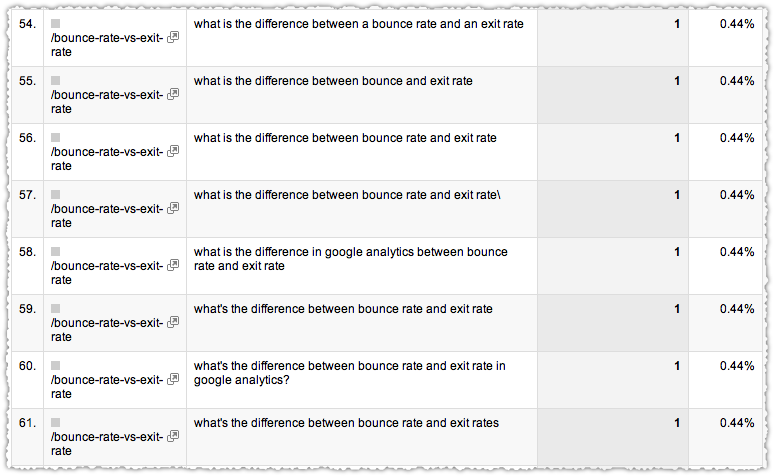

Do we really think that the keywords that make up (not provided) are going to be that different from the ones we do see? It’s highly improbable that a query like ‘moonraker steel teeth’ is driving traffic under (not provided) in my example above.

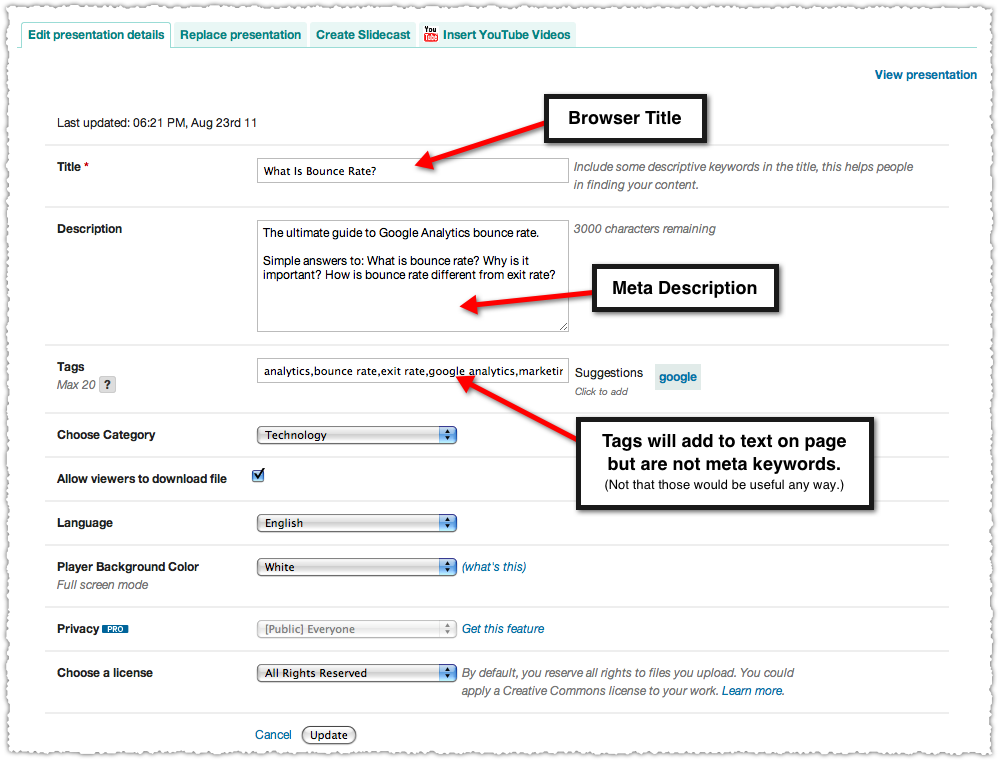

If you want to take things a step further, you can apply the distribution of the clustered keywords against the pool of (not provided) traffic. First, you reduce the denominator by subtracting the (not provided) traffic from the total. In this instance that’s 208 – 88, which is 120.

Even without any clustering, you can take the first keyword (bounce rate vs. exit rate) and determine that it accounts for 20% of the remaining traffic (24/120). You can then apply that 20% to the (not provided) traffic (88) and conclude that approximately 18 of the (not provided) visits came from that specific keyword.

Is this perfectly accurate? No. Is it good enough? Yes. Keyword clustering will further reduce the variance you might see by specific keyword.
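The arithmetic above can be sketched in a few lines of Python. This is just one way to write it: the function name is my own, and the "all other visible keywords" bucket is a placeholder that lumps together everything in the report besides the first keyword. The 208, 88 and 24 figures are the ones from the example.

```python
def distribute_not_provided(visible, not_provided):
    """Spread (not provided) visits across visible keywords by their share."""
    visible_total = sum(visible.values())
    if visible_total == 0:
        raise ValueError("no visible keyword traffic to base the distribution on")
    return {
        keyword: not_provided * visits / visible_total
        for keyword, visits in visible.items()
    }


# Figures from the example: 208 total visits, 88 of them (not provided).
total_visits = 208
not_provided_visits = 88

# Only the first keyword's count (24) comes from the report; the second
# entry is a placeholder for the rest of the visible keywords.
visible_keywords = {
    "bounce rate vs. exit rate": 24,
    "all other visible keywords": total_visits - not_provided_visits - 24,
}

estimates = distribute_not_provided(visible_keywords, not_provided_visits)
for keyword, extra in estimates.items():
    print(f"{keyword}: about {extra:.0f} hidden visits")
```

If you cluster first, pass the cluster totals in as `visible` instead of the raw keywords.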

Performance of (Not Provided) Keywords

The assumption I’m making here is that the keyword behavior of those logged in to Google doesn’t differ dramatically from those who are not logged in. There may be some difference, but I don’t see it being large enough to be material.

If you have an established URL with a history of getting a steady stream of traffic you can go back and compare the performance before and after (not provided) was introduced. I’ve done this a number of times (across client installations) and continue to find little to no difference when using the distribution method above.
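A rough sketch of that before-and-after check might look like the following. Every number here is invented purely to exercise the code, and the function names are hypothetical. It assumes you have keyword visit counts for comparable periods before and after (not provided) appeared, and it uses the same proportional distribution described earlier.

```python
def estimated_totals(visible, not_provided):
    """Visible visits plus a proportional share of (not provided) visits."""
    visible_total = sum(visible.values())
    return {
        keyword: visits + not_provided * visits / visible_total
        for keyword, visits in visible.items()
    }


def compare_periods(before, after_visible, after_not_provided):
    """Return (before, estimated after, relative change) per keyword."""
    after = estimated_totals(after_visible, after_not_provided)
    report = {}
    for keyword, before_visits in before.items():
        after_visits = after.get(keyword, 0.0)
        if before_visits:
            change = (after_visits - before_visits) / before_visits
        else:
            change = float("nan")
        report[keyword] = (before_visits, after_visits, change)
    return report


# Made-up visit counts for one URL over two comparable periods.
before = {"bounce rate vs. exit rate": 40, "exit rate definition": 20}
after_visible = {"bounce rate vs. exit rate": 24, "exit rate definition": 12}
after_not_provided = 24

report = compare_periods(before, after_visible, after_not_provided)
for keyword, (old, new, change) in report.items():
    print(f"{keyword}: {old} -> {new:.1f} ({change:+.0%})")
```

Small relative changes suggest the distribution is holding up for that URL; large swings are a cue to look closer before trusting the estimate.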

Even without this analysis, it comes down to whether you believe that query intent changes based on whether a person is logged in or not. Given that many users probably don’t even know they’re logged in, I’ll take no for 800, Alex.

What’s even more interesting is that this is information we didn’t have previously. If by chance all of your conversions only happen from those logged-in, how would you have made that determination prior to (not provided) being introduced? Yeah … you couldn’t.

While Google has made the keyword private they’ve actually broadcast usage information.

(Not Provided) Solutions

Don’t get me wrong. I’m not happy about the missing data, nor the double standard between paid and organic clicks. Google has a decent privacy model through their Ads Preferences Manager. They could adopt the same process here and allow users to opt out instead of the blanket opt-in currently in place.

Barring that, I’d like to know how many keywords are included in the (not provided) traffic in a given time period. Even better would be a drill-down feature with traffic against a set of anonymized keywords.

However, I’m not counting on these things coming to fruition, so it’s my job to figure out how to do keyword research and optimization given the new normal. As I’ve shown, you can continue to use Google Analytics, particularly if you cluster keywords appropriately.

Of course, you should be using other tools to determine user syntax, identify keyword modifiers and define query intent. When keyword performance is truly in doubt, you can even resort to running a quick AdWords campaign. While this might irk you and elicit tin foil hat theories, you should probably be doing a bit of this anyway.

TL;DR

Google’s (not provided) policy might not be fair, but it is far from the end of the world. Whining about (not provided) isn’t going to change anything. Figuring out how to overcome this obstacle is your job, and it’s how you’ll distance yourself from the competition.