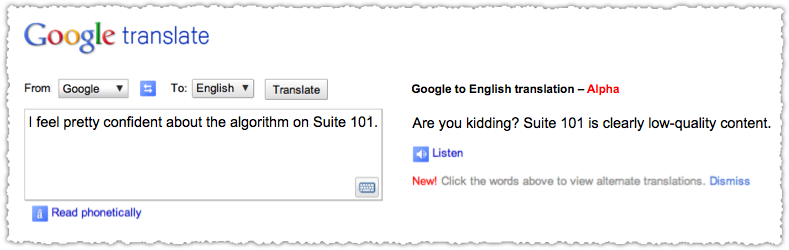

Google has a love-hate relationship with the SEO community. It views many SEO agencies, consultants and services as part of the problem: parasites that seek to exploit and game its algorithm. No doubt, many fall into this category.

Unfortunately, Google’s lack of transparency contributes to the problem, spawning a host of poor theories and misguided practices. In addition, the changing nature of the algorithm creates a powerful variant of bit rot: outdated information and myths that stubbornly persist.

In response, Google has worked (perhaps reluctantly) to improve communication with the SEO community. It sends employees to search conferences, writes blogs, creates videos, maintains a forum, provides informational tools and has a presence on social media platforms (Twitter) and sites (Hacker News).

The vast majority of these efforts are undertaken by one person: Matt Cutts.

Last month Google stepped up its communication efforts, dedicating a blog to search (it’s about time!) and hosting a live 90-minute Q&A session on YouTube. I’m encouraged by these developments, but Google still doesn’t have a solid share of voice within the SEO community, and when it does speak, its message is often viewed with suspicion.

Here are three ways Google could improve SEO relations.

Google Search Summit

Invite select members (perhaps 50) of the SEO community to the Google campus for a search summit with Google engineers. This would be very different from a conference, where the day-to-day mechanics of the SEO industry are discussed.

Instead, I propose a real exchange of ideas on the nature and problems of search. It could even have a lean component where groups are challenged to propose a new way to deal with a specific search problem.

There are a number of smart folks in the SEO community who could contribute positively to discussions on search quality or web spam. Even if Google doesn’t believe this, understanding how the SEO community perceives certain stances, guidelines and practices would be valuable.

At a minimum, the dialog would provide additional context behind search guidelines and algorithmic efforts. For Google, this means the attendees become agents of ‘truth’. Once they have had the chance to truly engage and learn, attendees can help transmit Google’s message. I’m not talking about a Kool-Aid conversion but about building a greater degree of trust through knowledge transfer and personal relationships.

Attendance would require a modicum of discretion and a certain level of knowledge of, or interest in, information retrieval, human-computer interaction, natural language processing and machine learning.

Even if I didn’t get an invite (though I’d want one), I think it’s a good idea for Google and the SEO community.

Google Change Log

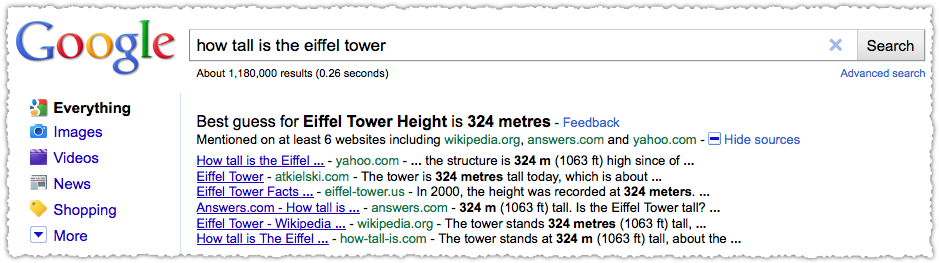

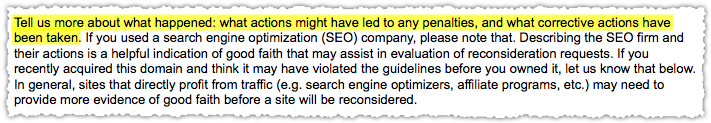

The SEO community is intensely curious about when and what changes are made to search, whether algorithmic or design-oriented. Some amount of transparency here would go a long way. Would it really hurt to let the SEO community know that a certain type of bucket test was in the field?

We’re already seeing most of the UX tests, with blogs cranking out screenshots of the latest SERP oddity they’ve encountered. So why not publish a changelog, using FriendFeed as a model?

FriendFeed made it clear that its changelog wasn’t comprehensive, but it did provide a level of transparency and insight into pain points and personality. The latter was especially true because each entry was linked to the responsible user’s FriendFeed account.

Imagine a Google changelog where each entry is linked to the engineer’s Google Profile. God forbid we learn a little bit about the search quality engineers.

I understand that certain changes cannot be shared. But opening the kimono just a little would make a real difference.

LOLMatts

Matt Cutts is willing to interact at length at conferences and jump into comment threads (in a single bound). He gets a bit of help from folks like Maile Ohye and John Mueller, but he’s essentially a solo act.

If Google isn’t going to allow (or encourage) more engineers to interact with stakeholders (yeah, I have a business background), then you have to amplify the limited amount of Matt we have at our disposal.

What better way than to create a Matt Cutts meme? LOLMatts!

Yes, this is tongue-in-cheek, but my point is that Google should do some marketing.

Make the messages pithy and viral.

Break through the clutter and keep it simple.

Make it easier for people to pass along important information. I’ve just created four LOLMatts that cover page sculpting, cloaking, meta keywords and paid links. Of course, this can go wrong in a multitude of ways and be used for evil. But the idea is to think of ways to amplify the message.

Develop some interesting infographics. Heck, Danny Sullivan even got you started. Get busy creating some presentations (you could do worse than using Rand as a model) and upload them to SlideShare. Or create an eBook and let people pay for it with a Tweet.

Let’s see some marketing innovation.

TL;DR

Google’s rocky relationship with the SEO community could be improved through real interaction and engagement, greater transparency (both technical and human) and marketing techniques that amplify its message.

The SEO community and Google would benefit from these efforts.