Stop writing for people. Start writing for search engines.

I’ll wait while you run to get your pitchforks and light your torches. I know it sounds like heresy, but I ask you to hold your judgment.

Search Engines Emulate Human Evaluation

The goal of search engine algorithms is to emulate the human evaluation of a site or page. This is not an easy task. In fact, it’s a really difficult task. Think of all the things that you tap into when you evaluate a new website. The amount of analysis that goes on in just a few seconds is astounding.

The thing to remember is that search engines want to be a proxy for human evaluation. They’re trying to be … human. Don’t lose sight of this.

Search Engines Are Not Smart

But for all of that effort, search engines aren’t smart. The name of my blog sums up my opinion of search engines: a search engine is like a blind five-year-old.

The blind part comes in because search engines don’t care how pretty your site is or what gorgeous color palette you’ve selected. Mind you, visual assessment is one of the factors humans use to evaluate a website, but search engines can’t do it.

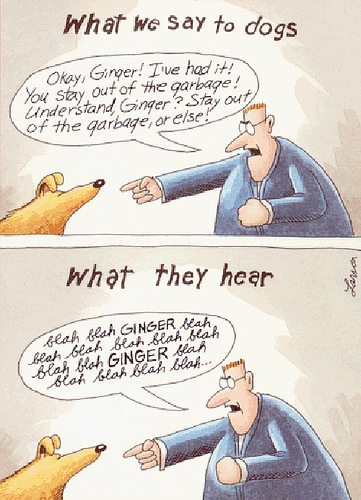

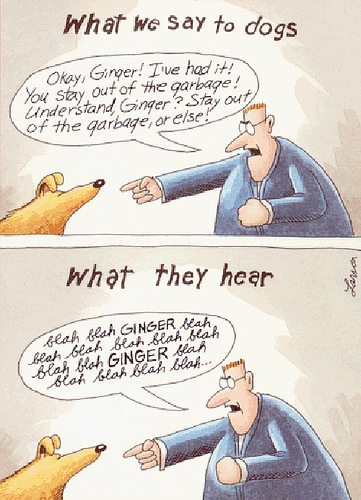

Why a five-year-old? For all of the advances search engines have made, they’re still not ‘reading’. They’re performing text and language analysis. That’s a huge distinction. Really. A Grand Canyon-sized distinction.

A search engine would likely fail a basic reading comprehension test.

Knowing this, you need to make it very clear what the page is about and where the search engine should go next. This helps the search engine and … ultimately helps people too.

First Impressions Matter

For a number of years I ran telemarketing programs. (University fundraising, if that makes you feel better about me.) What you find out is that you have only seven seconds to convince a person to stay on the phone. They’d better hear something worthwhile fast, or you’ll get the dial tone.

It’s no different online. With high-speed connections, tabbed browsing, real-time information and an environment where anyone can publish anything, that first impression is incredibly important. Within a few seconds, users decide whether that content is authoritative and relevant.

Is it any wonder that tools like FiveSecondTest and Clue have become popular?

People Scan Text

Did you know that the P.S. line is one of the most read parts (if not the most read part) of a direct mail solicitation? It is. People naturally gravitate toward it. They’re far more willing to read the P.S. line than any of the body copy.

And we see this behavior online too. Research by Jakob Nielsen shows that most readers scan instead of reading word for word.

People rarely read Web pages word by word; instead, they scan the page, picking out individual words and sentences. In research on how people read websites we found that 79 percent of our test users always scanned any new page they came across; only 16 percent read word-by-word.

Another study showed that even those who ‘look’ at your content are only reading between 18% and 28% of it.

Have you seen tl;dr spring up around the web lately? It stands for “too long, didn’t read” and is used to summarize content in a sentence or two. It can appear at the top or at the bottom of a piece. At the bottom, it serves as a close cousin to the traditional direct mail P.S. line.

But why exactly are we seeing tl;dr? Could it be that the content we’re writing just isn’t concise enough? That it’s not formatted for readability? It’s your job to make it easy for people to understand and engage with your content. Keep it simple, stupid.

SEO is more than tags and links. Today SEO is also about User Experience (UX).

The Brain Craves Repetition

There’s an old adage in public speaking that only a third of the audience is listening to you at any given time. This means that you have to repeat yourself at least three times to get your point across. A recent Copyblogger post touched on this subject.

The brain can’t pay attention to everything, and it doesn’t let everything in. It figures that anything repeated constantly must be important, so it holds on to that information.

I also believe in a type of visual osmosis. When evaluating a page for the first time, words that are repeated frequently make an impression, whether they’re specifically read or not.

People instinctively want consistency. They want to know that they’re reading the right thing, in the right way, in the right order. They want to group things. That’s one reason why ‘list’ posts are so popular.

Apply Steve Krug’s ‘Don’t Make Me Think’ philosophy to your content, not just for search engines but for people.

Stop Using Pronouns

Why use that pronoun when you can use the actual noun instead? Sure, you know what you’re talking about and the reader might too, but putting the noun in ensures that the reader (who is not nearly as invested in your content) is following along. And our dear friend the search engine is also better served.

Having that keyword noun in your content frequently doesn’t make it worse; it makes it better. When you reread it, it may feel bloated. But the majority of your readers are skimming, and the minority who are truly reading will simply not notice those extra nouns. In fact, they become a bit like sign posts.

Here’s an example from the world of books: dialog! What if you didn’t attribute dialog to a specific person?

“I want Mexican food,” he said.

“No, let’s get Italian food,” he replied.

“Can’t we meet in the middle?” he queried.

How many people are talking? Two? Three? Perhaps one if you’re a Fight Club fan.

Now let’s add the names back in.

“I want Mexican food,” Harry said.

“No, let’s get Italian food,” Ron replied.

“Can’t we meet in the middle?” Tom queried.

Now I know there are three people talking. And as that dialog continues (as dull as it might be) I’ll use those names as sign posts so I know who’s saying what. But will I actually ‘read’ each instance of that name? Probably not. I’ll pick up the cadence of the dialog and essentially become blind to the actual name. Blind, that is, until things become unclear, and then I’ll seek that name out to clarify exactly who said what.

When people scan they need those sign posts. They need to see that keyword so they can quickly follow along.

Web Writing is Different

When people say you should write for the user, they mean well. In spirit, I completely agree. But in practice, it usually goes dreadfully wrong.

I’ll never forget my first job out of college. I was an Account Coordinator at an advertising agency. One of my jobs was to write up meeting notes. As an English minor I took a bit of pride in my writing skills. So it was a great shock to get back my first attempt with a river of red marks on it.

What had I done wrong?

I wasn’t using the right style. Meeting notes aren’t literature or an essay. I didn’t have to find a different word for ‘agreed’ just because I disliked using the same verb more than once (twice at most). No, I was told that my writing was too ‘flowery’ and that I needed to aim for clarity and brevity.

I’m not saying that writing for the web is like writing meeting notes. But I am saying that writing for the web is different!

So when you tell someone to write for the user, they usually write the wrong way. They write thinking the user is going to be gorging themselves on every word, giving the content their full attention. They think the user will appreciate the two-paragraph humorous digression from the main topic. They’ll want to write like David Mitchell or Margaret Atwood. (Or maybe that’s just me.)

Robots Don’t Understand Irony

To my knowledge, there is no double entendre database, no irony subroutine and no witticism filter in the algorithm. Do they have a place in your writing? Sure. But sparingly. Not just because search engines won’t understand them but because, like it or not, a lot of people won’t get them either.

Everyone might not get the inside joke … like why I used the image of this particular novel above.

Write for Search Engines

Make sure they know exactly what you’re writing about. Stay focused. Break your content up into shorter paragraphs and use big descriptive titles. Avoid pronouns and don’t assume they understand what you just said in a previous paragraph. Keep it simple and give them sign posts.

Write for people the right way. Write for a search engine.

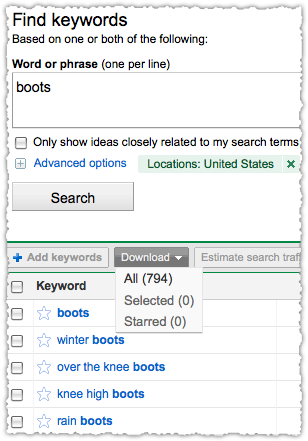

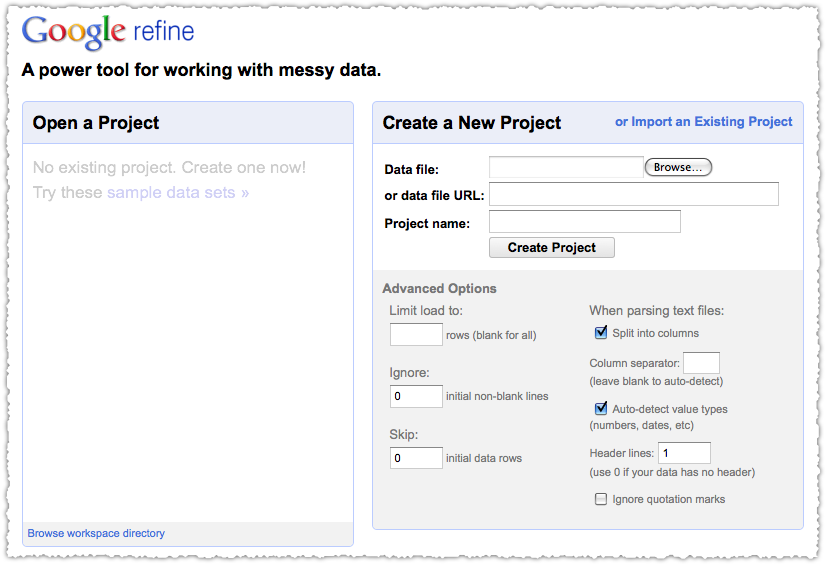

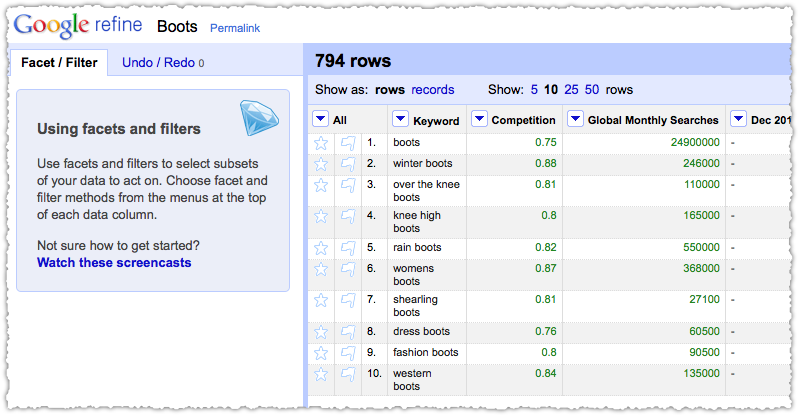

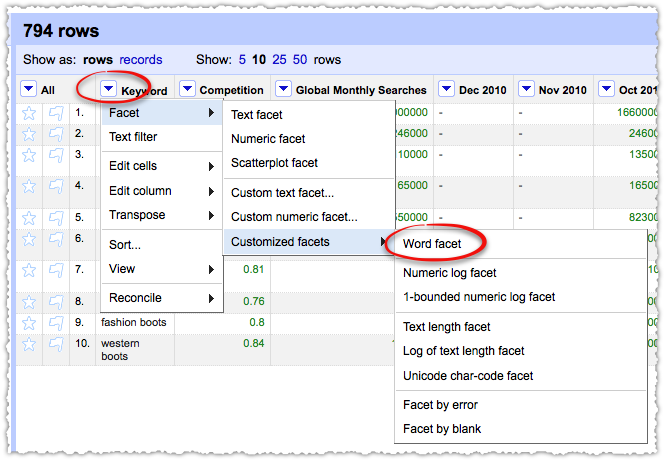

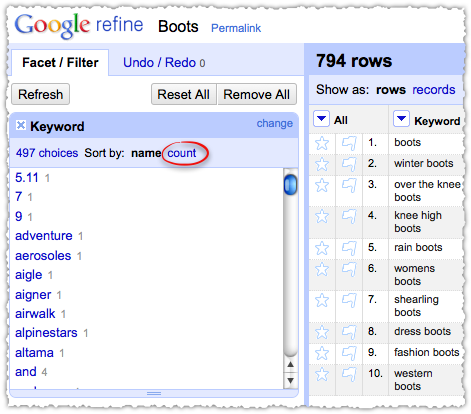

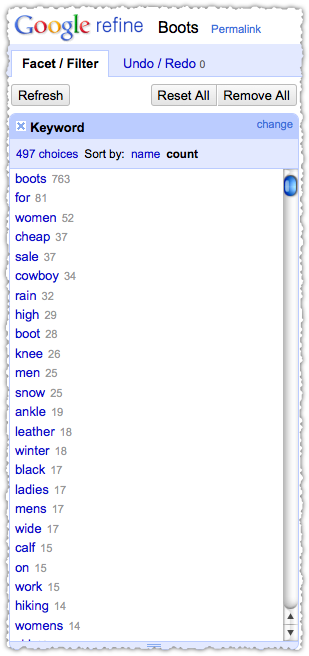

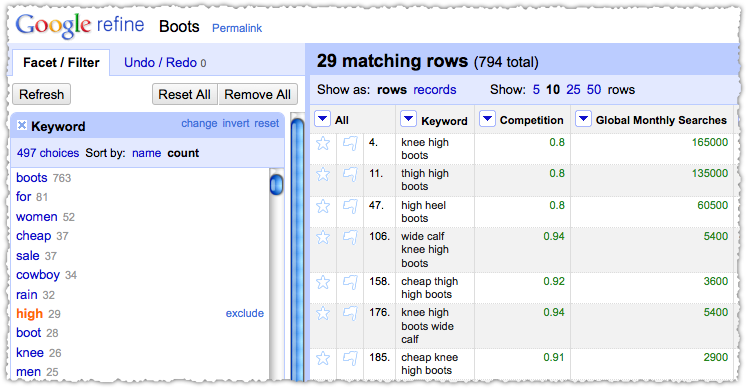

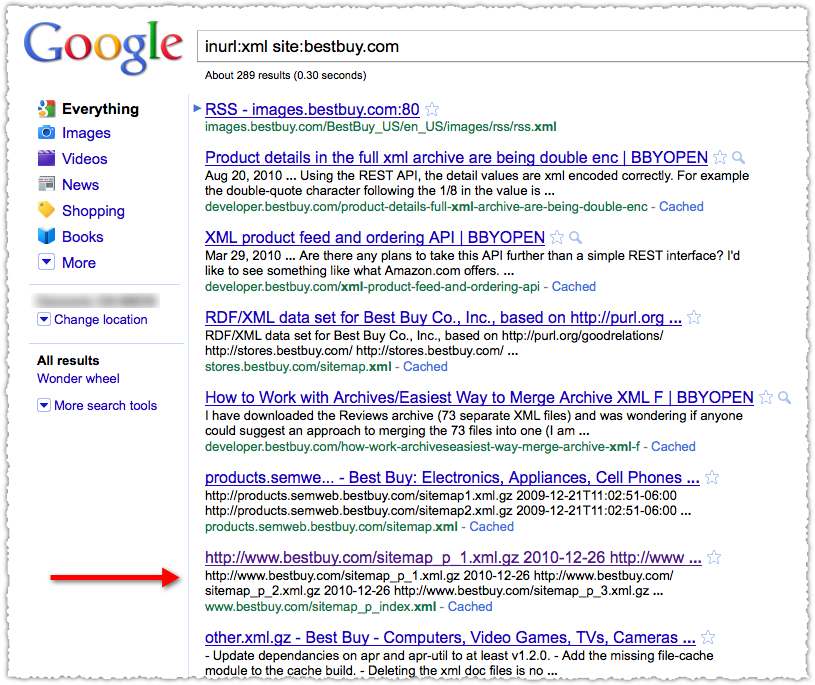

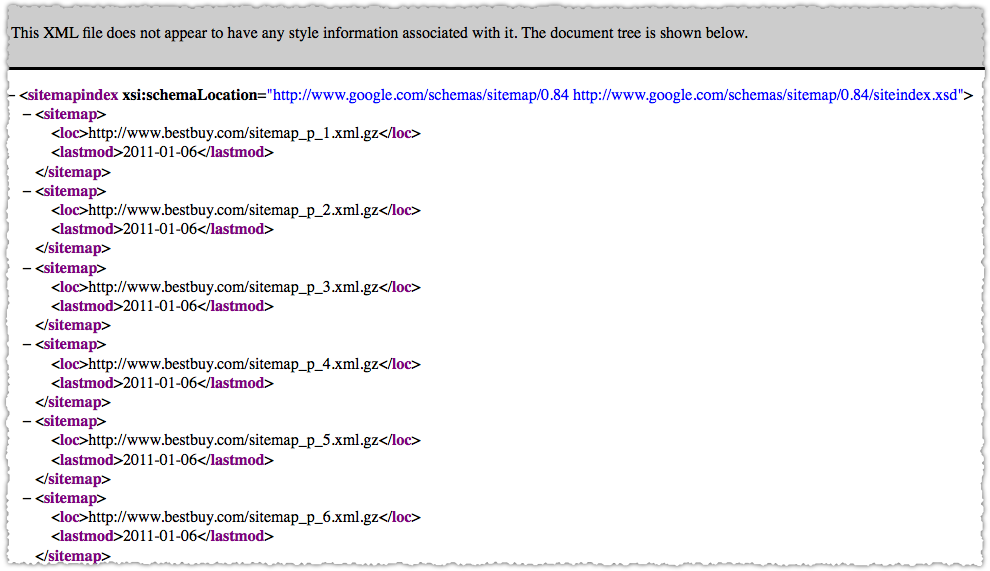

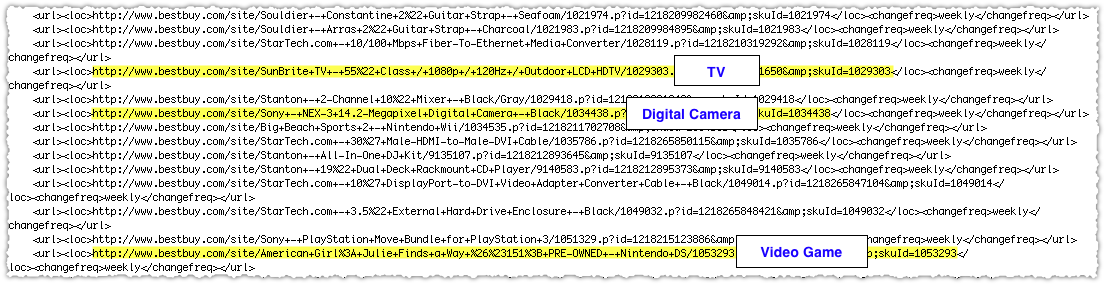

Keyword research is a vital component of SEO. Part of that research usually entails finding the most frequent modifiers for a keyword. There are plenty of ways to do this but here’s a new way to do so using Google Refine.
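The counting idea behind finding modifiers can be sketched in a few lines of plain Python. This is not the Google Refine approach; it is a swapped-in illustration that assumes you already have a list of search queries (for example, exported from a keyword tool). The function name `modifier_counts`, the small stopword list and the sample queries are all hypothetical.

```python
import re
from collections import Counter

# Hypothetical stopword list: filler words we don't want counted as modifiers.
STOPWORDS = {"a", "an", "the", "for", "of", "in", "to", "with", "and"}


def modifier_counts(queries, keyword):
    """Count the words that appear alongside `keyword` in a list of queries.

    Assumes `queries` is a plain list of query strings. Queries that don't
    contain every word of the keyword are skipped; the keyword's own words
    and stopwords are excluded, so only the modifiers are tallied.
    """
    keyword_tokens = set(keyword.lower().split())
    counts = Counter()
    for query in queries:
        tokens = re.findall(r"[a-z0-9']+", query.lower())
        if not keyword_tokens.issubset(tokens):
            continue  # query doesn't contain the target keyword
        counts.update(
            t for t in tokens if t not in keyword_tokens and t not in STOPWORDS
        )
    return counts


# Hypothetical sample queries for the keyword "running shoes".
queries = [
    "cheap running shoes",
    "best running shoes",
    "running shoes for women",
    "best running shoes for flat feet",
    "trail running shoes",
    "hiking boots",
]

for modifier, count in modifier_counts(queries, "running shoes").most_common(3):
    print(modifier, count)
```

With a real export of thousands of queries, the `most_common` list surfaces the modifiers people attach to the keyword most often.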
