On Friday, Google released a list of questions to help guide publishers who have been impacted by the Panda update.

Because Google can’t (or won’t) give specifics about their algorithm, we’re always left to read between the lines, trying to decipher the true meaning behind the words. Statements by Matt Cutts are given the type of scrutiny Wall Street gives those by Ben Bernanke.

Speculation is entertaining, but is it productive? Google seems to encourage it, even within this recent blog post:

“These are the kinds of questions we ask ourselves as we write algorithms that attempt to assess site quality. Think of it as our take at encoding what we think our users want.”

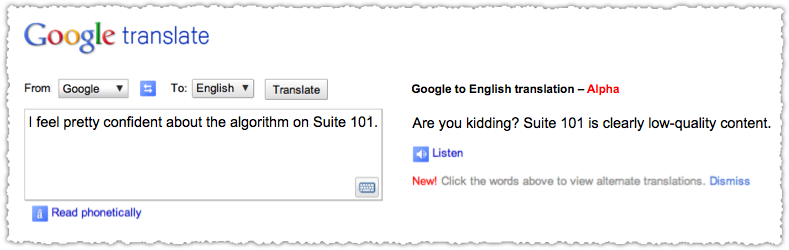

So perhaps there is value (beyond entertainment) in trying to translate and decode the recent Panda questions.

Panda Questions Translation

Would you trust the information presented in this article?

The web is still about trust and authority. The fact that this is the first question makes me believe it’s a reference to Google’s normal calculation of PageRank using the (rickety) link graph.

Is this article written by an expert or enthusiast who knows the topic well, or is it more shallow in nature?

Is Google looking at the byline of articles and the relationships between people and content? Again, the position of this question makes me think this is a reference to the declining nature of the link graph and the rising influence of the people graph.

Does the site have duplicate, overlapping, or redundant articles on the same or similar topics with slightly different keyword variations?

This reveals a potential internal duplicate content score and over-optimization signal in which normal keyword clustering is thwarted by (too) similar on-site content. It may also be referred to as the eHow signal.
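To make the idea of an internal duplicate content score concrete, here is a minimal sketch that compares two articles by the overlap of their three-word shingles (Jaccard similarity). This is purely my illustration of one common near-duplicate technique; the function names and the shingle size are assumptions, and nothing here is confirmed as Google's method.

```python
def shingles(text, k=3):
    """Return the set of k-word shingles in text (lowercased, whitespace-split)."""
    words = text.lower().split()
    if len(words) < k:
        # Too short to shingle: treat the whole text as a single shingle.
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + k]) for i in range(len(words) - k + 1)}


def jaccard_similarity(text_a, text_b, k=3):
    """Share of shingles the two texts have in common, from 0.0 to 1.0."""
    a, b = shingles(text_a, k), shingles(text_b, k)
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


# Two hypothetical on-site articles that differ by one keyword variation.
score = jaccard_similarity("how to boil water quickly", "how to boil water fast")
print(score)
```

A site where many article pairs score close to 1.0 would look like one topic rewritten for slightly different keywords.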

Would you be comfortable giving your credit card information to this site?

Outside of qualitative measures, Google might be looking for the presence or prominence of a privacy policy.

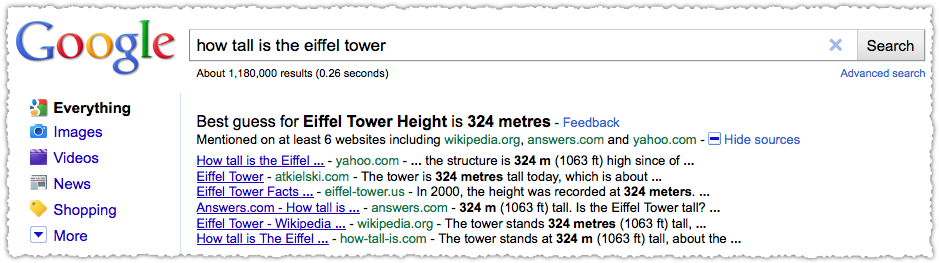

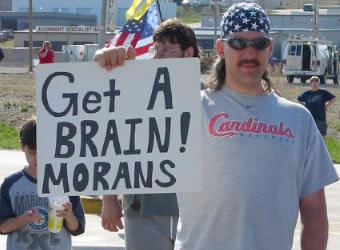

Does this article have spelling, stylistic, or factual errors?

We already know that Google applies a reading level to content. But maybe they also extract statements and run them through a fact-checking database. So, stating that the Eiffel Tower is 500 meters tall (instead of 324) might be a negative signal.

Are the topics driven by genuine interests of readers of the site, or does the site generate content by attempting to guess what might rank well in search engines?

This sounds like a mechanism to find sites that have no internal topical relevance. In particular, it feels like a signal designed to identify splogs.

Does the article provide original content or information, original reporting, original research, or original analysis?

Nice example of keyword density here! But it certainly gets the point across, doesn’t it? Google isn’t green when it comes to content recycling. Google wants original content.

Does the page provide substantial value when compared to other pages in search results?

This type of comparative relevance may be measured, over time, through pogosticking (searchers bouncing straight back to the results page) and rank-normalized CTR.
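For readers unfamiliar with rank-normalized CTR, a rough sketch follows: divide a result's observed click-through rate by the rate you would expect for its position. The baseline numbers in `EXPECTED_CTR` are made-up placeholders, and the function name is my own; this is an assumption about how such a metric could work, not a description of what Google does.

```python
# Hypothetical expected CTR by ranking position.
# These values are illustrative placeholders, not real data.
EXPECTED_CTR = {1: 0.30, 2: 0.15, 3: 0.10, 4: 0.07, 5: 0.05}


def rank_normalized_ctr(clicks, impressions, position):
    """Observed CTR divided by the expected CTR for that position.

    A value above 1.0 means the result earns more clicks than its
    position alone would predict; below 1.0 means fewer.
    """
    if impressions <= 0:
        raise ValueError("impressions must be positive")
    if position not in EXPECTED_CTR:
        raise ValueError("no baseline for position %d" % position)
    observed = clicks / impressions
    return observed / EXPECTED_CTR[position]


# A page in position 5 that draws 10 clicks from 100 impressions.
print(rank_normalized_ctr(10, 100, 5))
```

Normalizing this way lets a page in fifth position be compared fairly against a page in first, which is the point of the comparative question.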

How much quality control is done on content?

I think this is another reference to spelling and grammar. Google is proud (and should be) of their Did You Mean? spelling correction. I can’t imagine they wouldn’t want to apply it in other ways. As for grammar, I wonder if they dislike dangling prepositions?

Does the article describe both sides of a story?

I believe this is a Made for Amazon signal that tries to identify sites where the only goal is to generate clicks on affiliate links. I wonder if they’ve been able to develop a statistical model through machine learning that identifies overly one-sided content?

Is the site a recognized authority on its topic?

This seems like a clear reference to the idea of a site being a hub for information within a specific topic.

Is the content mass-produced by or outsourced to a large number of creators, or spread across a large network of sites, so that individual pages or sites don’t get as much attention or care?

Not much between-the-lines reading necessary on this one. Google doesn’t like content farms. This might as well just reference Mahalo and Demand Media.

Was the article edited well, or does it appear sloppy or hastily produced?

Once again, more emphasis on attention to detail within the content. Brush up on those writing and editing skills!

For a health related query, would you trust information from this site?

The qualifier of health makes me think this is a promotional signal, seeking to identify sites that are promoting some supplement or herb with outrageous claims of wellness. I’d guess machine learning on the content coupled with an increased need for citations (links) from .gov, .org or .edu sites could produce a decent model.

Would you recognize this site as an authoritative source when mentioned by name?

Brands matter. You can’t get much more transparent.

Does this article provide a complete or comprehensive description of the topic?

Oddly, the first thing that jumps to mind is article length. Does size really matter?

Does this article contain insightful analysis or interesting information that is beyond obvious?

Beyond obvious is an interesting turn of phrase. I’m not sure what to make of it unless they’re somehow targeting content like ‘How To Boil Water’.

It may also refer to ‘articles’ that are essentially a rehash of another article. You’ve seen them, the kind where large portions of another article are excerpted and introduced by a single short sentence.

Is this the sort of page you’d want to bookmark, share with a friend, or recommend?

This clearly refers to social bookmarking, Tweets, Facebook Likes and Google +1s: the social signals that should help better (or at least more popular) content rise to the top. These social gestures are the modern-day equivalent of a link.

Does this article have an excessive amount of ads that distract from or interfere with the main content?

There is evidence (and a good deal of chatter) that Google is actually rendering pages and can distinguish ads and chrome (masthead, navigation etc.) from actual content. If true, Google could create a content-to-ad ratio.

I’d also guess that this ratio is calculated from what is visible to the majority of users. How much real content is visible when you view the page through Google’s Browser Size tool? You should know.
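As a thought experiment, a ratio like this could be computed from the rendered layout. The sketch below assumes each page block has already been labeled as content, ad or chrome and measured in pixels; the `Block` type, the labels and the 600-pixel fold are all hypothetical choices of mine, not anything Google has described.

```python
from dataclasses import dataclass


@dataclass
class Block:
    kind: str    # "content", "ad" or "chrome" (hypothetical labels)
    top: int     # distance from the top of the page, in pixels
    height: int  # rendered height, in pixels
    width: int   # rendered width, in pixels


def visible_area(block, fold):
    """Area of the block that sits above the fold line."""
    visible_height = max(0, min(block.top + block.height, fold) - block.top)
    return visible_height * block.width


def content_to_ad_ratio(blocks, fold=600):
    """Visible content area divided by visible ad area above the fold."""
    content = sum(visible_area(b, fold) for b in blocks if b.kind == "content")
    ads = sum(visible_area(b, fold) for b in blocks if b.kind == "ad")
    if ads == 0:
        return float("inf")  # no visible ads at all
    return content / ads


page = [
    Block("chrome", top=0, height=80, width=960),
    Block("ad", top=80, height=100, width=700),
    Block("content", top=180, height=2000, width=700),
]
print(content_to_ad_ratio(page))
```

Lowering the `fold` value mimics a smaller browser window, which is the same check the Browser Size tool lets you do by eye.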

Would you expect to see this article in a printed magazine, encyclopedia or book?

This anachronistic question is about trust. I simply find it interesting that Google still believes that these older media convey more trust than their online counterparts.

Are the articles short, unsubstantial, or otherwise lacking in helpful specifics?

Another question around article length and ‘shallow’ content. I wonder if there is some sort of word diversity metric that could be applied to help identify articles that lack substance and specifics.
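One simple candidate for a word diversity metric is the type-token ratio: the number of distinct words divided by the total number of words. The sketch below is only an illustration of that textbook measure under my own assumptions (a crude tokenizer, English text); I have no evidence that Google uses it.

```python
import re


def type_token_ratio(text):
    """Distinct words divided by total words, from 0.0 to 1.0."""
    # Crude tokenizer: runs of ASCII letters and straight apostrophes.
    words = re.findall(r"[a-z']+", text.lower())
    if not words:
        return 0.0
    return len(set(words)) / len(words)


print(type_token_ratio("the cat sat on the mat"))
print(type_token_ratio("water water water"))
```

The ratio naturally falls as articles get longer, so any real comparison would need to be made between articles of similar length.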

Are the pages produced with great care and attention to detail vs. less attention to detail?

The verbiage of ‘pages produced’ makes me think this is about code and not content. We’ve heard that code validation isn’t really a signal, but that’s different from seeing gross errors in the mark-up that translate into a bad user experience.

Would users complain when they see pages from this site?

This is obviously a reference to the Chrome Personal Blocklist extension and new Blocked Sites functionality. Both features seemed like reactions to pressure from people like Vivek Wadhwa, Paul Kedrosky, Jeff Atwood, Michael Arrington and Rich Skrenta.

That this question is last in this list makes it seem like it was a late addition, and might lend some credence to the idea that these spam initiatives were spurred by the public attention brought by the Internati.

Panda Questions Analysis

Taken together, it’s interesting to note the number of questions that seem to be preoccupied with grammar, spelling and attention to detail. Yet, if Google had really gotten better at identifying quality in this way, wouldn’t it have been better to apply it at the URL level and not the domain level? (I have a few ideas why this didn’t happen, but I’ll share those in another post.)

Overall, the questions point to the shifting nature of how Google measures trust and authority as well as a clear concern about critical thinking and the written word. In light of the recent changes at Google, is this evidence that Google is more concerned with returning knowledge than simple results?


Comments About Translating Panda Questions

// 1 comments so far.

smart traffic reviews // June 01st 2011

A very humorous yet informative post! The Panda update has left thousands of SEOers going crazy all around the world! The list of questions laid out by Google is pretty clear, and it simply means that if your website is rubbish then Google won’t give you any value.
