Okay, Pandu Nayak didn’t exactly teach me about SEO.

But his October 18 anti-trust hearing testimony was both important and educational. And I’m a better SEO when I understand how Google really works.

Pandu Nayak is Vice President of Search working on various aspects of Search Quality. He’s been at Google for 18 years and is the real deal when it comes to search at Google.

I’m going to highlight areas from the October 18 transcript (pdf) I find notable or interesting and afterwards tell you how I apply this to my client work. The entire transcript is well worth your time and attention.

In all of the screenshots, Q. is Department of Justice counsel Kenneth Dintzer, The Court is Judge Amit P. Mehta, and A. or The Witness is Pandu Nayak.

Navboost

Why the interest in Navboost?

Remember, it was said to be “one of Google’s strongest ranking signals” and is mentioned 54 times in this transcript, making it the fifth most mentioned term overall.

Nayak also acknowledges that Navboost is an important signal.

I touched on Navboost in my It’s Goog Enough! piece and now we have better explanations about what it is and how Google uses it.

Navboost is trained on click data on queries over the past 13 months. Prior to 2017, Navboost was trained on the past 18 months of data.

Navboost can be sliced in various ways. This is in line with the foundational patents around implicit user feedback.
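To make the idea of "13 months of clicks, sliced different ways" concrete, here's a minimal Python sketch of a trailing-window click counter. It is not how Navboost works internally. The `Click` record, its fields, the slice dimensions (locale, device) and the 390-day approximation of 13 months are all hypothetical assumptions for illustration.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import date, timedelta

# Hypothetical click-log record; the field names are illustrative assumptions.
@dataclass(frozen=True)
class Click:
    day: date
    query: str
    url: str
    locale: str
    device: str

# Rough stand-in for "the past 13 months" (13 x 30 days).
WINDOW = timedelta(days=13 * 30)

def click_counts(log, today, slice_keys=("locale", "device")):
    """Count clicks per (query, url, *slice values) inside the trailing window."""
    counts = Counter()
    for c in log:
        age = today - c.day
        # Skip clicks older than the window (or dated after `today`).
        if timedelta(0) <= age <= WINDOW:
            key = (c.query, c.url) + tuple(getattr(c, k) for k in slice_keys)
            counts[key] += 1
    return counts
```

Passing `slice_keys=()` collapses everything into one count per query and URL, while each added dimension slices the same memorized data more finely. Clicks older than the window simply stop counting, which is one reason changes can take a while to show up.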

There are a number of references to moving a set of documents from the “green ring” to the “blue ring”. These all refer to a document that I have not yet been able to locate. However, based on the testimony it seems to visualize the way Google culls results from a large set to a smaller set where they can then apply further ranking factors.

There’s quite a bit here on understanding the process.

Nayak is always clear that Navboost is just one of the factors that helps in this process. Interestingly, one reason he gives is that some documents don’t have clicks but could still be highly relevant. When you think about it, this has to be the case or no new content would ever have a chance to rank.

There are also interesting insights into the stages of information retrieval and when different signals are applied.

I assume the deep learning and machine learning signals are computationally expensive, which is why they are applied only to the final set of candidate documents. (Though I’ve read some things about passage ranking that might turn this on its head. But I digress.)
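The "green ring" to "blue ring" funnel reads like classic multi-stage ranking. Here's a minimal sketch under that assumption: a cheap scorer culls a large candidate set, and an expensive scorer (standing in for something like RankBrain) only sees the survivors. Both scoring functions and the cutoff `k=30` are hypothetical placeholders, not values from the testimony.

```python
from typing import Callable, Sequence

def staged_rank(docs: Sequence[str],
                cheap_score: Callable[[str], float],
                expensive_score: Callable[[str], float],
                k: int = 30) -> list[str]:
    """Two-stage ranking sketch: a cheap scorer culls the candidate set,
    then an expensive scorer re-ranks only the top k survivors."""
    # Stage 1: score every document cheaply and keep the k best.
    shortlist = sorted(docs, key=cheap_score, reverse=True)[:k]
    # Stage 2: spend the expensive model only on the shortlist.
    return sorted(shortlist, key=expensive_score, reverse=True)
```

The design point is cost: with a million candidates and `k=30`, the expensive model runs 30 times instead of a million.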

So Navboost is still an important signal which uses the memorized click data over the last 13 months.

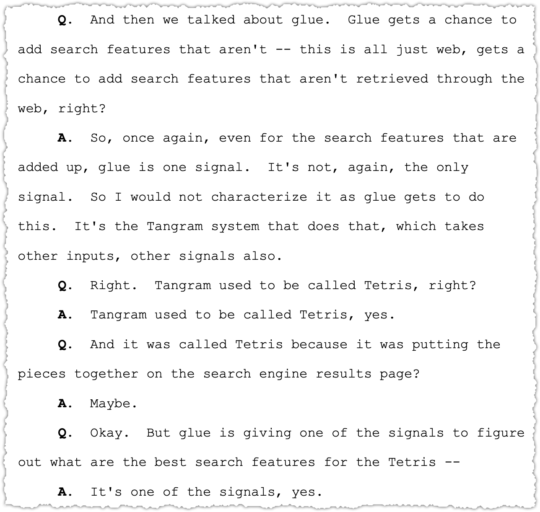

Glue

Glue is the counterpart to Navboost and informs what other features are populated on a SERP.

Though not explicitly stated, if Glue is essentially Navboost for all of the other features on the page, then it stands to reason that it logs user interactions with those features for all queries over the last 13 months.

So it might learn that users are more satisfied when there’s a video carousel in the SERP when searching for a movie title.

Tangram (née Tetris)

The system that works to put all of the features on a SERP is called Tangram.

I kind of like the name Tetris but Tangram essentially has the same meaning. It’s a complex and intricate puzzle.

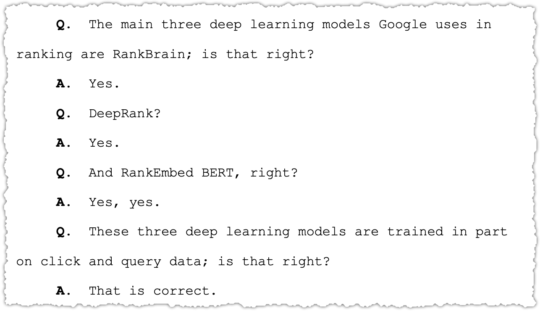

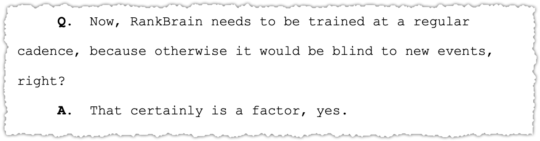

RankBrain

Now we can leave click data behind and turn our attention to the modern deep learning models like RankBrain. Right? Well … here’s the thing.

All of the deep learning models used by Google in ranking are trained, in part, on click and query data. This is further teased out in testimony.

None of this surprises me given what I know. In general, these models are trained on the click data of query-document pairs. Honestly, while I understand the concepts, the math in the papers and presentations on this subject is dense, to say the least.

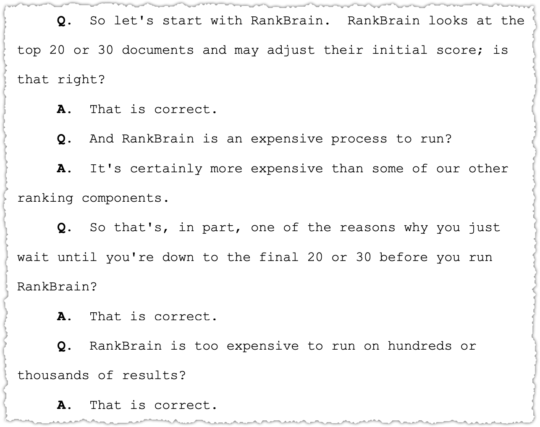

As mentioned above, RankBrain is run on a smaller set of documents at the tail end of ranking.

I’m not quite sure if RankBrain is part of the ‘official’ rank modifier engine. That’s a whole other rabbit hole for another time.

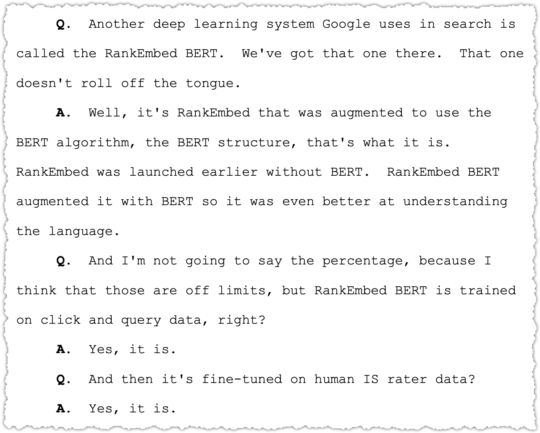

RankEmbed BERT

What about RankEmbed BERT?

Embed clearly refers to word embeddings and vectors, which are essentially a way to turn words into math. Early models like word2vec were context-insensitive. BERT, thanks to its bidirectional nature, provides context-sensitive embeddings. I cover a bunch of this in Algorithm Analysis In The Age of Embeddings.

This was a major change in how Google could understand documents and may have made Google a bit less reliant on implicit user feedback.
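If "turning words into math" sounds abstract, here's a toy Python sketch. Words become vectors, and closeness in meaning becomes the cosine of the angle between them. The three-dimensional vectors are made up for illustration; real embedding models learn hundreds of dimensions, and a contextual model like BERT would produce a different vector for the same word in different sentences.

```python
import math

def cosine(u, v):
    """Cosine similarity: 1.0 means same direction, 0.0 means unrelated (orthogonal)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0

# Toy vectors invented for illustration only.
vectors = {
    "vacuum": [0.9, 0.1, 0.0],
    "hoover": [0.8, 0.2, 0.1],
    "penicillin": [0.0, 0.1, 0.9],
}
```

Here `cosine(vectors["vacuum"], vectors["hoover"])` comes out close to 1 while `vacuum` and `penicillin` score close to 0, which is the kind of relationship a search engine can use without ever seeing a click.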

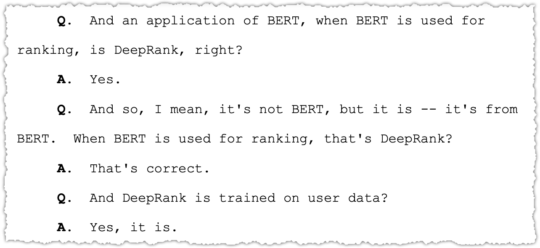

DeepRank

DeepRank seems to be the newest and most impactful deep learning model used in ranking. But it’s a bit confusing.

It seems that DeepRank isn’t really a different model but simply what BERT is called when it’s used for ranking purposes.

It would make sense that DeepRank uses transformers if it’s indeed a BERT based model. (Insert Decepticons joke here.)

Finally we get some additional color on the value of DeepRank in comparison to RankBrain.

There’s no question that Google is relying more on deep learning models, but it remains hesitant (with good reason) to rely on them exclusively.

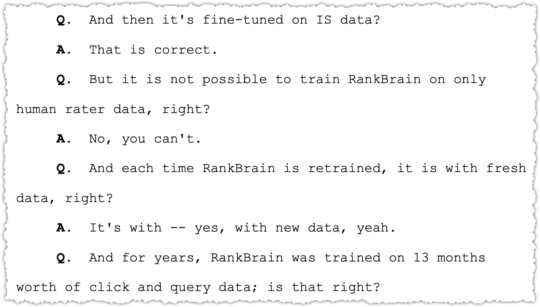

Information Satisfaction (IS) Scores

You may have noticed references to human raters and IS scores. Nowhere in the transcript does it say what IS stands for, but Google’s Response to ACCC Issues Paper (pdf) spells it out.

“Google tracks search performance by measuring “information satisfaction” (IS) scores on a 100 point scale. IS is measured blind by Search Quality Raters …”

So IS scores are from human raters via the Search Quality Evaluator Guidelines (pdf).

There’s a lengthy description of how these ratings are conducted and how they’re used, from fine tuning to experimentation.

The number that pops out for me is 616,386 experiments, which is either the count for 2020 alone or the total since 2020.

I struggle a bit with how this works, in part because I have issues with the guidelines, particularly around lyrics. But suffice it to say, it feels like the IS scores allow Google to quickly get a gut check on the impact of a change.

While not explicitly stated, there are mentions of columns of numbers that seem to reference the experiment counts. One seems to be for IS score experiments and the other for live testing.
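Google didn’t describe the statistics behind these comparisons, so treat this as a hypothetical sketch of what a quick gut check might look like: take blind 100-point IS ratings for the same queries under the current ranking and under an experiment, then look at the mean difference with a rough 95% interval (a normal approximation). The function name and the interval method are assumptions, not anything from the testimony.

```python
import statistics

def is_delta(control, experiment):
    """Mean per-query IS change (experiment minus control) with a rough
    95% normal-approximation interval.

    Assumes both lists hold 0-100 ratings for the same queries, in the same order.
    """
    if len(control) != len(experiment) or len(control) < 2:
        raise ValueError("need two equal-length lists with at least two queries")
    diffs = [e - c for c, e in zip(control, experiment)]
    mean = statistics.mean(diffs)
    stderr = statistics.stdev(diffs) / len(diffs) ** 0.5
    return mean, (mean - 1.96 * stderr, mean + 1.96 * stderr)
```

If the whole interval sits above zero, the change looks like an improvement worth a live test; if it straddles zero, the raters can’t tell the difference yet.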

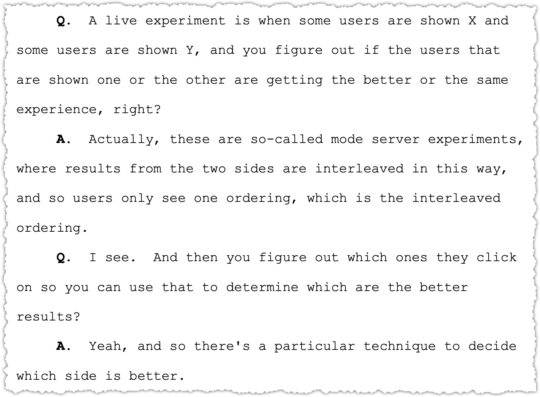

Interleaving

Live tests are not done as traditional A/B tests but instead employ interleaving.

There are a number of papers on this technique. The one I had bookmarked in Raindrop was Large-Scale Validation and Analysis of Interleaved Search Evaluation (pdf).

I believe we’ve been seeing vastly more interleaved tests in the last three years.
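For anyone unfamiliar with interleaving, here's a minimal sketch of team-draft interleaving, one well-known variant from the research literature. Whether Google uses this exact variant is an assumption. Instead of splitting users into A and B groups, each user sees a single merged list: the two rankers take turns "drafting" results, and clicks are credited to whichever ranker contributed the clicked result.

```python
import random

def team_draft_interleave(ranking_a, ranking_b, length, rng=random):
    """Merge two rankings with team-draft interleaving.

    Returns the merged list and a dict mapping each shown result to the
    team ("A" or "B") that drafted it.
    """
    sources = {"A": ranking_a, "B": ranking_b}
    picks = {"A": 0, "B": 0}
    merged, team_of = [], {}
    while len(merged) < length:
        # The team with fewer picks drafts next; ties are settled by a coin flip.
        if picks["A"] != picks["B"]:
            side = "A" if picks["A"] < picks["B"] else "B"
        else:
            side = "A" if rng.random() < 0.5 else "B"
        # Each team drafts its highest-ranked result that isn't shown yet.
        doc = next((d for d in sources[side] if d not in team_of), None)
        if doc is None:
            # This ranking is exhausted, so let the other team draft instead.
            side = "B" if side == "A" else "A"
            doc = next((d for d in sources[side] if d not in team_of), None)
            if doc is None:
                break  # both rankings are exhausted
        merged.append(doc)
        team_of[doc] = side
        picks[side] += 1
    return merged, team_of

def credit(team_of, clicked):
    """Credit each clicked result to the team that drafted it."""
    wins = {"A": 0, "B": 0}
    for doc in clicked:
        if doc in team_of:
            wins[team_of[doc]] += 1
    return wins
```

Aggregated over many queries, the ranker that earns more click credit wins. Because every user sees both rankers at once, interleaving tends to need far less traffic than a traditional A/B test to reach a verdict.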

So Frickin What?!

A number of people have asked me why I care about the mechanics and details of search. How does it help me with clients? Someone recently quipped, “I don’t see how this helps with your SEO shenanigans.”

My first reaction? I find the topic interesting, almost from an academic perspective. I’m intrigued by information retrieval and how search engines work. I also make a living from search so why wouldn’t I want to know more about it?

But that’s a bit of a lazy answer. Because I do use what I learn and apply it in practice.

Engagement Signals

For more than a decade I’ve worked under the assumption that engagement signals were important. Sure, I could be more precise and call them implicit user feedback or user interaction signals, but engagement is an easier thing to communicate to clients.

That meant I focused my efforts on getting long clicks. The goal was to satisfy users and not have them pogostick back to search results. It meant I was talking with clients about UX and I became adamant about the need to aggregate intent.

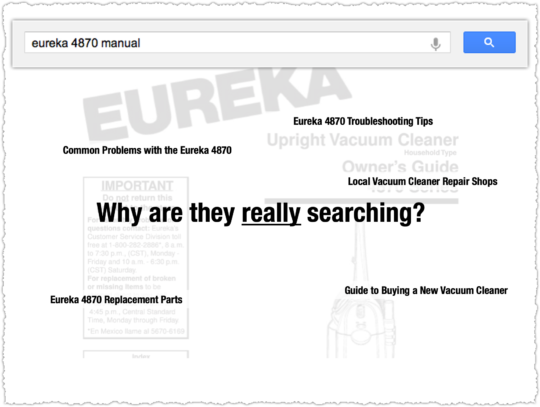

Here’s one example I’ve used again and again in presentations. It’s a set of two slides where I ask why someone is searching for a ‘eureka 4870 manual’.

Someone usually says something like, ‘because the vacuum isn’t working’. Bingo!

Satisfying active intent with that manual is okay.

But if you know why they’re really searching you can deliver not just the manual but everything else they might need or search for next.

Let’s go with one more example. If you are a local directory site, you usually have reviews from your own users prominently on the page. But it’s natural for people to wonder how other sites rate that doctor or coffee shop or assisted living facility.

My recommendation is to display the ratings from other sites there on your own page. Why? Users want those other ratings. By not having them there you chase users back to the search results to find those other ratings.

This type of pogosticking behavior sends poor signals to Google and you may simply never get that user back. So show the Yelp and Facebook rating! You can even link to them. Don’t talk to me about lost link equity.

Yes, maybe some users do click to read those Yelp reviews. But that’s not that bad because to Google you’ve still satisfied that initial query. The user hasn’t gone back to the SERP and selected Yelp. Instead, Google simply sees that your site was the last click in that search session.
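Here's a toy Python sketch of the difference between those two kinds of sessions. It labels each click in a single search session as a short click (a quick pogostick back to the SERP), a long click, or the last click. The dwell thresholds are hypothetical numbers picked for illustration; Google hasn’t published how it classifies clicks.

```python
from dataclasses import dataclass

@dataclass
class SerpClick:
    url: str
    dwell_seconds: float  # time on the page before returning to the SERP

SHORT_CLICK_SECONDS = 30  # hypothetical threshold for a pogostick bounce
LONG_CLICK_SECONDS = 120  # hypothetical threshold for a satisfied long click

def label_session(clicks):
    """Label each SERP click in one session, in click order."""
    labels = []
    for i, c in enumerate(clicks):
        if i == len(clicks) - 1:
            # The final SERP click ends the session, whatever its dwell time.
            labels.append((c.url, "last"))
        elif c.dwell_seconds < SHORT_CLICK_SECONDS:
            labels.append((c.url, "short"))
        elif c.dwell_seconds >= LONG_CLICK_SECONDS:
            labels.append((c.url, "long"))
        else:
            labels.append((c.url, "medium"))
    return labels
```

In the pogostick session the directory page is a short click and Yelp is the last click. When the directory page shows the Yelp rating, it becomes the last click, even if the user then follows an outbound link to Yelp, because that click never happened on the SERP.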

No tricks, hacks or gimmicks. Knowing engagement matters simply means satisfying users with valuable content and good UX.

Navboost

Even before I knew about Navboost I was certain that brands had an advantage due to aided awareness. That meant I talked about being active on social platforms and pushed clients to invest in partnerships.

The idea is to be everywhere your customer is on the Internet. Whether they are on Pinterest or a niche forum or another site I want them to run into my client’s site and brand.

Perhaps the most important expression of this idea is long-tail search optimization. I push clients to scale short-form content that precisely satisfies long-tail query intent. These are usually top-of-funnel queries that don’t lead to a direct conversion.

Thing is, it’s one of the cheapest forms of branding you can do online. Done right, you get positive brand exposure to people in-market for that product. And that all adds up.

Because the next time a user searches – this time a more mid or bottom-of-funnel term – they might recognize your brand, associate it with that prior positive experience and … click!

I’ve proven this strategy again and again by looking at first-touch attribution reports with 30, 60 and 90 day lookback windows. This type of multi-search user-journey strategy builds your brand and, over time, may help you punch above your rank from a CTR perspective.
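For those who haven’t run these reports, here's a minimal sketch of first-touch attribution with a configurable lookback window. The journey data and channel labels are hypothetical, and analytics platforms each implement attribution in their own way.

```python
from datetime import date, timedelta

def first_touch(touches, conversion_day, lookback_days):
    """Return the channel of the earliest touch inside the lookback window.

    touches: (day, channel) tuples for one user's visits before converting.
    Returns None when no touch falls inside the window.
    """
    window_start = conversion_day - timedelta(days=lookback_days)
    in_window = [t for t in touches if window_start <= t[0] <= conversion_day]
    if not in_window:
        return None
    return min(in_window, key=lambda t: t[0])[1]

# Hypothetical journey: a long-tail visit, later a brand search, then a paid click.
journey = [
    (date(2023, 6, 1), "organic long-tail"),
    (date(2023, 7, 20), "organic brand"),
    (date(2023, 8, 1), "paid"),
]
```

For a conversion on August 5, the 30 and 60 day windows credit the brand search, while the 90 day window reaches back to the June long-tail visit. Widening the window is how that earlier top-of-funnel exposure becomes visible.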

Here’s one more example. I had a type of penicillin moment a number of years ago. I was doing research on one thing and blundered into something else entirely.

I was researching something else using Google Consumer Surveys (RIP) when I learned users wanted a completely different meta description.

Meta description! Not a ranking factor right? It’s not a direct ranking factor.

When we changed from my carefully crafted meta description template to this new meta description the CTR jumped across the board. Soon after we saw a step change in ranking and the client became the leading site in that vertical and hasn’t looked back.

NLP

Google understands language differently than you and me. They turn language into math. I understand some of it but the details are often too arcane. The concepts are what really matter.

What it boils down to in practice is a vigilance around syntax. Google will often say that they understand language better as they launch something like BERT. And that’s true. But the reaction from many is that it means they can ‘write for people’ instead of search engines.

In theory that sounds great. In practice, it leads to a lot of very sloppy writing that will frustrate both your readers and Google. 12 years ago I urged people to stop writing for people. Read that piece first before you jump to conclusions.

Writing with the right syntactic structure makes it easier for Google to understand your content. Guess what? It does the same for users too.

I have another pair of slides that show an exaggerated difference in syntax.

I’d argue that content that is more readable and easier to understand is more valuable – that je ne sais quoi quality that Google seeks.

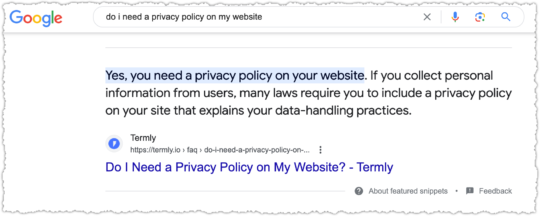

Nowhere is syntax more important than when you’re trying to obtain a featured snippet.

However, it’s also important for me to understand when Google might be employing different types of NLP. Some types of content benefit from deep learning models like BERT. But other types of content and query classes may not, and may still rely on a bag-of-words (BoW) model like BM25.

For the latter, that means I might be more free to visualize information since normal article content isn’t going to lead to a greater understanding of the page. It also means I might be far more vigilant about the focus of the page content.
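For reference, here's a compact Python sketch of textbook Okapi BM25. It only counts term matches, weighted by how rare each term is and normalized for document length, with no notion of word order or context. The defaults `k1=1.2` and `b=0.75` are common choices and the IDF is the non-negative variant Lucene uses; nothing here is a claim about what Google runs.

```python
import math
from collections import Counter

def bm25_scores(query, docs, k1=1.2, b=0.75):
    """Score each tokenized doc against a tokenized query with Okapi BM25.

    Uses the non-negative IDF variant log(1 + (N - n + 0.5) / (n + 0.5)).
    """
    n_docs = len(docs)
    avgdl = sum(len(d) for d in docs) / n_docs
    # Document frequency: how many docs contain each term at least once.
    df = Counter(term for d in docs for term in set(d))
    scores = []
    for d in docs:
        tf = Counter(d)
        score = 0.0
        for term in query:
            if tf[term] == 0:
                continue
            idf = math.log(1 + (n_docs - df[term] + 0.5) / (df[term] + 0.5))
            # Term frequency saturates via k1; b controls length normalization.
            norm = tf[term] + k1 * (1 - b + b * len(d) / avgdl)
            score += idf * tf[term] * (k1 + 1) / norm
        scores.append(score)
    return scores
```

A page that never uses the query terms scores zero no matter how useful it is, which is why page focus and explicit wording matter more when a bag-of-words model is in play.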

Interleaving

Google performs far more algorithm tests than are reported on industry sites and the number of tests has accelerated over the last three years.

I see this because I regularly employ rank indices for clients that track very uniform sets of query classes. Some of these indices have data for more than a decade.

What I find are patterns of testing that produce a jagged-tooth trend line for average rank. These wind up being either two steps forward and one step back (good) or one step forward and two steps back (bad).

I’m pretty sure I can see when a test ends because it produces what I call a dichotomous result where some metrics improve but others decline. An example might be when the number of terms ranking in the top three go up but the number of terms ranking in the top ten go down.
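Here's a simplified sketch of how that dichotomous check could be automated against a rank index. Each snapshot is a list of best positions for the tracked queries (`None` when a query doesn’t rank). The metric names and the flagging rule are a hypothetical simplification, not a statistical test.

```python
def rank_metrics(ranks):
    """Summarize one snapshot of a rank index.

    ranks: best position per tracked query, or None if the query doesn't rank.
    """
    ranked = [r for r in ranks if r is not None]
    return {
        "avg_rank": sum(ranked) / len(ranked) if ranked else None,
        "top3": sum(1 for r in ranked if r <= 3),
        "top10": sum(1 for r in ranked if r <= 10),
    }

def is_dichotomous(before, after):
    """True when the top-3 and top-10 counts move in opposite directions."""
    d3 = after["top3"] - before["top3"]
    d10 = after["top10"] - before["top10"]
    return (d3 > 0 and d10 < 0) or (d3 < 0 and d10 > 0)
```

Comparing snapshots on either side of a suspected test boundary flags days where more terms reach the top three while fewer hold the top ten, exactly the mixed signal described above.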

Understanding the velocity of tests and how they might be performed allows me to calm panicked clients and keep them focused on the roadmap instead of spinning their wheels for no good reason.

TL;DR

Pandu Nayak’s anti-trust testimony provided interesting and educational insights into how Google search really works. From Navboost and Glue to deep learning models like DeepRank, the details can make you a better SEO.

Notes: Auditory accompaniment while writing included OutRun by Kavinsky, Redline by Lazerhawk, Vegas by Crystal Method and Invaders Must Die by The Prodigy.


Comments About What Pandu Nayak Taught Me About SEO


Gary // November 16th 2023

AJ, love the summary points here, how you translate this into actionable work and your case studies from personal experience.

Since Navboost uses 13 months of user click behavior data, do you think that even with a site improving onpage intent matching to the query, you will still have to realistically wait it out several months to see a significant bump?

The reason being, it takes time for the older data to not be used when scoring you.

AJ Kohn // November 17th 2023

Thank you Gary. And yes, it does take time.

I have no real inside knowledge but my sense is that the signals run on something like a 90 day moving average. That’s from years of experience in working on projects meant to influence engagement and seeing how long it takes to see results.

Andriy Terentyev // December 11th 2023

Another hypothesis is that the data is refreshed each time with algorithm updates several times a year.

Richard Hearne // November 17th 2023

Well worth reading the full transcript. It’s just such a shame there’s so much REDACTED in the exhibits.

AJ Kohn // November 17th 2023

Agreed Richard! The full transcript is fascinating and Nayak’s personality also really shines through. The redactions are a shame but I’m happy to get this much.

Matt Tutt // November 17th 2023

Great article AJ. One question – how on earth can you write about such a mentally taxing, in-depth topic whilst listening to such a playlist? Genuinely curious!

AJ Kohn // November 17th 2023

Thanks Matt. Most of that playlist doesn’t have lyrics. It’s synthwave or electronic music that actually keeps me focused and driving forward.

Allen // November 17th 2023

Hey AJ!

Thanks again for a fascinating article.

I have a question about the query documents mentioned in RankBrain. They mention running it on 20-30 documents.

Does this refer to essentially the top 30 pages matching a query? — then they run rankbrain to help determine how to rank them?

Not sure if I am understanding correctly.

Thanks again! So glad I started to follow. This is the type of content I love to read when it comes to SEO!

AJ Kohn // November 17th 2023

Appreciate that Allen and it’s nice to hear that people in the industry want this type of content. I wasn’t sure that was still the case.

As for RankBrain, my reading of it is that RankBrain is computationally expensive so it is only applied to a subset of candidates. So, yes, only when you get down to a manageable amount of between 20 to 40 perhaps.

Toby Graham // November 17th 2023

Thanks AJ, that’s an excellent summary. You don’t include how you feel about Google misleading SEOs by saying “we do not use users signal” for so many years, so how do you feel about that?

AJ Kohn // November 17th 2023

Thanks Toby. And how do I feel? Well, in almost all the cases they were technically right when we asked these questions.

“Does click-through rate impact rankings?” Does that specific metric impact rankings? No. But it does in the context of a larger user interaction model.

In general, I understand why they didn’t want to talk about it then and why they’re more comfortable about doing so now. The many Googlers who I’ve been lucky to meet and talk to are all committed to doing the right things for search. So it’s hard for me to get mad about this, particularly when they never went on the offensive.

They could have done far more to undermine my take on this topic when I first wrote about it in 2015. So I’m not particularly upset at them.

Victor Pan // November 17th 2023

Lots of twists and turns in emotions, from surprise to respect to affirmation.

I’ve got a lot of respect for Googlers and how they’ve communicated their work. I’m reminded of the interview Barry re-shared with Hyung-jin Kim on the Coati algorithm, and how many ranking factors were added to make the web better (and probably cheaper for Google to process the web). Illyes talks about 2nd-order effects as well.

I’m also not mad about the moments where Googlers had to be intentionally “technical” to be honest or “vague” to drive a better web. If anything, it just affirmed rule #1 of the linkerati. You don’t talk about it, and everyone goes on building trust with one another because the end goal is the same. Life’s too short to make the web worse for the next generation.

AJ Kohn // November 17th 2023

Right there with you Victor. All of the Googlers I’ve had the opportunity to talk with are clearly working to make search and the web better.

So I too am not mad when they’re parsing questions and answering them in ways that are still true but may not provide the whole truth.

Mervin Lance // November 19th 2023

A really great read AJ! I’m currently working with a client where Navboost is probably the main algorithm keeping them from ranking at number 1, mostly because their competitor is wildly popular as a brand. For context, my client has only about one-third of their popularity (in the range of a million brand searches).

I’ve long speculated that Google would, of course, preferentially rank more popular brands where all other factors are assumed equal.

I wish there more evidence of this so I don’t come across as speculative when suggesting that brand-building matters in the context of SEO. But this article definitely helps!

AJ Kohn // November 20th 2023

Thanks Mervin. And when it comes to these types of situations you have to think about potential brand bias as well as engagement on your own site.

I think of it as a two-pronged approach: build brand equity and improve user experience. If you continually do those two things you have a better chance of overtaking that popular competitor.

Dmitriy Shelepin // November 20th 2023

Hi AJ. Thanks for this post! What do you think about the idea that not only clicks from search are included in the ranking algorithm, but also highly motivated referral traffic with good user engagement? We made some experiments around that and got a significant boost in rankings (top 3) in a highly competitive niche, without any backlinks, and established topical authority. In fact, this was the first piece on the topic. Chrome browser definitely is sending some data, which might be used. What do you think?

AJ Kohn // November 20th 2023

No problem Dmitriy. There have been theories about the use of Chrome data or the DoubleClick cookie for quite some time. I don’t think these scale well given that they don’t cover all users and would be incomplete, potentially injecting bias into that data. It’s possible they check it from time to time to validate things but I’d be surprised if it was a working model.

However, Ranking documents based on user behavior and/or feature data is a patent that deals with user interactions on links. The layman term for this is the Reasonable Surfer Model, which assigns more weight to links that have a higher probability of being clicked.

In general, I think traffic from a link is usually a good proxy for a highly weighted link. So you may find success if you’re getting a bunch of referral traffic combined with high topical relevance.

Valentin // November 20th 2023

Thanks for the great summary. Since I’ve always been very curious about IR I really appreciate your work.

Too bad that they didn’t go into more detail on “core topicality signals”. Topicality seems very straightforward at first glance (sites which focus on a topic are more likely to succeed for related queries). I wonder how it works for general news sites, which typically cover an endless range of topics. My guess is that the reduced topical fit gets somehow balanced by other signals.

AJ Kohn // November 20th 2023

Thanks Valentin. Topicality is a pretty big deal for Google and may be something they have to lean on more to fix the issue of brands being able to rank for any topic.

I think news sites likely have their own categorization and are generally treated differently, particularly in what surfaces in the News unit.

aaron wall // November 23rd 2023

“The user hasn’t gone back to the SERP and selected Yelp. Instead, Google simply sees that your site was the last click in that search session.”

I think this is mostly correct, but where they own Android or Chrome they could see that sequence and, to some degree, cut out the intermediate step by creating a boost toward the other site the user wound up on. No way they don’t at least consider that data as a signal.

—

“Since Navboost uses 13 months of user click behavior data, do you think that even with a site improving onpage intent matching to the query, you will still have to realistically wait it out several months to see a significant bump?”

Navboost would be just as much about aided awareness from brand & repeat user visits as it would be by using better copy to get the clicks. Some aspects of data are folded in regularly for highly trusted sites, whereas lower trust sites might have batch processing until they meet some aggregate thresholds (age, click count, etc).

—

‘“Does click-through rate impact rankings?” Does that specific metric impact rankings? No. But it does in the context of a larger user interaction model.”‘

One part that was particularly disingenuous of them when answering these questions is they would typically clarify the user question into CTR as a strawman to debunk the category, and then broaden out the answer to say user signals are rather noisy. That said, they had every incentive to keep people focusing on other things so their own jobs were easier.

—

“I wish there more evidence of this so I don’t come across as speculative when suggesting that brand-building matters in the context of SEO. But this article definitely helps!”

Many years ago there was an Eric Schmidt quote that brands were how you sort out the cesspool. That statement + the Vince algorithm that surfaced brands + the shopping features on the sidebar of the SERP that were recently tested using brands as filters + the prior post here on Goog enough + this reference testimony is more than enough to have concrete certainty in the power of brand.

—

“Chrome browser definitely is sending some data, which might be used. What do you think?”

I have seen some Android Chrome data spill over like this.

Jacek Wieczorek // November 23rd 2023

Well, the first thought is about gaming the algo. In most cases it will be extremely hard but what do you think about local long tail keywords? It’s not a problem to buy 50 phones, change IP addresses frequently and perform google searches to pick our clients’ urls.

It’s definitely worth testing. I’d be happy to engage in this kind of test.

AJ Kohn // November 23rd 2023

Interesting idea Jacek. They have models they can extend for long-tail queries where they don’t have enough click data.

The question is whether their anti-spam click tracking has evolved to the point of identifying and neutralizing those types of long-tail clicks. I think they’ve made great strides here over the last number of years but there’s only one way to find out.

aaron wall // November 29th 2023

Some of the Google patents discuss developing trust ratings around users similar to how they do for particular publishers.

Large spikes from totally new and previously unused devices would likely also require a mix of other users doing similar searches for it to sort of paper over the artificiality of it.

AJ Kohn // November 30th 2023

Correct Aaron. I think it might be quite difficult to game user interaction signals today.

The only way I can see it working is if you’ve been running a botnet for years and then lease it out for these purposes. But even then the anomaly detection that can now be performed might catch the sudden increase in activity for a cluster of queries.

Nate Kay // December 09th 2023

Thanks for putting in the time and effort into this!

aaron wall // December 30th 2023

There are some VPN providers who offer free VPN services & then recoup the costs of the distribution & tech by running synthetic queries on hidden browsers to allow others access to the residential IPs for data scraping.

As one example, BrightData is the rebrand of the Luminati.io tied to Hola & discussed about a half-decade ago here.

Lawrence Ozeh // January 04th 2024

I reread this post because it randomly came back to me that someone had covered this aspect of Google. Incredible work AJ.
