(This post is an experiment of sorts since I’m publishing it before my usual hardcore editing. I’ll be going back later to edit and reorganize so that it’s a bit less Jack Kerouac in style. I wanted to publish this version now so I could get some feedback and get back to my client work. You’ve been warned.)

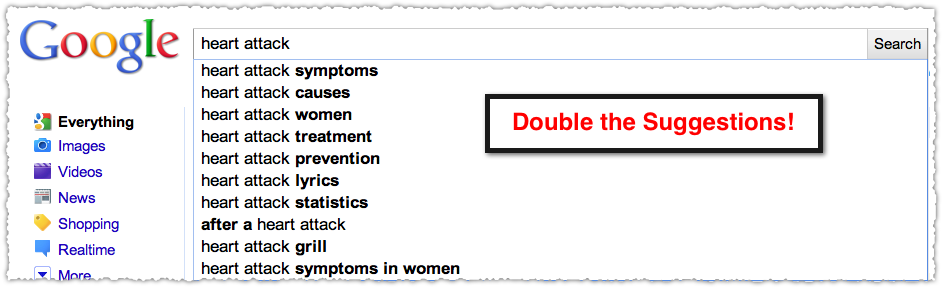

I’ve been on Google+ for one week now and have collected some thoughts on the service. This won’t be a tips and tricks style post since I believe G+ (that’s the cool way to reference it now) will evolve quickly and what we’re currently seeing is a minimum viable product (MVP).

In fact, while I have enjoyed the responsiveness that the G+ team has shown, it echoes what I heard during Buzz. One of my complaints about Buzz was that they didn’t iterate fast enough. So G+, please go ahead and break things in the name of speed. Ignore the howling in the interim.

Circles

Circles is clearly the big selling point for G+. I was a big fan of the presentation Paul Adams put together last year that clearly serves as the foundation to Circles. The core concept was that the way you share offline should be mirrored online. My family and high school friends probably don’t want to be overwhelmed with all the SEO related content I share. And if you want to share a personal or intimate update, you might want to only share that with family or friends.

It made perfect sense … in theory.

I’m not sure Circles works in practice, or at least not the way many thought it would. The flexibility of Circles could be its Achilles’ heel. I have watched people create a massive ordered list of Circles for every discrete set of people. Conversely, I’ve seen others just lump everyone into a big Circle. Those in the latter group seem unsettled, thinking that they’re doing something wrong by not creating more Circles.

Of course there is no right or wrong way to use Circles.

But I believe there are two forces at work here that influence the value of Circles. First is the idea of configuration. I don’t think many people want to invest time into building Circles. These Circles are essentially lists, which have been tried on both Facebook and Twitter. Yet both of these social giants have relegated lists to the margins of their user interfaces. Was this because people didn’t set them up? Or that once they set them up they didn’t use them?

I sense that Facebook and Twitter may have realized that the stated need for lists or Circles simply didn’t show up in real life usage. This is one of those problems with qualitative research. Sometimes people say one thing and do another.

As an aside, I think most people would say that more is better. That’s why lists sound so attractive. Suddenly you can really organize and you’ll have all these lists and you’ll feel … better. But there is compelling research that shows that more choice leads to less satisfaction. Barry Schwartz dubbed it The Paradox of Choice.

The Paradox of Choice has been demonstrated with jam, where sales were higher when shoppers were offered six choices instead of twenty-four. It has also been observed in 401(k) participation: the more mutual fund choices available, the lower the participation in the program.

Overwhelmed with options, we often simply opt-out of the decision and walk away. And even when we do decide, we are often less satisfied since we’re unsure we’ve made the right selection. Those who scramble to create a lot of lists could fall prey to the Paradox of Choice. That’s not the type of user experience you want.

The second thing at work here is the notion that people want to share online as they do offline. Is that a valid assumption? Clearly, if you’re into cycling (like I am) you probably only want to share your Tour de France thoughts with other cyclists. But the sharing dynamic may have changed. I wrote before that Google has a Heisenberg problem in relation to measuring the link graph. That by the act of measuring the link graph they have forever changed it.

I think we may have the same problem in relation to online sharing. By sharing online we’ve forever changed the way we share.

If I correctly interpret what FriendFeed (which is the DNA for everything you’re seeing right now), and Paul Buchheit in particular, envisioned, it was that people should share more openly. That by sharing more, you could shine light on the dark corners of life. People could stop feeling like they were strange, alone or embarrassed. Facebook too seems to have this same ethos, though perhaps for different reasons – or not. And I think many of us have adopted this new way of sharing. Whether it was done intentionally at first or not becomes moot.

So G+ is, in some ways, rooted in the past, of the way we used to share.

Even if you don’t believe that people are now more willing to share more broadly, I think there are a great many differences in how we share offline versus how we share online. First, the type and availability of content is far greater online. Tumblr quotes, LOLcats, photos and a host of other types of media are quickly disseminated. The Internet has seen an explosion of digital content that runs through a newly built social infrastructure. In the past, you might share some of the things you’d seen recently at a BBQ or the next time you saw your book group. Not anymore.

Also, the benchmark for sharing content online is far lower than it is offline. The ease with which you can share online means you share more. The share buttons are everywhere and social proof is a powerful mechanism.

You also can’t touch and feel any of this stuff. For instance, think about the traditional way you sell offline. The goal is to get the customer to hold the product, because that greatly increases the odds they’ll purchase. But that’s an impossibility online.

Finally, you probably share with more people. The social infrastructure built over the last five years has allowed us to reconnect with people from the past. We continue to share with weak ties. I’m concerned about this since I believe holding onto the past may prevent us from growing. I’m a firm believer in Dunbar’s number, so the extra people we choose to share with wind up being noise. Social entropy must be allowed to take place.

Now Circles might support that since you can drop people into a ‘people I don’t care about’ Circle that is never used. (I don’t have this Circle, I’m just saying you could!) But then you simply wind up with a couple of Circles that you use on a frequent basis. In addition, the asymmetric model encourages people to connect with more people, which flies in the face of this hardwired number of social connections we can maintain.

Lists and circles also rarely work for digesting content. Circles is clearly a nice way to segment and share your content with the ‘right’ people. But I don’t think Circles are very good as a content viewing device.

You might make a Circle for your family. Makes perfect sense. And you might then share important and potentially sensitive information using this Circle. But when you look at the content feed from that Circle, what do you get? It would not just be sensitive family information.

If your brother is Robert Scoble you’d see a boatload of stuff there. That’s an extreme example, but let’s bring it to the more mundane example of, say, someone who is a diehard sports fan. Maybe that family member would share only with his sports buddies, but a lot of folks are just going to broadcast publicly and so you get everything from that person.

To put it more bluntly, people are not one-dimensional.

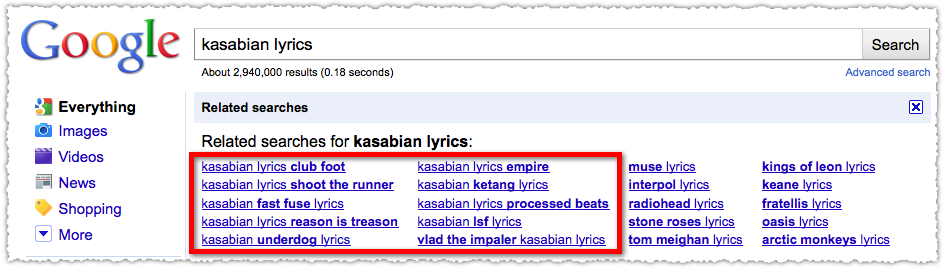

I love bicycling. I also have a passion for search and SEO. I also enjoy books, UX, LOLcats and am a huge Kasabian fan. If you put me in an SEO Circle, there’s a good chance you’ll get LOLcats and Kasabian lyrics mixed in with my SEO stuff. In fact, most of my stuff is on Public, so you’ll get a fire hose of my material right now.

Circles is good for providing a more relevant sharing mechanism, but I think it’s a bit of a square peg in a round hole when it comes to digesting content. That’s further exacerbated by the fact that the filtering capabilities for content are essentially on and off (mute) right now.

Sure, you could segment your Circles ever more finely until you found the people who were just talking about the topic you were interested in, but that would probably be a small group. And if you had more than one interest (which is, well, pretty much everyone) then you’d need lots of Circles. And with lots of Circles you run into the Paradox of Choice.

Conversation

I’ve never been a fan of using Twitter to hold conversations. The clipped and asynchronous style of banter just doesn’t do it for me. FriendFeed was (is?) the place where you could hold real debate and discussion. It provided long-form commenting ability.

G+ does a good job fostering conversation, but the content currently being shared and some of the feature limitations may be crushing long-form discussions and instead encouraging ‘reactions’.

I don’t want a stream of six-word YouTube-like comments. That doesn’t add value. I’m purposefully using this terminology because I think delivering value is important to Google. Comments should add value and there is a difference in comment quality. And yes, you can influence the quality of comments.

Because if the comments and discussion are engaging you will win my attention. And that is what I believe is most important in the social arms race we’re about to witness.

Attention

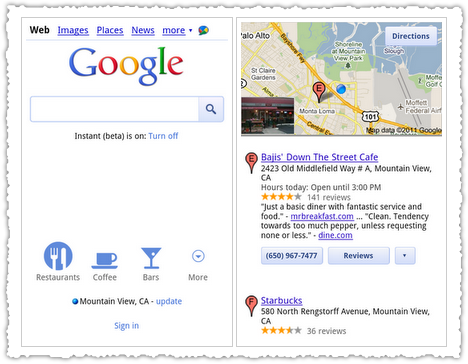

There is a war for your attention and Facebook has been winning. G+ must fracture that attention before Facebook really begins to leverage the Open Graph and provide search and discovery features. As it stands Facebook is a search engine. The News Feed is simply a passive search experience based on your social connections and preferences. Google’s talked a lot about being psychic and knowing what you want before you do. Facebook is well on their way there in some ways.

User Interface

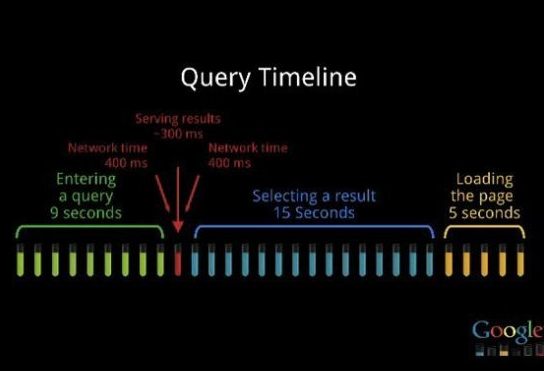

If there’s one thing that Google got right it was the Red Number user interface. It is by far the most impressive part of the experience and feeds your G+ addiction and retains your attention.

The Red Number sits at the top of the page on G+, Google Reader, Google Search and various other Google products. It is nearly omnipresent in my own existence. (Thank goodness it’s not on Google Analytics or I really wouldn’t get any work done.) The red number indicator is a notifier, a navigation aid and an engagement feature all in one. It is epic.

It is almost scary though, since you can’t help but want to check what’s going on when that number lights up and begins to increment. It’s Pavlovian in nature. It inspired me to put together a quick LOLcat mashup.

It draws you in (again and again) and keeps you engaged. It’s a very slick user interface and Google is smart to integrate this across as many properties as possible. This one user interface may be the way that G+ wins in the long run since they’ll have time to work out the kinks while training us to respond to that red number. The only way it fails is if that red number never lights up.

I’ll give G+ credit for reducing a lot of the friction around posting and commenting. The interactions are intuitive but are hamstrung by Circles as well as the display and ordering of content.

Content

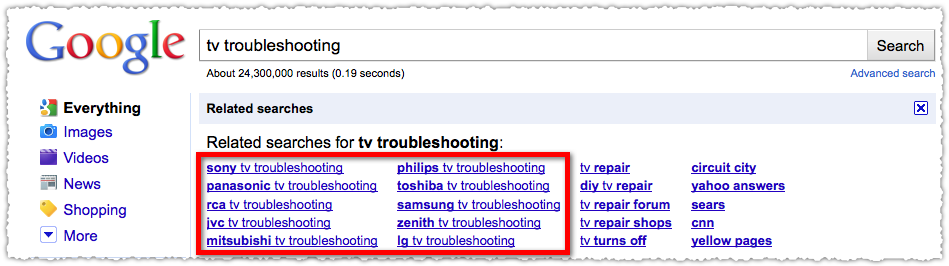

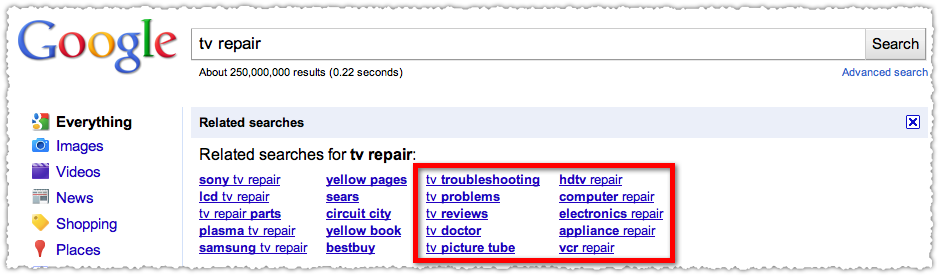

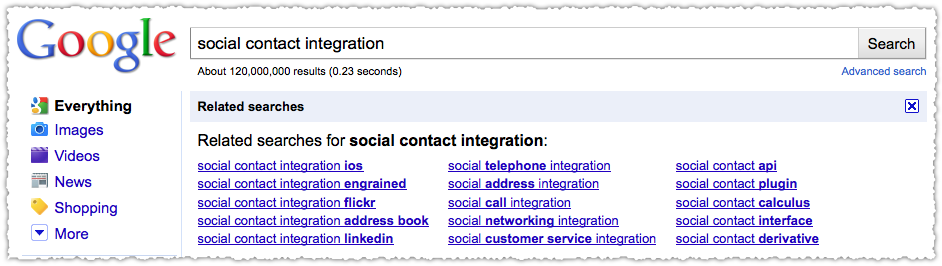

There is no easy way to add content to G+ right now. In my opinion, this is hugely important because content is the kindling to conversation. Good content begets good conversation. Sure, we could all resort to creating content on G+ through posting directly, but that’s going to get old quickly. And Sparks as it now stands is not effective in the slightest. Sorry, but this is one feature that seems half-done (and that’s being generous). Right now the content through Sparks is akin to a very unfocused Google alert.

I may be in the minority in thinking that social interactions happen around content, topics and ideas far more often than they do around people. I might interact with people I’m close to on a more personal level, responding to check-ins and status updates but for the most part I believe it’s about the content we’re all seeing and sharing.

I really don’t care if you updated your profile photo. (Again, I should be able to not see these by default if I don’t want to.)

Good content will drive conversation and engagement. The easiest way to effect that is by aggregating the streams of content we already produce. This blog, my YouTube favorites, my Delicious bookmarks, my Google Reader favorites, my Last.fm favorites and on and on and on. Yes, this is exactly what FriendFeed did and it has, in many ways, failed. As much as I love the service, it never caught on with the mainstream.

I think some of this had to do with configuration. You had to configure the content streams and those content streams didn’t necessarily have to be yours. But we’ve moved on quite a bit since FriendFeed was introduced and Google is adhering to the Quora model, and requiring people to use their real names on their profiles.

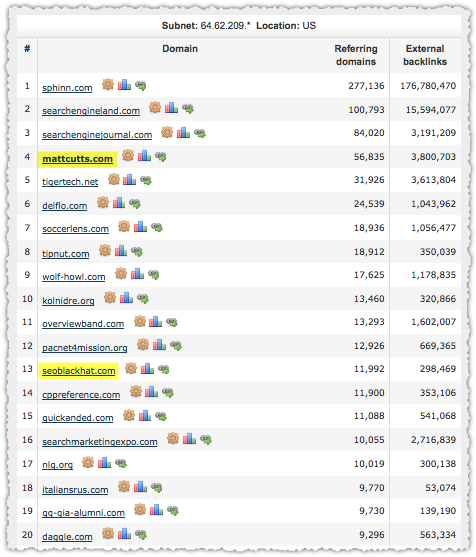

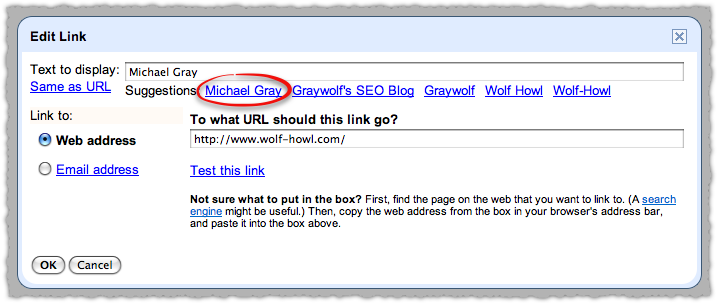

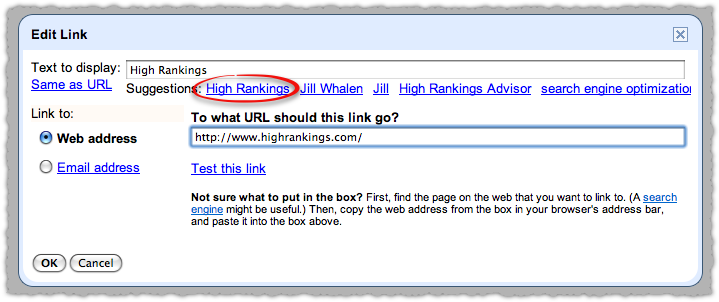

Google is seeking to create a better form of identity, a unified form of identity it can then leverage for a type of PeopleRank signal that can inform trust and authority in search and elsewhere. But identity on the web is fairly transparent as we all have learned from Rapleaf and others who still map social profiles across the web. Google could quite easily find those outposts and prompt you to confirm and add them to your Google profile.

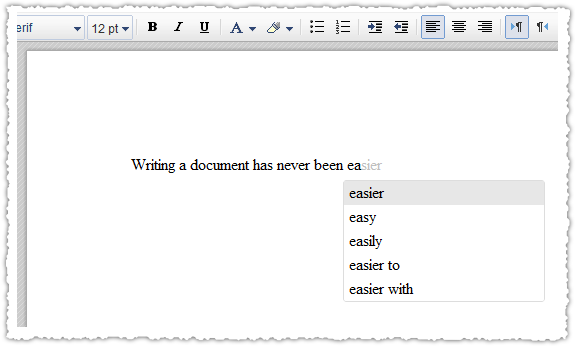

Again, we’ve all become far more public and even if email is not the primary key, the name and even username can be used with a fairly high degree of confidence. Long story short, Google can short-circuit the configuration problem around content feeds and greatly reduce the friction of contributing valuable content to G+.

By flowing content into G+, you would also increase the odds of that red number lighting up. So even if you haven’t visited G+ in a day (heck, I can’t go an hour right now unless I’m sleeping) you might get drawn back in because someone gave your Last.fm favorite a +1. Suddenly you want to know who likes the same type of music you do and you’re hooked again.

Display

What we’re talking about here is aggregation, which has turned into a type of dirty word lately. And right now Google isn’t prepared for these types of content feeds. They haven’t fixed duplication detection so I see the same posts over and over again. And there are some other factors in play here that I think need to be fixed prior to bringing in more content.

People don’t quite understand Circles and seem compelled to share content with their own Circles. The +1 button should really do this, but then you might have to make the +1 button conditional based on your Circles (e.g., I want to +1 this bicycling post to my TDF Circle). That level of complexity isn’t going to work.

At a minimum they’ll need to collapse all of the shares into one ‘story’. The dominant story should be the one that you’ve interacted with. Barring prior interaction, it should be the one that comes from someone in your Circles, and if more than one comes from your Circles, then the most recent or the first from that group.
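To make that rule concrete, here is a minimal sketch of how shares of the same item might be collapsed into one story. Everything in it is hypothetical: the `Share` record, its field names and the `collapse` function are made up for illustration, and it picks "most recent" where the rule leaves a choice between most recent and first. It is not how G+ actually works.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Dict, Iterable, List


@dataclass(frozen=True)
class Share:
    # Hypothetical record for one share of a piece of content.
    url: str             # the shared item, used here as the duplicate key
    sharer: str          # who shared it
    shared_at: datetime  # when it was shared
    in_my_circles: bool  # is the sharer in one of my Circles?
    i_interacted: bool   # have I commented on or +1'd this share?


def dominant_share(shares: List[Share]) -> Share:
    """Pick the share that should represent the whole story.

    Priority: a share I interacted with, then a share from someone in
    my Circles, then the most recent share (an assumed tie-breaker).
    """
    # Tuples compare left to right and True sorts above False.
    return max(shares, key=lambda s: (s.i_interacted, s.in_my_circles, s.shared_at))


def collapse(shares: Iterable[Share]) -> List[Share]:
    """Group shares by URL and keep one dominant share per story."""
    groups: Dict[str, List[Share]] = {}
    for share in shares:
        groups.setdefault(share.url, []).append(share)
    return [dominant_share(group) for group in groups.values()]
```

The single sort key keeps the precedence easy to read and easy to change, which matters if the tie-breaker turns out to be wrong.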

In addition, while the red number interface does deliver the active discussions to me, I think the order of content in the feed will need to change. Once I interact on an item it should be given more weight and float to the top more often, particularly if someone I have in my Circles is contributing to the discussion there.
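As a rough illustration of that kind of weighting, here is a small sketch. The `Post` fields, the boost values and the recency formula are all assumptions made up for this example. The only part taken from the argument above is the shape: interaction adds weight, and a Circle member joining the discussion adds more.

```python
from dataclasses import dataclass
from typing import List

# Hypothetical weights, chosen only to make the example readable.
INTERACTION_BOOST = 2.0
CIRCLE_COMMENT_BOOST = 1.5


@dataclass
class Post:
    title: str
    hours_since_last_activity: float
    i_interacted: bool             # have I commented on or +1'd it?
    circle_member_commented: bool  # is someone in my Circles in the thread?


def feed_score(post: Post) -> float:
    """Score a post: recency first, then boosts for my involvement."""
    # Simple recency decay: newer activity scores closer to 1.0.
    score = 1.0 / (1.0 + post.hours_since_last_activity)
    if post.i_interacted:
        score *= INTERACTION_BOOST
        # The extra boost applies only to threads I already joined.
        if post.circle_member_commented:
            score *= CIRCLE_COMMENT_BOOST
    return score


def order_feed(posts: List[Post]) -> List[Post]:
    """Return posts with the highest-scoring items first."""
    return sorted(posts, key=feed_score, reverse=True)
```

With these made-up numbers, a three-hour-old thread I joined and a Circle member is active in outranks a fresh post I have never touched.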

Long-term it would also be nice to pin certain posts to the top of a feed if I’m interested in following the actual conversation as it unfolds.

The display of content needs to get better before G+ can confidently aggregate more content sources.

Privacy

One of the big issues, purportedly, is privacy. I frankly believe that the privacy issue is way overblown. (Throw your stones now.) As an old school direct marketer I know I can get a tremendous amount of information about a person, all from their offline transactions and interactions.

Even without that knowledge, it’s clear that people might talk about privacy but they don’t do much about it. If people truly valued privacy and thought Facebook was violating that privacy you’d see people shuttering their accounts. And not just the few Internati out there who do so to prove a point but everyday people. But that’s just not happening.

People say one thing, but do another. They say they value privacy but then they’ll give it away for a chance to win the new car sitting in the local mall.

Also, it’s very clear that people do have a filter for what they share on social networks. The incidents where this doesn’t happen make great headlines, but the behavioral survey work showing a hesitance to share certain topics on Facebook makes it clear we’re not in full broadcast mode.

But for the moment let’s say that privacy is one of the selling points of G+. The problem is that the asymmetric sharing model exposes a lot more than you might think. Early on, I quipped that the best use of G+ was to stalk Google employees. I think a few people took this the wrong way, and I understand that.

But my point was that it was very easy to find people on G+. In fact, it is amazingly simple to skim the social graph. In particular, by looking at who someone has in their Circles and who has that person in their Circles.

So, why wouldn’t I be interested in following folks at Google? In general, they’re a very intelligent, helpful and amiable bunch. My Google circle grew. It grew to 300 rather quickly by simply skimming the Circles for some prominent Googlers.

The next day or so I did this every once in a while. I didn’t really put that much effort into it. The interface for finding and adding people is quite good – very fluid. So, I got to about 700 in three or four days. And during that time the suggested users feature began to help out, providing me with a never-ending string of Googlers for me to add.

But you know what else happened? It suggested people who were clearly Googlers but were not broadcasting that fact. How do I know that? Well, if 80% of the people in your Circles are Googlers, and 80% of the people who have you in Circles are Googlers, there’s a good chance you’re a Googler. Being a bit OCD I didn’t automatically add these folks to my Google Circle but their social graph led me to others (bonus!) and if I could verify through other means – their posts or activity elsewhere on the Internet – then I’d add them.
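That 80% rule of thumb is simple enough to write down. The sketch below is only an illustration: the `circles` mapping, the function name and the sample names are invented, and there is no claim that G+ exposes its data this way or that the suggestion feature works like this. It shows how little is needed once the social graph is visible.

```python
from typing import Dict, Set


def likely_member(
    person: str,
    circles: Dict[str, Set[str]],
    known_members: Set[str],
    threshold: float = 0.8,
) -> bool:
    """Guess whether `person` belongs to a group from their graph alone.

    `circles` maps each person to the set of people they have circled.
    The 0.8 default mirrors the 80% figure above; it is a hint, not proof.
    """
    following = circles.get(person, set())
    followers = {p for p, circled in circles.items() if person in circled}
    if not following or not followers:
        return False  # nothing visible to judge from
    share_following = len(following & known_members) / len(following)
    share_followers = len(followers & known_members) / len(followers)
    return share_following >= threshold and share_followers >= threshold


# Made-up sample graph: "x" lists no employer but sits inside the group.
known = {"g1", "g2", "g3", "g4", "g5"}
sample = {
    "x": {"g1", "g2", "g3", "g4", "g5"},
    "g1": {"x"}, "g2": {"x"}, "g3": {"x"}, "g4": {"x"},
    "g5": {"x", "y"},
    "y": {"g1", "a", "b", "c"},
    "a": {"y"},
}
```

Here `likely_member("x", sample, known)` comes back true and `likely_member("y", sample, known)` comes back false, which is why a second check through posts or activity elsewhere still matters.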

How many people do I have in my Google circle today?

Now, perhaps people are okay with this. In fact, I’m okay with it. But if privacy is a G+ benefit, I don’t think it succeeds. Too many people will be upset by this level of transparency. Does the very private Google really want someone to be parsing the daily output of its employees? I’m harmless but others might be trolling for something more.

G+ creates this friction because of the asymmetric sharing model and the notion that you only have to share with the people in your circles. Circles ensures your content is compartmentalized and safe. But it exposes your social graph in a way that people might not expect or want.

Yes, I know there are ways to manage this exposure, but configuration of your privacy isn’t very effective. Haven’t we learned this yet?

Simplicity

Circles also has an issue with simplicity. Creating Circles is very straightforward but how content in those Circles is transmitted is a bit of a mystery to many. So much so that there are diagrams showing how and who will see your content based on the Circle permutations. While people might make diagrams just for the fun of it, I think these diagrams are an indication that the underlying information architecture might be too complex for mainstream users. Or maybe they won’t care. But if sharing with the ‘right’ people is the main selling point, this will muddy the waters.

At present there are a lot of early adopters on G+ and many are hell-bent on kissing up to the Google team at every turn. Don’t get me wrong, I am rooting for G+. I like Google and the people that work there and I’ve never been a Facebook fan. But my marketing background kicks in hard. I know I’m not the target market. In fact, most of the people I know aren’t the target market. I wonder if G+ really understands this or not.

Because while my feed was filled with people laughing at Mark Zuckerberg and his ‘awesome’ announcement, I think they missed something, something very fundamental.

Yes, hangouts (video chat) with 10 people are interesting and sort of fun. But is that the primary use case for video chat? No, it’s not. This idea that 1 to 1 video chat is so dreadful and small-minded is simply misguided. Because what Facebook said was that they worked on making that video chat experience super easy to use. It’s not about the Internati using video chat, it’s about your grandparents using video chat.

Mark deftly avoided the G+ question but then, he couldn’t help himself. He brought up the background behind Groups. I’m paraphrasing here, but Zuckerberg essentially said that Groups flourished because everyone knew each other (that’s an eye poke at the asymmetric sharing model) and that ad hoc Groups were vitally important since people didn’t want to spend time configuring lists. Again, this is – in my opinion – a swipe at Circles. In many ways, Zuck is saying that lists fail and that content sharing permissions are done on an ad hoc basis.

Instead of asking people to configure Circles and then manage and maintain them, Facebook is making it easier to just assemble them on the fly through Groups. And the EdgeRank algorithm that separates your Top News from Recent News is their way of delivering the right content to you based on your preferences and interactions. I believe their goal is to automagically make the feed relevant to you instead of forcing the user to create that relevance.

Sure there’s a filter bubble argument to be made, but give Facebook credit for having the Recent News tab prominently displayed in the interface.

But G+ could do something like this. In fact, they’re better placed than Facebook to deliver a feed of relevant information based on the tie-ins to other products. Right now there is essentially no tie-in at all, which is frustrating. A +1 on a website does not behave as a Like. It does not send that page or site to my Public G+ feed. Nor does Google seem to be using Google Reader or Gmail as ways to determine what might be more interesting to me and who I’m really interacting with.

G+

I’m addicted to G+ so they’re doing something right. But remember, I’m not the target market.

I see a lot of potential with G+ (and I desperately want it to succeed) but I worry that social might not be in their DNA, that they might be chasing a mirage that others have already dismissed and that they might be too analytical for their own good.